This post is a response to this month's T-SQL Tuesday prompt. T-SQL Tuesday was created by Adam Machanic and is a way for SQL users to share ideas about interesting topics. This month's topic is Fixing Old Problems with Shiny New Toys.

Why I love JSON

I know that lots of users in the SQL community cringe when they hear about JSON, NoSQL, and other non-relational database storage options. I get it. Lots of times a string storage format compromises the many benefits SQL has to offer: indexing, data integrity, simple querying, oversight into schema changes by developers, etc… I've seen (and written) some queries that perform like molasses in winter solely due to the data being in a non-sargable string format.

Yet, with all of those downsides I still love JSON. I think there's a happy middle ground where DBAs and developers can agree on when it is appropriate to use JSON. Would I ever want to use JSON in a data warehouse that is being heavily queried for analytics? No. Would I also want to use JSON where performance is extremely important? No. Would I want to use it in an application where data or schema integrity is extremely important? No.

However, I love using JSON when capturing dynamically structured user input, especially if the database is being used only to persist the data rather than analyze it. I love having JSON in the database because it so easily serializes/deserializes between JSON and my C# models. If I want low latency in my web app, I also might write the JSON from the app to the database and then have it queued to be transformed into normalized data by an ETL process later on. These reasons in particular are what make me incredibly excited for the new JSON functionality in SQL Server 2016.
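As a rough sketch of that "persist now, normalize later" pattern, the table below stores the raw payload as text and uses SQL Server 2016's ISJSON() function in a check constraint so that at least malformed JSON is rejected. The table, column, and constraint names are hypothetical and only here for illustration.

```sql
-- Hypothetical staging table: every name here is illustrative, not from a real system.
CREATE TABLE dbo.UserInputStaging
(
    Id         int IDENTITY(1,1) PRIMARY KEY,
    ReceivedAt datetime2 NOT NULL DEFAULT SYSUTCDATETIME(),
    -- JSON is stored as plain text; the check constraint only guarantees it parses.
    Payload    nvarchar(max) NOT NULL
        CONSTRAINT CK_UserInputStaging_Payload_IsJson CHECK (ISJSON(Payload) = 1)
);

-- The app writes the serialized model as-is; an ETL job can normalize it later.
INSERT INTO dbo.UserInputStaging (Payload)
VALUES (N'{"name": "Hanson Larson", "tags": ["culpa", "excepteur"]}');
```

The constraint checks that the text is well-formed JSON, not that it matches any particular schema, so this is still a poor fit when schema integrity matters.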

Okay, I guess there are some valid uses for JSON. What are my options for using JSON in SQL Server?

Before SQL Server 2016 was released there was no support for JSON in SQL Server. None. Zilch. Zero. The best thing we had was Phil Factor's amazing JSON parsing user defined function or writing your own CLR.

Phil's function is truly amazing and it cleverly uses some built-in SQL functionality to deserialize almost any type of JSON string you can throw at it. It does however have downsides — the function has a few edge case bugs (try adding a space before any ":" in your JSON), it does not allow for easy querying of the JSON, and the function needs to be added to any database you want to use it on.

I'm not trying to downplay Phil's function — I love it and use it in a lot of my code — but the fact of the matter is that it is a very clever workaround for something that Microsoft wasn't supporting at the time.

So what did JSON parsing look like in the pre-2016 world? Let's take a look at some example data I generated using http://www.json-generator.com/:

-- This version of that data has new lines removed to save space.

-- Please see https://gist.github.com/bertwagner/965acde93706a9a5d772509e56247a1c for the neatly formatted version of the data.

DECLARE @WebsiteJson nvarchar(max) = '{ "Users": [ { "_id": "589f14e8427b0030d59615b1", "index": 0, "guid": "4a5ecd66-8c41-4553-9c1b-7597124b46e3", "isActive": true, "balance": "$1,418.71", "picture": "http://placehold.it/32x32", "age": 26, "eyeColor": "brown", "name": "Hanson Larson", "gender": "male", "company": "KENEGY", "email": "[email protected]", "phone": "+1 (804) 447-3852", "address": "698 Durland Place, Hachita, Louisiana, 1920", "about": "Consectetur laboris sunt proident ullamco ex excepteur duis cillum sit dolor occaecat officia. Sunt amet cupidatat enim mollit esse non minim dolore ullamco minim duis do. Sunt sint aliqua sit excepteur anim proident consequat magna reprehenderit laborum. Voluptate officia et duis sit laborum.\r\n", "registered": "2016-11-09T10:59:38 +05:00", "latitude": -72.754583, "longitude": 15.975401, "tags": [ "culpa", "excepteur", "adipisicing", "reprehenderit", "eiusmod", "officia", "incididunt" ], "friends": [ { "id": 0, "name": "Blake Dodson" }, { "id": 1, "name": "Marta Bullock" }, { "id": 2, "name": "Benson Soto" } ], "greeting": "Hello, Hanson Larson! You have 7 unread messages.", "favoriteFruit": "apple" }, { "_id": "589f14e8547a192d7a11a174", "index": 1, "guid": "a3c6533b-4c50-4054-9517-a03e8aa9c9ec", "isActive": true, "balance": "$3,450.53", "picture": "http://placehold.it/32x32", "age": 28, "eyeColor": "green", "name": "Wood Douglas", "gender": "male", "company": "FARMAGE", "email": "[email protected]", "phone": "+1 (819) 515-3319", "address": "113 Dean Street, Kylertown, Massachusetts, 1666", "about": "Sunt laborum duis pariatur qui esse velit dolore mollit magna aute. Eiusmod velit dolor nostrud dolor culpa labore duis eiusmod sunt Lorem. Adipisicing eu minim voluptate veniam quis eu laboris dolore ipsum. Voluptate deserunt velit qui aliqua cillum. Voluptate in sit mollit irure velit fugiat dolore minim commodo. Commodo ea aliquip cupidatat commodo consequat ipsum laboris. 
Excepteur velit voluptate laborum dolor officia ullamco.\r\n", "registered": "2015-09-22T11:15:47 +04:00", "latitude": -88.972896, "longitude": 35.988156, "tags": [ "tempor", "in", "adipisicing", "nostrud", "officia", "cupidatat", "occaecat" ], "friends": [ { "id": 0, "name": "Vivian Gates" }, { "id": 1, "name": "Quinn Fitzgerald" }, { "id": 2, "name": "Jenifer Lowery" } ], "greeting": "Hello, Wood Douglas! You have 2 unread messages.", "favoriteFruit": "banana" }, { "_id": "589f14e8e9c6c96cd1f0b548", "index": 2, "guid": "4c71a47a-8212-46aa-97e3-bf8c136c49f5", "isActive": true, "balance": "$2,118.35", "picture": "http://placehold.it/32x32", "age": 27, "eyeColor": "green", "name": "Copeland Wiley", "gender": "male", "company": "OPTYK", "email": "[email protected]", "phone": "+1 (801) 416-3699", "address": "824 Bristol Street, Sparkill, Delaware, 1706", "about": "In veniam elit nisi reprehenderit adipisicing veniam magna. Veniam proident commodo deserunt minim eiusmod commodo aliquip voluptate in occaecat id cillum fugiat. Excepteur magna adipisicing officia qui sit dolor nisi pariatur anim excepteur.\r\n", "registered": "2016-09-27T03:54:01 +04:00", "latitude": -52.752953, "longitude": -119.42916, "tags": [ "excepteur", "labore", "irure", "voluptate", "enim", "commodo", "sint" ], "friends": [ { "id": 0, "name": "Harding Sanford" }, { "id": 1, "name": "Tessa Colon" }, { "id": 2, "name": "Washington Jordan" } ], "greeting": "Hello, Copeland Wiley! 
You have 7 unread messages.", "favoriteFruit": "strawberry" }, { "_id": "589f14e857f47268893121d0", "index": 3, "guid": "1ba95a0c-7559-4fdd-b52a-94f5d2c76ca0", "isActive": true, "balance": "$1,955.54", "picture": "http://placehold.it/32x32", "age": 20, "eyeColor": "green", "name": "Kathleen Bradley", "gender": "female", "company": "PASTURIA", "email": "[email protected]", "phone": "+1 (916) 463-3527", "address": "512 Holmes Lane, Chaparrito, District Of Columbia, 1651", "about": "Ea nostrud minim exercitation commodo laborum. Minim irure est sint adipisicing. Voluptate nisi fugiat qui et quis sint nisi ea deserunt laboris est dolor cillum. Ad sit duis nisi culpa aliquip incididunt.\r\n", "registered": "2015-07-20T03:11:35 +04:00", "latitude": -9.512905, "longitude": -108.671577, "tags": [ "eiusmod", "enim", "tempor", "amet", "reprehenderit", "sit", "ut" ], "friends": [ { "id": 0, "name": "Rowe Crawford" }, { "id": 1, "name": "Ginger Bray" }, { "id": 2, "name": "Nichole Bradford" } ], "greeting": "Hello, Kathleen Bradley! You have 6 unread messages.", "favoriteFruit": "banana" }, { "_id": "589f14e8128844a31ccb82e2", "index": 4, "guid": "03e2341e-da4d-48bb-8d6f-006234835828", "isActive": false, "balance": "$1,827.38", "picture": "http://placehold.it/32x32", "age": 32, "eyeColor": "brown", "name": "Colon Mays", "gender": "male", "company": "FREAKIN", "email": "[email protected]", "phone": "+1 (919) 555-3410", "address": "627 Reed Street, Taft, Texas, 1724", "about": "Ullamco magna tempor dolore enim veniam aute incididunt. Deserunt nostrud fugiat reprehenderit consequat deserunt. 
Labore consequat magna pariatur amet mollit aute reprehenderit ea dolore exercitation anim.\r\n", "registered": "2014-06-08T02:13:34 +04:00", "latitude": 35.186997, "longitude": -1.722535, "tags": [ "cillum", "amet", "ad", "enim", "tempor", "amet", "est" ], "friends": [ { "id": 0, "name": "Gladys Rojas" }, { "id": 1, "name": "Luann Craft" }, { "id": 2, "name": "Camille Gibson" } ], "greeting": "Hello, Colon Mays! You have 7 unread messages.", "favoriteFruit": "apple" } ] }';

Basically we have a JSON string that represents five users and some of their attributes (note: I would argue that storing this much data about an individual would be better suited to fit into some structured tables, but alas this was the type of data that was easy to generate for example purposes).

If I wanted to parse out each user's name to use for an infrequently run report or for ETL purposes, this is what my query would have to look like:

SELECT
    NAME,
    StringValue,
    ValueType
FROM
    (
        SELECT
            [NAME],
            StringValue,
            ValueType,
            LAG([NAME],1,0) OVER (ORDER BY element_id, sequenceNo, parent_ID) AS PreviousKey
        FROM
            Sandbox.dbo.parseJSON(@WebsiteJson) -- This is Phil Factor's JSON parsing function: https://www.simple-talk.com/sql/t-sql-programming/consuming-json-strings-in-sql-server/
    ) d
WHERE
    PreviousKey <> 'id'
    AND [NAME] = 'name'

There are a couple of funny things we have to do in pre-2016 SQL to parse this JSON:

- We have to add the parseJSON() function to our database.
- Since there are multiple keys named "name" (each user has one, and so does each of their friends), we need to add a LAG() function to look at the preceding key so we can ensure we have the correct one.
- A nested query is required since window functions can't be used in the WHERE clause.
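To see the LAG() trick in isolation, here is a minimal sketch that doesn't need parseJSON() at all. The table variable is a hypothetical, heavily simplified stand-in for the function's output (the real output has more columns), holding a few key/value rows in document order.

```sql
-- Hypothetical, simplified stand-in for the rows parseJSON() returns.
DECLARE @Parsed TABLE
(
    element_id  int,
    [NAME]      nvarchar(50),
    StringValue nvarchar(100)
);

INSERT INTO @Parsed (element_id, [NAME], StringValue)
VALUES
    (1, N'eyeColor', N'brown'),
    (2, N'name',     N'Hanson Larson'),  -- the user's own name
    (3, N'id',       N'0'),
    (4, N'name',     N'Blake Dodson'),   -- a friend's name, always preceded by "id"
    (5, N'id',       N'1'),
    (6, N'name',     N'Marta Bullock');  -- another friend's name

SELECT [NAME], StringValue
FROM
    (
        SELECT
            [NAME],
            StringValue,
            -- Key of the previous row in document order; empty string for the first row.
            LAG([NAME], 1, N'') OVER (ORDER BY element_id) AS PreviousKey
        FROM @Parsed
    ) d
WHERE
    PreviousKey <> 'id'   -- drops the "name" rows that belong to friends
    AND [NAME] = 'name';
-- Returns a single row: name / Hanson Larson
```

The filter only works because every friend object lists "id" immediately before "name"; if the key order in the JSON ever changed, this approach would quietly return the wrong rows.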

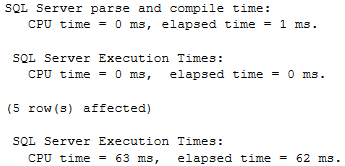

Overall this code works and it gets the job done. However, we need to ensure our server has the parseJSON() function added, and filtering our JSON data to pull out the properties we are interested in takes a little bit of work. Additionally, the parseJSON() function does some heavy processing, which hurts our overall performance.

The Shiny New Toys in SQL Server 2016: JSON Functions

SQL Server 2016 includes many new JSON parsing functions that are available for us to use. Writing a query to return the same result set as above is now as simple as this:

SELECT
    JSON_VALUE(value, '$.name')
FROM
    OPENJSON(@WebsiteJson,'$.Users')

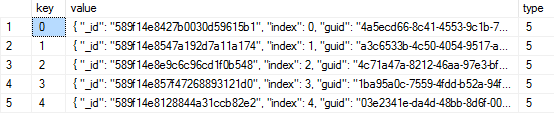

The OPENJSON() function takes a JSON path expression (similar in spirit to XPath for XML) that lets us split the JSON Users array into five separate rows of data, one per user:

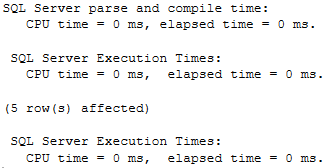
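For a closer look at what OPENJSON() hands back, here is a small sketch using a cut-down, made-up payload rather than the full sample above. When called without a WITH clause, OPENJSON() returns three columns named key, value, and type.

```sql
-- Cut-down illustrative payload; @Json is a made-up variable, not the full sample data.
-- Note: OPENJSON requires the database compatibility level to be 130 or higher.
DECLARE @Json nvarchar(max) =
    N'{ "Users": [ { "name": "Hanson Larson", "age": 26 }, { "name": "Wood Douglas", "age": 28 } ] }';

SELECT
    [key],    -- array index of the element ("0", "1", ...)
    [value],  -- the element itself as JSON text
    [type]    -- numeric code for the JSON type; 5 means "object"
FROM
    OPENJSON(@Json, '$.Users');
```

Each row's value column holds one complete user object as text, which is why it can be passed straight into JSON_VALUE().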

Then, we use the JSON_VALUE() function with another JSON path expression to extract just the "name" property. Overall, this code is much simpler to write, behaves more consistently (a space character before a ":" is handled correctly), and runs much faster.
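When more than one property is needed, OPENJSON() also accepts a WITH clause that maps paths to typed columns. The sketch below uses a small made-up payload (with a space before some colons on purpose) and hypothetical column aliases to pull user names and friend names apart without any LAG() workaround.

```sql
-- Made-up payload for illustration; note the deliberate space before some ":" characters.
DECLARE @Json nvarchar(max) = N'{
  "Users": [
    { "name" : "Hanson Larson", "age": 26,
      "friends": [ { "id": 0, "name": "Blake Dodson" }, { "id": 1, "name": "Marta Bullock" } ] },
    { "name" : "Wood Douglas", "age": 28,
      "friends": [ { "id": 0, "name": "Vivian Gates" } ] }
  ]
}';

SELECT
    u.UserName,
    u.Age,
    f.FriendName
FROM
    OPENJSON(@Json, '$.Users')
    WITH (
        UserName nvarchar(100) '$.name',
        Age      int           '$.age',
        Friends  nvarchar(max) '$.friends' AS JSON  -- keep the nested array as JSON text
    ) AS u
CROSS APPLY
    OPENJSON(u.Friends)
    WITH (
        FriendName nvarchar(100) '$.name'
    ) AS f;
-- One row per user/friend pair (three rows for this payload).
```

Because each path is resolved relative to its own object, a user's "name" and a friend's "name" can never be confused, which is what the pre-2016 query needed LAG() to guard against.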

I love the syntax of the new SQL JSON parsing functions: it's easy to remember, easy to use in real-life scenarios, and very fast. This is one new feature of SQL Server 2016 that definitely makes my life easier and makes any other way of parsing out JSON data obsolete.

If you are interested in learning more about JSON in SQL Server, I've been blogging for the past few weeks about how to use all of SQL's new JSON functions.

There's also one bug that I found with how SQL handles JSON deletes that I submitted to Microsoft Connect if you want to upvote and see JSON continue to evolve on SQL Server.