Watch this week's video on YouTube

This week I want to share something that surprised me about using SQL Server's SET IDENTITY_INSERT statement.

I started with two tables with identity columns defined:

CREATE TABLE dbo.[User]

(

Id int identity,

UserName varchar(40)

);

CREATE TABLE dbo.StupidQuestions

(

Id bigint identity,

UserId int,

Question varchar(400)

);

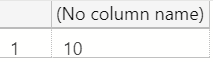

INSERT INTO dbo.[User] (UserName) VALUES ('Jim');

INSERT INTO dbo.[User] (UserName) VALUES ('Jane');

INSERT INTO dbo.[User] (UserName) VALUES ('Jin');

INSERT INTO dbo.[User] (UserName) VALUES ('Joyce');

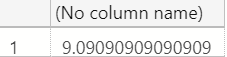

INSERT INTO dbo.StupidQuestions (UserId,Question) VALUES (1,'Is smooth peanut butter better than chunky?');

INSERT INTO dbo.StupidQuestions (UserId,Question) VALUES (1,'Do I really need to backup my production databases?');

INSERT INTO dbo.StupidQuestions (UserId,Question) VALUES (2,'How to grant developers SA access?');

INSERT INTO dbo.StupidQuestions (UserId,Question) VALUES (3,'I''m getting an error about not being able to add any more indexes to my table - how do I increase the limit?');

INSERT INTO dbo.StupidQuestions (UserId,Question) VALUES (4,'How can I include more than 32 columns in my index key?');

GO

I wanted to copy the data from these two tables into two other tables:

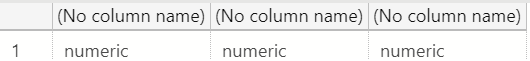

CREATE TABLE dbo.User_DEV

(

Id int identity,

UserName varchar(40)

);

CREATE TABLE dbo.StupidQuestions_DEV

(

Id bigint identity,

UserId int,

Question varchar(400)

);

This would allow me to safely test some changes on these _DEV table copies without breaking my original tables.

The next step was to write a couple of INSERT INTO SELECT statements:

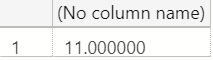

INSERT INTO dbo.User_DEV

SELECT Id,UserName FROM dbo.[User]

INSERT INTO dbo.StupidQuestions_DEV

SELECT Id,UserId,Question FROM dbo.StupidQuestions

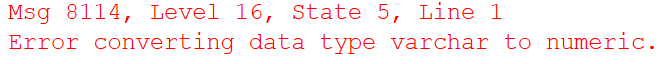

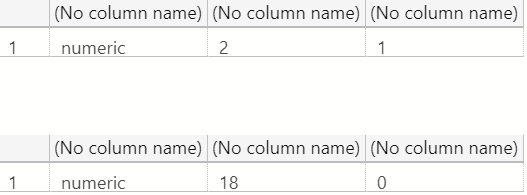

And of course as soon as I executed them SQL Server threw an error stating that I can't INSERT data into tables containing identity columns without first enabling identity inserts:

An explicit value for the identity column in table 'dbo.User_DEV' can only be specified when a column list is used and IDENTITY_INSERT is ON.

Ok, simple enough to fix: we just need to do what the error message says and SET IDENTITY_INSERT ON for both tables:

SET IDENTITY_INSERT dbo.User_DEV ON;

SET IDENTITY_INSERT dbo.StupidQuestions_DEV ON;

And... it still didn't work:

IDENTITY_INSERT is already ON for table 'IdentityTest.dbo.User_DEV'. Cannot perform SET operation for table 'dbo.StupidQuestions_DEV'.

One at a time

Although I've probably moved data around like this hundreds (thousands?) of times before, I've never encountered this particular error.

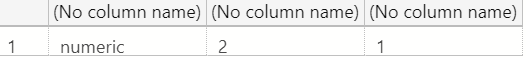

Apparently SQL Server only allows one table to have the IDENTITY_INSERT property enabled at a time within each session. The solution therefore is straightforward: enable identity inserts and copy each table's data one at a time:

SET IDENTITY_INSERT dbo.User_DEV ON;

INSERT INTO dbo.User_DEV (Id,UserName)

SELECT Id,UserName FROM dbo.[User];

SET IDENTITY_INSERT dbo.User_DEV OFF;

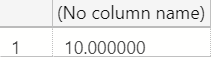

SET IDENTITY_INSERT dbo.StupidQuestions_DEV ON;

INSERT INTO dbo.StupidQuestions_DEV (Id,UserId,Question)

SELECT Id,UserId,Question FROM dbo.StupidQuestions

SET IDENTITY_INSERT dbo.StupidQuestions_DEV OFF;

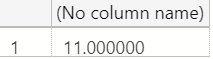

20/20

In hindsight, I think I've never encountered this error before because I normally use the the Export Data Wizard in SSMS or a dedicated SSIS package to move data around. Either of those options are typically easier than writing T-SQL to move data across servers or for repeatability for when I need to regularly refresh tables with test data.

However, when using either of those options I've never paid attention to the implementation details, causing me to assume I knew how SQL Server handles identity inserts.