Over the past several weeks I've been exploring ways to rewrite queries to improve execution performance.

I learned a lot of these techniques over time through trial and error, attending presentations, reading blog posts, and speaking to other DBAs and developers, but I never knew of a good resource that summarized them in one place.

This post is a quick round-up of everything I've covered so far, plus 8 additional techniques that I use occasionally but that don't necessarily need a full, detailed post of their own.

Why Rewrite Queries?

I often find myself working in environments where modifying indexes or changing server settings is out of the question when performance tuning. I usually run into these scenarios when dealing with:

- Vendor databases

- "Fragile" systems

- Not enough disk space

- Limited tooling/ad hoc analysis

- Features limited by security software

While solving the root cause of a performance problem is always preferable, sometimes the only way I'm able to fix problems in these environments is by rewriting the queries.

I decided to write this summary post because it is a resource I would have loved to have when starting out. Sometimes it can be easy to get "writer's block" when trying to think of ways to rewrite a SQL query, so hopefully this list of techniques can provide ideas and get your creative juices flowing.

So, without further ado, here is a list of 12 techniques in no particular order that you can use to rewrite your queries to change their performance.

12 Ways to Refactor a Query to Change Performance

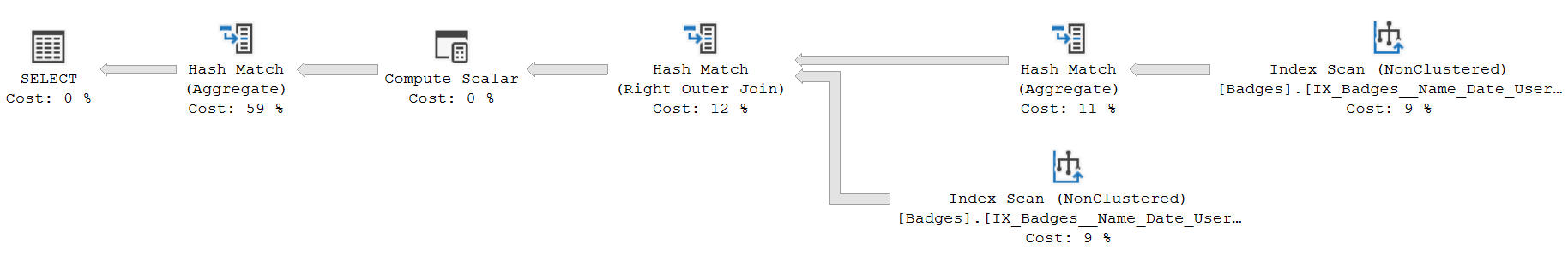

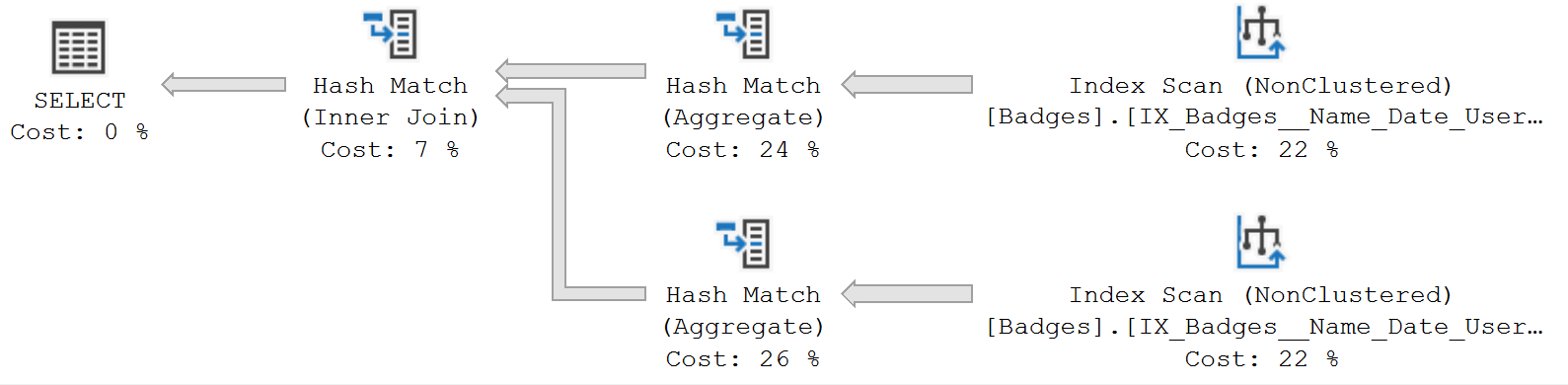

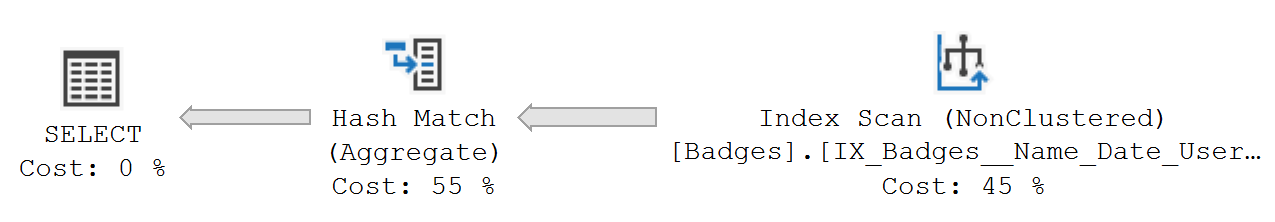

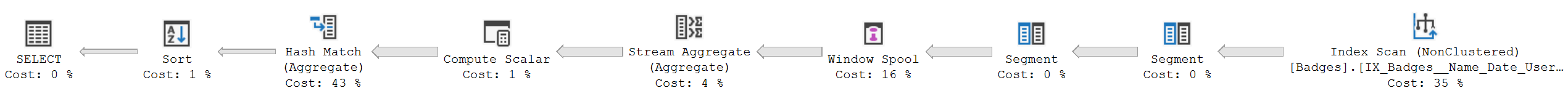

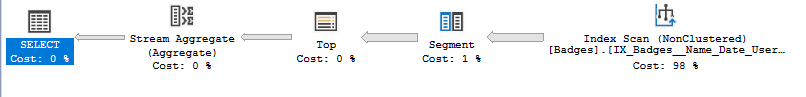

1. Window functions vs GROUP BY

Sometimes window functions rely a little too much on tempdb and blocking operators to accomplish what you ask of them. While using them is always my first choice because of their simple syntax, if they perform poorly you can usually rewrite them as an old-fashioned GROUP BY to achieve better performance.
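As a rough illustration of this rewrite, here is a minimal sketch that uses SQLite through Python's built-in sqlite3 module as a stand-in for SQL Server (window functions need SQLite 3.25 or newer). The orders table and its columns are hypothetical. It only shows that the two forms return the same rows; it says nothing about which one is faster on your server.

```python
import sqlite3

# In-memory SQLite database standing in for SQL Server (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount INTEGER);
    INSERT INTO orders VALUES (1, 10, 50), (2, 10, 70), (3, 20, 30), (4, 20, 20), (5, 30, 90);
""")

# Version A: a window function attaches each customer's total to every order row.
window_rows = conn.execute("""
    SELECT order_id, amount,
           SUM(amount) OVER (PARTITION BY customer_id) AS customer_total
    FROM orders
    ORDER BY order_id
""").fetchall()

# Version B: aggregate once per customer with GROUP BY, then join the totals back.
group_by_rows = conn.execute("""
    SELECT o.order_id, o.amount, t.customer_total
    FROM orders AS o
    JOIN (SELECT customer_id, SUM(amount) AS customer_total
          FROM orders
          GROUP BY customer_id) AS t
      ON t.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()

# Both versions should describe the same result set.
print(window_rows == group_by_rows)
```

The two SELECT statements should carry over to T-SQL largely unchanged; compare their actual execution plans before committing to either form.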

2. Correlated subqueries vs derived tables

Many people like using correlated subqueries because the logic is often easy to understand. However, switching to derived tables often produces better performance due to their set-based nature.
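A small sketch of what that switch can look like, again using SQLite via Python's sqlite3 module as a stand-in for SQL Server. The customers and orders tables are made up for the example. Note the LEFT JOIN in the derived-table version, which keeps customers that have no orders, just as the correlated subquery does.

```python
import sqlite3

# In-memory SQLite database standing in for SQL Server (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount INTEGER);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
    INSERT INTO orders VALUES (1, 1, 50), (2, 1, 70), (3, 2, 30);
""")

# Version A: correlated subquery, logically evaluated once per customer row.
correlated_rows = conn.execute("""
    SELECT c.customer_id, c.name,
           (SELECT MAX(o.amount)
            FROM orders AS o
            WHERE o.customer_id = c.customer_id) AS largest_order
    FROM customers AS c
    ORDER BY c.customer_id
""").fetchall()

# Version B: derived table computes every customer's maximum in one pass,
# then a LEFT JOIN attaches it (customers without orders get NULL).
derived_rows = conn.execute("""
    SELECT c.customer_id, c.name, m.largest_order
    FROM customers AS c
    LEFT JOIN (SELECT customer_id, MAX(amount) AS largest_order
               FROM orders
               GROUP BY customer_id) AS m
      ON m.customer_id = c.customer_id
    ORDER BY c.customer_id
""").fetchall()

print(correlated_rows == derived_rows)
```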

3. IN vs UNION ALL

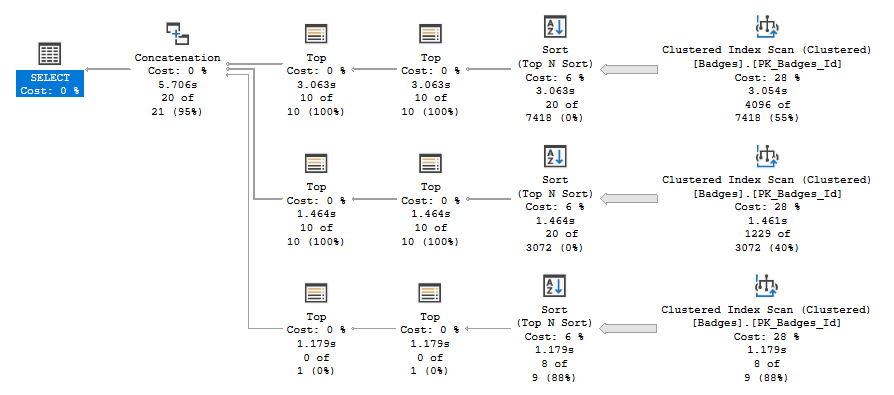

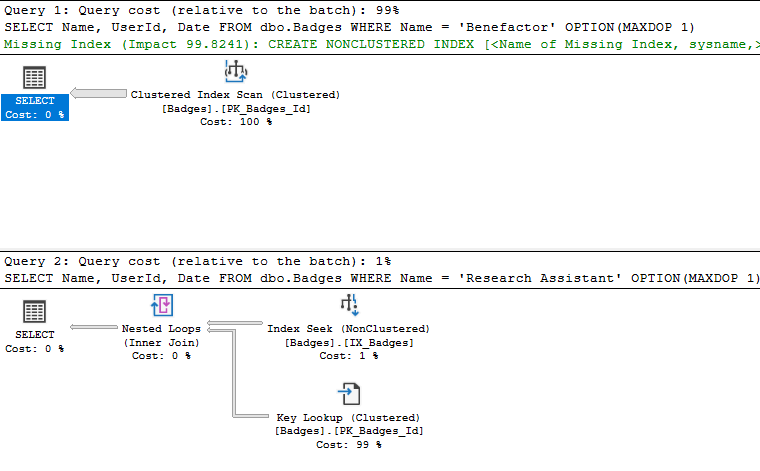

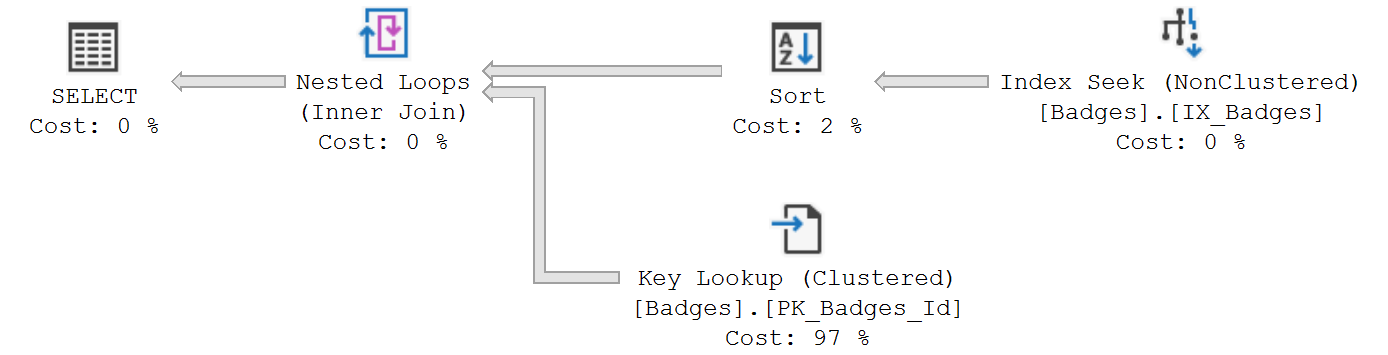

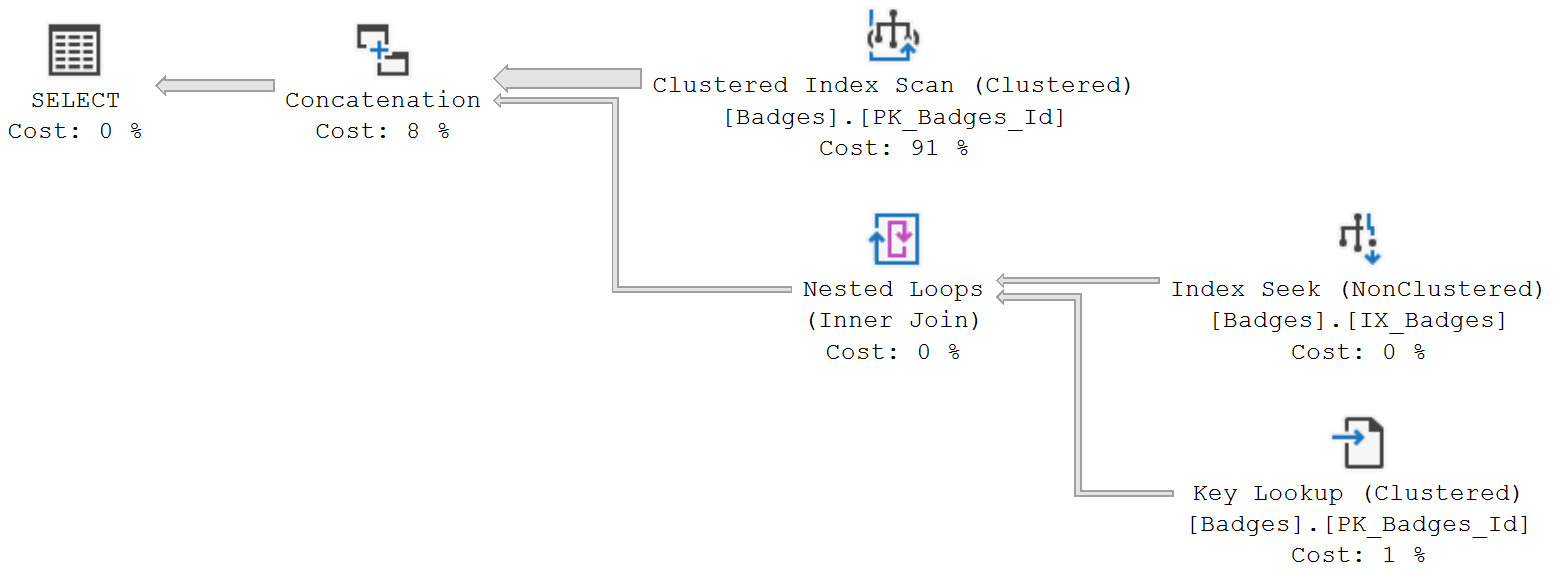

When filtering rows on multiple values in tables with skewed distributions and non-covering indexes, splitting your logic into multiple statements combined with UNION ALL can sometimes generate more efficient execution plans than a single IN or a series of ORs.
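The shape of this rewrite can be sketched as follows, with SQLite via Python's sqlite3 module standing in for SQL Server and a hypothetical orders table. The sketch only demonstrates that the results match. The rewrite is safe here because the two filter values are mutually exclusive, so UNION ALL cannot return the same row twice.

```python
import sqlite3

# In-memory SQLite database standing in for SQL Server (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, status TEXT);
    CREATE INDEX ix_orders_status ON orders (status);
    INSERT INTO orders VALUES
        (1, 'shipped'), (2, 'pending'), (3, 'returned'), (4, 'shipped'), (5, 'pending');
""")

# Version A: a single statement filtering on several values with IN.
in_rows = conn.execute("""
    SELECT order_id
    FROM orders
    WHERE status IN ('shipped', 'returned')
    ORDER BY order_id
""").fetchall()

# Version B: one statement per value, combined with UNION ALL.
# Each branch can get its own plan choice for its own value.
union_all_rows = conn.execute("""
    SELECT order_id FROM orders WHERE status = 'shipped'
    UNION ALL
    SELECT order_id FROM orders WHERE status = 'returned'
    ORDER BY order_id
""").fetchall()

print(in_rows == union_all_rows)
```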

4. Temporary Staging Tables

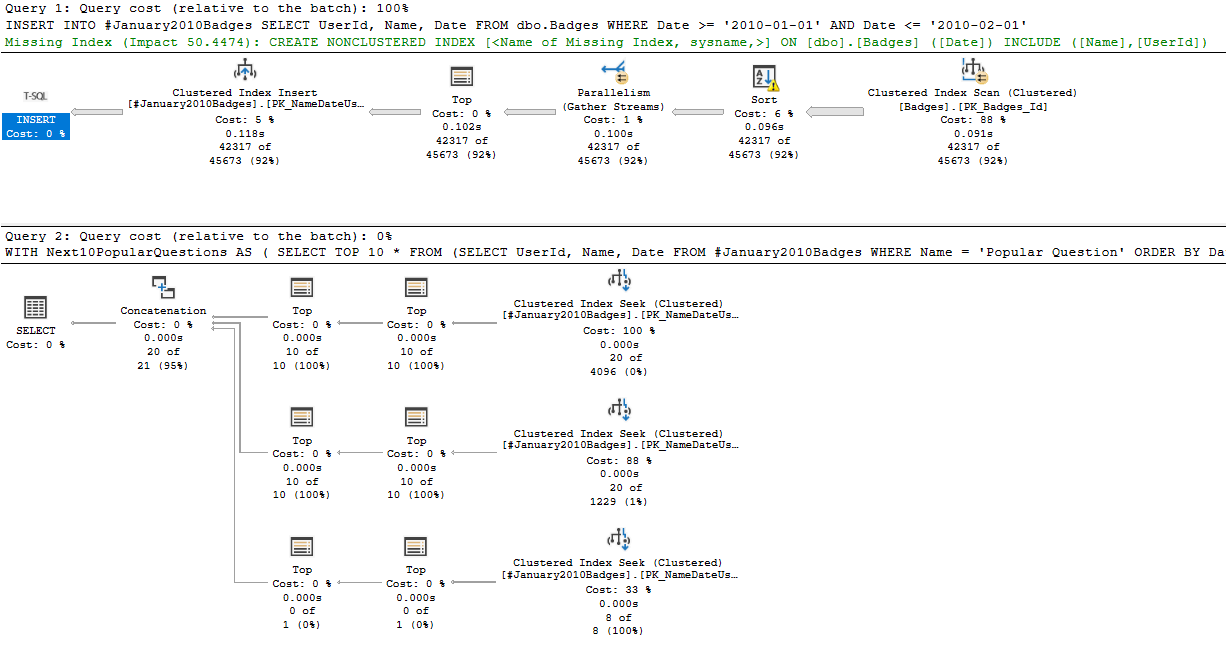

Sometimes the query optimizer struggles to generate an efficient execution plan for complex queries. Breaking a complex query into multiple steps that use temporary staging tables can provide SQL Server with more information about your data. Staging tables also push you to write simpler queries, which can help the optimizer generate more efficient execution plans and reuse result sets more easily.
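Here is a minimal sketch of the staging idea, using SQLite via Python's sqlite3 module as a stand-in for SQL Server. The tables, the big_spenders staging table, and the 100 threshold are all invented for the example. In T-SQL the staging step would typically be a SELECT ... INTO a #temp table instead of CREATE TEMP TABLE ... AS.

```python
import sqlite3

# In-memory SQLite database standing in for SQL Server (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount INTEGER);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
    INSERT INTO orders VALUES (1, 1, 50), (2, 1, 70), (3, 2, 30), (4, 3, 90), (5, 3, 40);
""")

# Original form: everything in one statement.
single_query_rows = conn.execute("""
    SELECT c.name, t.total
    FROM customers AS c
    JOIN (SELECT customer_id, SUM(amount) AS total
          FROM orders
          GROUP BY customer_id
          HAVING SUM(amount) >= 100) AS t
      ON t.customer_id = c.customer_id
    ORDER BY c.name
""").fetchall()

# Step 1: materialize the intermediate result in a temporary staging table.
conn.execute("""
    CREATE TEMP TABLE big_spenders AS
    SELECT customer_id, SUM(amount) AS total
    FROM orders
    GROUP BY customer_id
    HAVING SUM(amount) >= 100
""")

# Step 2: a much simpler query against the staged rows.
staged_rows = conn.execute("""
    SELECT c.name, b.total
    FROM big_spenders AS b
    JOIN customers AS c ON c.customer_id = b.customer_id
    ORDER BY c.name
""").fetchall()

print(single_query_rows == staged_rows)
```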

5. Forcing Table Join Orders

Sometimes outdated statistics and other insufficient information can cause the SQL Server query optimizer to join tables in a less than ideal sequence. Adam Machanic has a fantastic presentation on forcing table join order with blocking operators without having to resort to join hints.

6. DISTINCT with few unique values

Using the DISTINCT operator is not always the fastest way to return the unique values in a dataset. In particular, Paul White uses recursive CTEs to return distinct values on large datasets with relatively few unique values. This is a great example of solving a problem using a very creative solution.
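To give a feel for the idea, here is a sketch of a recursive CTE that hops from one distinct value to the next through an index instead of reading every row. It is written for SQLite via Python's sqlite3 module, not SQL Server, and it is not Paul White's actual code: T-SQL restricts what the recursive part of a CTE may contain, so his version is written differently. The orders table and status values are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for SQL Server (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, status TEXT NOT NULL)")

# Many rows, very few unique values.
statuses = ["cancelled", "pending", "shipped"]
conn.executemany(
    "INSERT INTO orders (status) VALUES (?)",
    [(statuses[i % 3],) for i in range(3000)],
)
conn.execute("CREATE INDEX ix_orders_status ON orders (status)")

# Baseline: plain DISTINCT.
distinct_rows = conn.execute(
    "SELECT DISTINCT status FROM orders ORDER BY status"
).fetchall()

# Rewrite: start at the smallest value, then repeatedly look up the next
# larger value. The final lookup finds nothing and yields NULL, which stops
# the recursion and is filtered out of the result.
recursive_rows = conn.execute("""
    WITH RECURSIVE d(status) AS (
        SELECT MIN(status) FROM orders
        UNION ALL
        SELECT (SELECT MIN(o.status) FROM orders AS o WHERE o.status > d.status)
        FROM d
        WHERE d.status IS NOT NULL
    )
    SELECT status FROM d WHERE status IS NOT NULL ORDER BY status
""").fetchall()

print(distinct_rows == recursive_rows)
```

The recursive version does one index lookup per distinct value, which is why it tends to pay off only when there are few unique values relative to the row count.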

7. Eliminate UDFs

UDFs often cause poor query performance because they force serial plans and cause inaccurate estimates. One way to possibly improve the performance of queries that call UDFs is to inline the UDF logic directly into the main query. With SQL Server 2019 this will happen automatically in a lot of cases, but as Brent Ozar points out, you might occasionally have to manually inline a UDF's functionality to get the best performance.
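Manual inlining can be sketched like this. SQLite has no T-SQL style UDFs, so as an assumption for this example a Python function registered through sqlite3's create_function plays the role of the scalar UDF. The line_total function and the order_lines table are hypothetical. The point is only the shape of the rewrite: the function call in the SELECT list is replaced by the expression it wraps.

```python
import sqlite3

# In-memory SQLite database standing in for SQL Server (assumption for this sketch).
conn = sqlite3.connect(":memory:")


def line_total(quantity, unit_price_cents):
    """Stand-in for a scalar UDF: price of one order line in cents."""
    return quantity * unit_price_cents


# Register the Python function so SQL statements can call it by name.
conn.create_function("line_total", 2, line_total)

conn.executescript("""
    CREATE TABLE order_lines (order_id INTEGER PRIMARY KEY, quantity INTEGER, unit_price_cents INTEGER);
    INSERT INTO order_lines VALUES (1, 2, 1500), (2, 1, 999), (3, 10, 250);
""")

# Version A: the query calls the UDF once per row.
udf_rows = conn.execute("""
    SELECT order_id, line_total(quantity, unit_price_cents) AS total_cents
    FROM order_lines
    ORDER BY order_id
""").fetchall()

# Version B: the UDF's logic is inlined directly into the query.
inline_rows = conn.execute("""
    SELECT order_id, quantity * unit_price_cents AS total_cents
    FROM order_lines
    ORDER BY order_id
""").fetchall()

print(udf_rows == inline_rows)
```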

8. Create UDFs

Sometimes a poorly configured server will parallelize queries too frequently, resulting in worse performance than the equivalent serial plan. In those cases, putting the troublesome query logic into a scalar or multi-statement table-valued function might improve performance, since these functions force that part of the plan to run serially. Definitely not a best practice, but it is one way to force serial plans when you can't change the cost threshold for parallelism.

9. Data Compression

Not only does data compression save space, but on certain workloads it can actually improve performance. Since compressed data is stored in fewer pages, disk reads are faster. Maybe more importantly, compression allows more data to fit in SQL Server's buffer pool, increasing the potential for SQL Server to reuse data already in memory.

10. Indexed Views

When you can't add new indexes to existing tables, you might be able to get away with creating a view on those tables and indexing the view instead. This works great for vendor databases where you can't touch any of the existing objects.

11. Switch cardinality estimators

The newer cardinality estimator introduced in SQL Server 2014 improves the performance of many queries. However, in some specific cases it can make queries perform more slowly. In those cases, a simple query hint is all you need to force SQL Server to change back to the legacy cardinality estimator (for example, OPTION (USE HINT ('FORCE_LEGACY_CARDINALITY_ESTIMATION')) on SQL Server 2016 SP1 and later).

12. Copy the data

If you can't get better performance by rewriting a query, you can always copy the data you need to a new table in a location where you CAN create indexes and do whatever other helpful transformations you need to do ahead of time.
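A minimal sketch of the copy approach, using SQLite via Python's sqlite3 module as a stand-in for SQL Server. The vendor_orders table, the attached "reporting" database, and the index name are all hypothetical. The second in-memory database plays the role of the location where you are allowed to create objects.

```python
import sqlite3

# The main database plays the locked-down vendor database (assumption for this sketch).
conn = sqlite3.connect(":memory:")

# A second database plays the location where you are free to create objects.
conn.execute("ATTACH DATABASE ':memory:' AS reporting")

conn.executescript("""
    CREATE TABLE vendor_orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount INTEGER);
    INSERT INTO vendor_orders VALUES (1, 10, 50), (2, 10, 70), (3, 20, 30), (4, 30, 90);

    -- Copy only the columns you need into the writable location.
    CREATE TABLE reporting.orders_copy AS
    SELECT order_id, customer_id, amount
    FROM vendor_orders;

    -- Index the copy however the workload requires.
    CREATE INDEX reporting.ix_orders_copy_customer ON orders_copy (customer_id);
""")

query = "SELECT SUM(amount) FROM {table} WHERE customer_id = 10"

# Same question asked of the original table and of the indexed copy.
original_total = conn.execute(query.format(table="vendor_orders")).fetchone()[0]
copy_total = conn.execute(query.format(table="reporting.orders_copy")).fetchone()[0]

print(original_total == copy_total)
```

Keep in mind that a copy goes stale as soon as the source changes, so this fits best where slightly old data is acceptable or the copy can be refreshed on a schedule.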

...And more

By no means is this list exhaustive. There are so many ways to rewrite queries, and not all of them will work all the time.

The key is to think about what the query optimizer knows about your data and why it's choosing the plan it is. Once you understand what it's doing, you can start getting creative with various query rewrites that address that issue.