Watch this week's video on YouTube

Recursive queries are fun to plan and write. They can be frustrating too depending on the complexity of the problem you are trying to solve.

This post shows one solution for finding all records that are related, either directly or via intermediate records, using recursive queries in SQL Server.

The Data

Here's the data we'll be working with:

DROP TABLE IF EXISTS #relationships;

CREATE TABLE #relationships (

Id int,

FK1 varchar(10),

FK2 varchar(10)

);

INSERT INTO #relationships

VALUES

/* This group of records are all related, directly or through intermediate nodes */

(1,'A','B'),

(2,'A','E'),

(3,'A','G'),

(4,'A','F'),

(5,'B','F'),

(6,'B','E'),

(7,'E','F'),

(8,'G','H'),

(9,'B','A'), /* This is an identical relationship as Id=1 */

(10,'B','F'), /* This is a straight up duplicate record to Id=5*/

/* These records are related simply where FK2 of one row is FK1 of the subsequent row */

(11,'I','J'),

(12,'J','K'),

(13,'K','L'),

/* Some more related records */

(14,'M','N'),

(15,'O','M'),

(16,'P','O'),

(17,'M','N'), /* Duplicate of Id=14 */

(18,'M','O'), /* Flipped duplicate of 15 */

(19,'M','P'),

/* These records are interesting because the FK2 values never appear in the FK1 column */

(20,'Q','R'),

(21,'S','R'),

(22,'T','R');

Each row has values for Id,FK1,and FK2 columns. Id is a unique primary key that I included to make referencing individual rows easier. FK1 and FK2 are foreign keys that reference full records in another table. The point is that this table shows the relationships between records.

I used blank lines to visually indicate a group of records in the data above. The end result I want to achieve is to have each group of related records to share the same group_id. I also added some comments to point out some interesting scenarios in the data.

I programmed in some of the data edge cases I knew I would encounter into the test data; a sort of poor-man's test driven development. Including these known edge cases helps me test my query so I know my final solution will handle them correctly. If you are applying this solution to your own data, make sure to add your own test cases into the data.

Query Transformations for Sorted Hashes

The first recursive query I wrote to solve this problem was ugly. I was creating sorted hashes to ensure that rows where the FK values were swapped were deduplicated (eg. I would want to dedupe where Id=1 and Id=9). This solution involved joining on CASE statements like this all throughout the set of queries: CASE WHEN FK1<=FK2 THEN '|'+FK1+','+FK2+'|' ELSE '|'+FK2+','+FK1+'|' END AS key_hash.

Yuck. The solution worked, but I know my future self would not want to maintain that type of code.

Rather than have the final query do all of the work, I decided to clean up the data first instead.

Initial Clean Up

I decided to transform the data to eliminate all duplicates and make sure my keys were always sorted so the value of FK1 < FK2. This allows for a simpler final query:

--Remove duplicates where keys are switched

DROP TABLE IF EXISTS #deduped;

CREATE TABLE #deduped (

Id int IDENTITY(1,1),

FK1 varchar(10),

FK2 varchar(10),

key_hash varchar(100)

);

INSERT INTO #deduped (FK1,FK2,key_hash)

SELECT

dupes.FK1,

dupes.FK2,

dupes.key_hash

FROM

(

SELECT

hashes.*,

ROW_NUMBER() OVER (PARTITION BY key_hash ORDER BY FK1) AS RN

FROM

(

/* make sure FK1 is always smaller than FK2. This eliminantes a lot of more complicated logic later on */

SELECT

CASE WHEN FK1 <= FK2 THEN FK1 ELSE FK2 END AS FK1,

CASE WHEN FK1 <= FK2 THEN FK2 ELSE FK1 END AS FK2,

CASE WHEN FK1 <= FK2 THEN '|'+FK1 +','+FK2+'|' ELSE '|'+FK2+','+FK1+'|' END AS key_hash

FROM

#relationships

) hashes

) dupes

WHERE

dupes.RN = 1;

This still uses the key_hash CASE statement I mentioned previously, but it only creates it once initially to dedupe the entries regardless of their order.

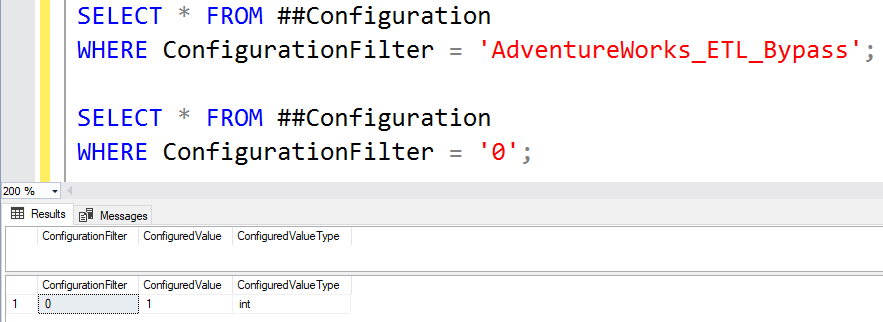

Grouping Related Records

With a deduped dataset, the recursive query for finding groups of related records is (relatively) straight-forward (and a refresher if you need to remember how recursive CTEs work in SQL Server):

WITH c AS (

/* The initial query */

SELECT

1 as level,

DENSE_RANK() OVER(ORDER BY FK1) group_id,

key_hash,

FK1

,FK2

FROM

#deduped

UNION ALL

/* The recursive query that runs once for each of the above query's output and then once on every row from each subsequent result */

SELECT

c.level+1,

c.group_id,

t.key_hash,

t.FK1,

t.FK2

FROM

c

INNER JOIN #deduped t

ON c.FK2 = t.FK1

WHERE

/* don't include combinations already matched */

c.key_hash not like '%'+t.key_hash+'%'

),

/* regular CTE */

output_with_dupes as (

SELECT

level,

key_hash,

group_id,

FK1,

FK2,

ROW_NUMBER() OVER (PARTITION BY key_hash ORDER BY group_id) AS RN

FROM

c

)

-- deduped output

SELECT

group_id,

FK1,

FK2

FROM

output_with_dupes

WHERE RN = 1

ORDER BY

group_id,

FK1

OPTION(MAXRECURSION 0)

Some records are duplicated after the initial join, so a subsequent expression is used to dedupe the final records. The result is a table of rows containing a group_id of records that are related to each other.

Couldn't you have done this with hierarchyid?

But I wanted a solution flexible enough to reuse for other relational databases that don't support hierarchyid as well.

More than just recursive queries

If you've never written a recursive SQL query before, hopefully this post gives you an idea of how to do it.

The more important takeaway is that sometimes it's easier to solve a data problem than a query problem. Like I said previously, my initial recursive query was hideous and unmaintainable because of the duplicates I had in my data. Rather than living with that query, it made sense to clean up the data first and then write the simpler query on the clean data.