A significant portion of Yellowstone National Park sits on top of a supervolcano. Although it's not likely to erupt any time soon, the park is constantly monitored for geological events like earthquakes.

A significant portion of Yellowstone National Park sits on top of a supervolcano. Although it's not likely to erupt any time soon, the park is constantly monitored for geological events like earthquakes.

This week I want to take a look at how you can import this earthquake data, encoded in GeoJSON format, into SQL Server in order to be able to analyze it using SQL Server's spatial functions.

Watch this week's video on YouTube

GeoJSON

The source for the data we'll be using is the 30-day earthquake feed from the USGS. This data is encoded in the GeoJSON format, a specification that makes it easy to share spatial data via JSON. To get an idea of how it looks, here's an extract:

{

"type": "FeatureCollection",

"metadata": {

"generated": 1515603955000,

"url": "https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/1.0_month.geojson",

"title": "USGS Magnitude 1.0+ Earthquakes, Past Month",

...

},

"features": [{

"type": "Feature",

"properties": {

"mag": 1.25,

"place": "7km NW of The Geysers, CA",

"time": 1515603166510,

...

},

"geometry": {

"type": "Point",

"coordinates": [-122.8221664, 38.8175011, 2.02]

},

"id": "nc72952110"

},

...

],

"bbox": [-179.921, -62.519, -3.35, 179.9551, 85.3951, 608.58]

}

The key thing we'll be examining in this data is the "features" array: it contains one feature object for each earthquake that's been recorded in the past 30 days. You can see the "geometry" child object contains lat/long coordinates that we'll be importing into SQL Server.

If you want the same 30-day GeoJSON extract we'll be using in all of the following demo code, you can download it here.

Importing GeoJSON into SQL Server

There's no out of the box way to import GeoJSON data into SQL Server.

However, using SQL Server's JSON functions we can build our own solution pretty easily.

First, let's create a table where we can store all of earthquake data:

CREATE TABLE dbo.EarthquakeData

(

Id int IDENTITY PRIMARY KEY,

EventDate DATETIME2,

Magnitude FLOAT,

Place VARCHAR(300),

Coordinates GEOGRAPHY,

Long varchar(100),

Lat varchar(100)

)

CREATE SPATIAL INDEX IX_Coordinates ON dbo.EarthquakeData (Coordinates)

Then, let's use the OPENJSON() function to parse our JSON and insert it into our table:

DECLARE @GeoJSON nvarchar(max) = N'{...}' -- Put the GeoJSON here

INSERT INTO dbo.EarthquakeData (EventDate,Magnitude,Place,Coordinates,Long,Lat)

SELECT

-- Convert unix epoch time to datetime

-- We also know the source is in UTC, so we specify that using AT TIME ZONE for easier conversions down the road

DATEADD(second,cast(UnixMillisecondsSinceEpoch/1000 as int),'1970-01-01 00:00:00') AT TIME ZONE 'UTC' AS EventDate,

Magnitude,

Place,

-- Build our geography datatype

geography::STPointFromText('POINT ('+Long + ' ' + Lat + ')', 4326) AS Geography,

Long,

Lat

FROM

OPENJSON(@GeoJSON, '$.features')

WITH (

UnixMillisecondsSinceEpoch bigint '$.properties.time',

Magnitude float '$.properties.mag',

Place varchar(300) '$.properties.place',

Long varchar(100) '$.geometry.coordinates[0]',

Lat varchar(100) '$.geometry.coordinates[1]'

)

We use OPENJSON() to parse our JSON hierarchy and then concatenate together the lat and long values into our well known text format to be able to use it with SQL Server's spatial function STPointFromText:

SELECT geography::STPointFromText('POINT ('+Long + ' ' + Lat + ')', 4326) AS Geography

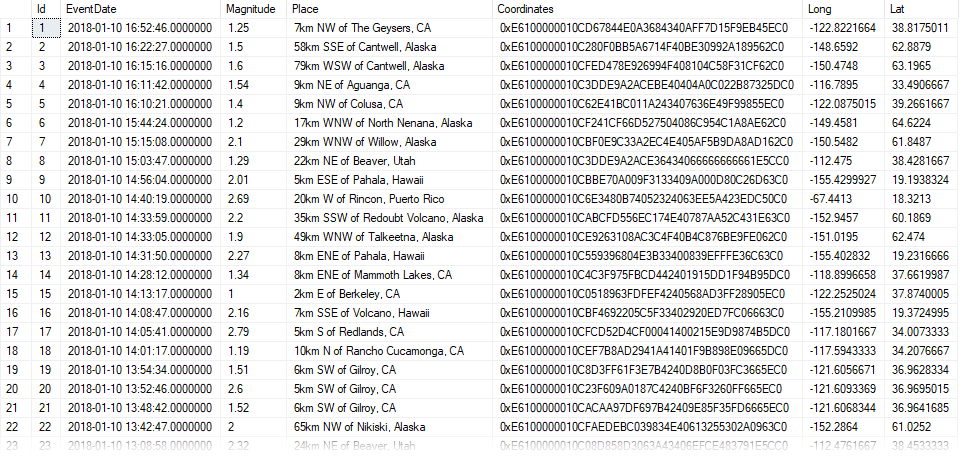

What results is our earthquake data all nicely parsed out into our dbo.EarthquakeData table:

What about Yellowstone?

The above data includes earthquakes from around world. Since we only want to examine earthquakes in Yellowstone, we'll need to filter the data out.

There's a handy Place column in the data that we could probably add a LIKE '%yellowstone%' filter to - but this is a post about spatial data in SQL, we can do better!

The Wyoming State Geological Survey website has Shapefiles for the boundary of Yellowstone National Park. Since we are practicing our GeoJSON import skills, I converted the Shapefiles to GeoJSON using an online converter and the resulting data looks like this:

{

"type": "FeatureCollection",

"bbox": [-111.15354785438899, 44.13238494057162, -109.816111731858, 45.102865336505396],

"features": [{

"type": "Feature",

"geometry": {

"type": "Polygon",

"coordinates": [

[

[-111.0970801722779, 44.487322588834374],

[-111.09707931336956, 44.48929779632266],

[-111.09707877845554, 44.49109904485493],

[-111.09707888353985, 44.49472122457225],.

...

]

]

},

...

}]

}

You can download the full park boundary GeoJSON file here.

Just like before, we'll use SQL Server's OPENJSON() function to parse our GeoJSON data into a well-known text POLYGON.

First we create our table:

DROP TABLE IF EXISTS dbo.ParkBoundaries

CREATE TABLE dbo.ParkBoundaries

(

Id int IDENTITY PRIMARY KEY,

ParkName varchar(100),

ParkBoundary GEOGRAPHY

)

CREATE SPATIAL INDEX IX_Boundary ON dbo.ParkBoundaries (ParkBoundary)

And then populate it, this time using the STPolyFromText spatial function:

INSERT INTO dbo.ParkBoundaries (ParkName, ParkBoundary)

SELECT

'Yellowstone National Park' AS ParkName,

geography::STPolyFromText('POLYGON ((' + STRING_AGG(CAST(Long + ' ' + Lat as varchar(max)), ',') + '))',4326).ReorientObject() AS ParkBoundary

FROM

(

SELECT

Long,

Lat

FROM

OPENJSON(@Yellowstone, '$.features[0].geometry.coordinates[0]')

WITH

(

Long varchar(100) '$[0]',

Lat varchar(100) '$[1]'

)

)d

Filtering our data

Now we have two tables: dbo.EarthquakeData and dbo.ParkBoundaries. What we want to do is select only the Earthquake data points that fall within the boundaries of Yellowstone National Park.

This is easy to do using the STIntersects spatial function, which returns a "1" for any rows where one geography instance (our lat/long earthquake coordinate) intersects another geography instance (our park boundary):

SELECT

d.Id,

d.Magnitude,

d.EventDate,

d.Place,

b.ParkName

FROM

dbo.EarthQuakeData d

CROSS JOIN dbo.ParkBoundaries b

WHERE

Coordinates.STIntersects(ParkBoundary) =1

AND b.ParkName = 'Yellowstone National Park'

ORDER BY

Magnitude DESC

The rest is up to you

So all it takes to import GeoJSON data into SQL Server is knowing how to use SQL Server's JSON functions.

Once geographical data is imported into geography data types, SQL Server's spatial functions offer lots of flexibility for how to efficiently slice and dice the data.

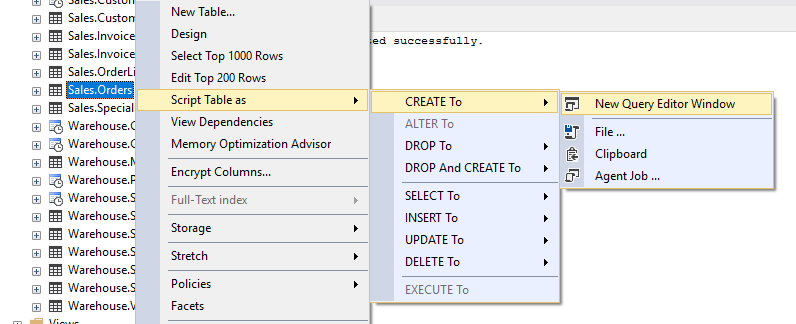

An inefficient way of generating change scripts

An inefficient way of generating change scripts

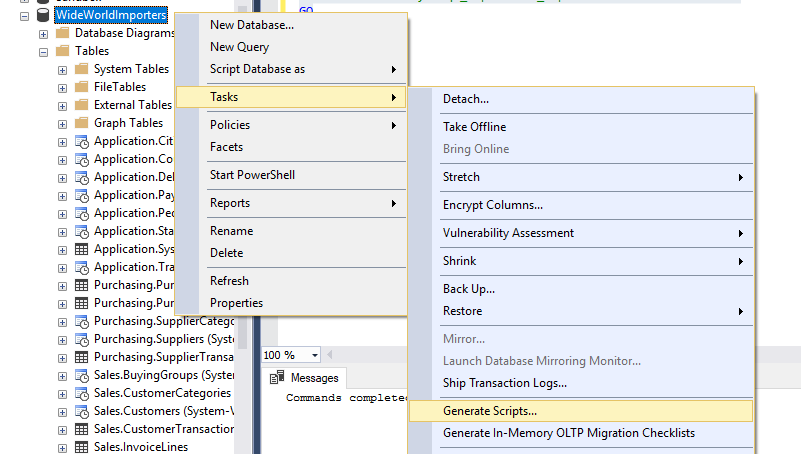

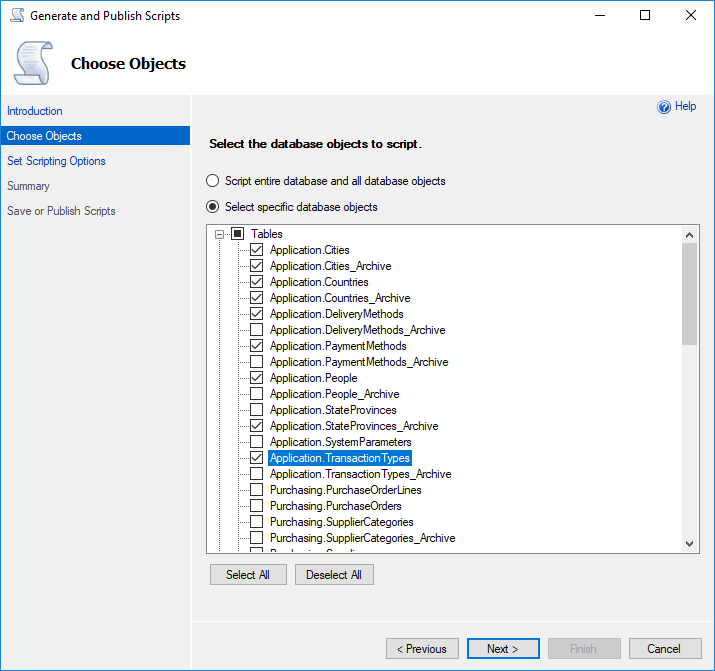

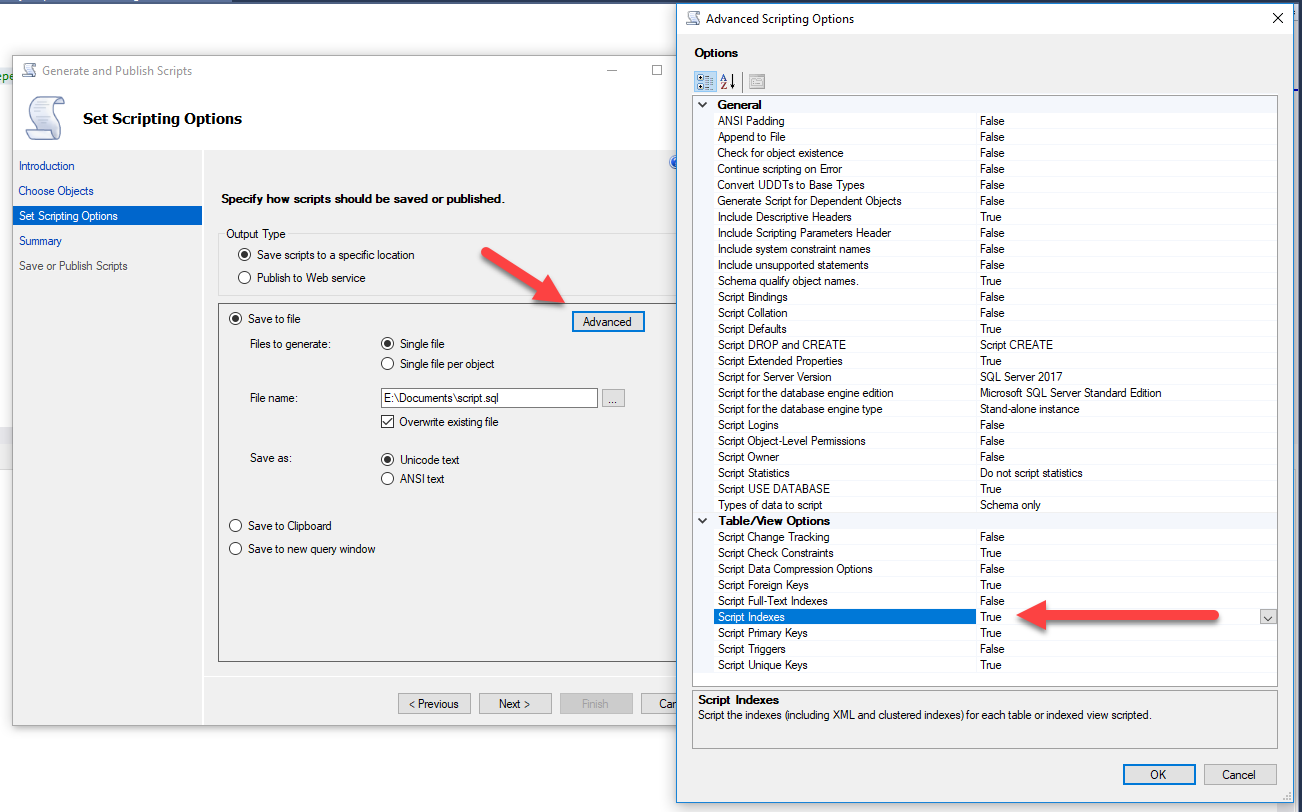

Look ma, multiple objects at once!

Look ma, multiple objects at once! Ooooooo, ahhhh - everything scripted in one fell swoop!

Ooooooo, ahhhh - everything scripted in one fell swoop!