This post is a response to this month's T-SQL Tuesday #105 prompt by Wayne Sheffield. T-SQL Tuesday is a way for the SQL Server community to share ideas about different database and professional topics every month.

This month's topic asks us to share a time we ran into a metaphorical brick wall and how we worked it out.

Watch this week's video on YouTube

The Problem: Trimmed JSON Values

Recently I was using FOR JSON PATH to generate a JSON string from a query to use in a web app:

    SELECT TOP 100 *
    FROM master..spt_values
    WHERE type='P'
    FOR JSON PATH

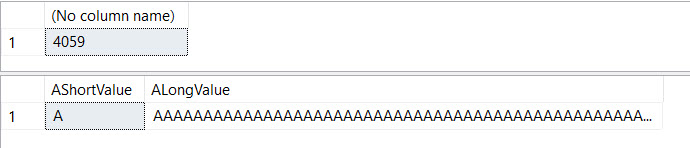

The resulting JSON string is 5,580 characters long.

The goal was to read this query result into my .NET app like so:

var jsonResult = db.Database.SqlQuery<string>("SELECT TOP 100 * FROM ... FOR JSON PATH");

Is this the best way to design every app/database interaction? Probably not, but it was right for this scenario because I didn't want to create models for all of the dynamic data I'd be returning.

Design decisions aside, my "brick wall" issue was that my "jsonResult" value was getting truncated around 2,000 characters instead of displaying the full 5,580. The JSON string looked great in SSMS, but for the life of me I couldn't figure out why the data was getting chopped off when read into .NET.

Time to Debug

What follows are the usual steps I take when debugging a problem that has me stumped. I've turned these into a 1950s-style educational film so you can laugh at my bad jokes while you learn.

1. Rubber Duck Debugging

The first thing I usually do when I hit a wall like this is talk myself through the problem again.

This technique usually works well for me and is equivalent to those times when you ask someone for help but realize the solution while explaining the problem to them.

To save yourself embarrassment (and to let your coworkers keep working uninterrupted), you can substitute an inanimate object, like a rubber duck, for the coworker and try to work out the problem on your own.

Alas, in this case explaining the problem to myself didn't help, so I moved on to the next technique.

2. Simplify the Problem

Breaking a problem down into smaller solvable parts can help sometimes. I changed my query to return less data by switching to SELECT TOP 5 and seeing if .NET was still truncating the data. It wasn't! Mildly successful!

In this case though, I couldn't really build off my simplified example. As soon as my result passed ~2,000 characters, the JSON string was getting chopped off.

In this step I also figured out that if I put my query into a derived table, my .NET code worked beautifully and returned the complete JSON string:

    SELECT * FROM
    (
        SELECT TOP 100 *
        FROM master..spt_values
        WHERE type='P'
        FOR JSON PATH
    ) t(c)

This was an ugly solution though and I didn't like doing it. I especially didn't like it because I didn't know why a derived table fixed the output.

3. Check the Internet

As great as the internet is, I try to limit how much searching I do on it when troubleshooting. Searching for an online solution can quickly devolve into wasting two hours with nothing to show for it.

I performed some cursory searches on Google, Stack Overflow, various forums, blogs, etc., but didn't find anything helpful (fun/sad fact: I searched for solutions again while typing up this post and found plenty of them... who knows what I was doing wrong that day).

4. Ask a Friend

I love working through problems with others because I'm often amazed at how differently others approach a problem. Oftentimes this leads to a solution I would not have thought of on my own. I especially enjoy hearing from people new to the subject area because they often have the most creative solutions due to not yet having become cynical and jaded from previous experience :).

I try to hold off on this option until at least trying all of the above techniques because 1) I hate breaking another person's concentration and 2) I feel like I learn better if I struggle through a problem myself.

And in this case shopping the problem around didn't help - no one I talked to had a great solution.

5. Take a Break

After trying all of the above, I was out of ideas. I took a break from the problem for the rest of the day, resolving to give it another try in the morning.

6. RTFM

And the next morning, I had the idea to check the documentation to see what it said about the return type of FOR JSON PATH.

Embarrassingly, this one should be way higher on the list, and I'd like to say that it usually is, but for one reason or another I didn't bother checking until this late in the game.

The documentation tells me that the JSON string will be broken up across multiple rows and my client app needs to concatenate them all together. What I ended up doing is a simple String.Join():

var jsonResult = String.Join("", db.Database.SqlQuery<string>("SELECT TOP 100 * FROM ... FOR JSON PATH"));

There's no explanation for why SSMS is able to concatenate these values together while other client apps have to do so manually, but at least I found my documented solution.
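For apps that read the result with plain ADO.NET instead of SqlQuery<string>, the same row-by-row concatenation might look something like the sketch below. This is only an illustration, not the code from my app: the ForJsonReader class, the ReadJson method, and the connectionString parameter are hypothetical names, and it assumes the System.Data.SqlClient provider is available to the project.

```csharp
using System.Data.SqlClient;
using System.Text;

public static class ForJsonReader
{
    // Hypothetical helper: runs a FOR JSON query and stitches the
    // result back together. SQL Server may return the JSON text split
    // across several rows of a single column, so every row is appended
    // in the order it is read.
    public static string ReadJson(string connectionString, string forJsonQuery)
    {
        var json = new StringBuilder();

        using (var connection = new SqlConnection(connectionString))
        using (var command = new SqlCommand(forJsonQuery, connection))
        {
            connection.Open();

            using (var reader = command.ExecuteReader())
            {
                while (reader.Read())
                {
                    // Column 0 holds one fragment of the JSON string.
                    json.Append(reader.GetString(0));
                }
            }
        }

        // An empty string here means the query returned no rows.
        return json.ToString();
    }
}
```

Calling ReadJson with the FOR JSON PATH query from the start of this post should return the full string rather than just the first fragment. A StringBuilder does the same job as String.Join() here; it just avoids building an intermediate list of fragments first.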

Conclusion

Even though I found a somewhat-satisfactory solution in the documentation, my fallback was going to be the ugly derived table solution discovered in step 2. It was ugly, but at some point I would have had to call it quits and settle for an ugly workaround rather than spend more time on troubleshooting.

Next time I'll be sure to check the documentation earlier in the process and hopefully that will save me from some of the frustration I encountered in this particular scenario.

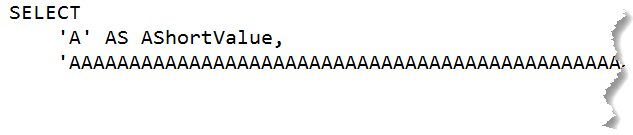

A homage to one of my favorite

A homage to one of my favorite  Need a ultra HD wide display to fit this all on one screen.

Need a ultra HD wide display to fit this all on one screen.

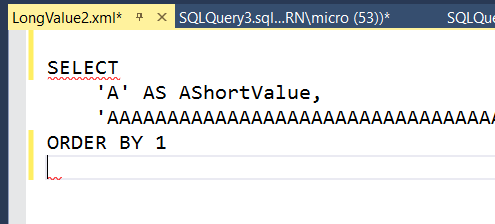

IntelliSense complains but I'm OK with it

IntelliSense complains but I'm OK with it

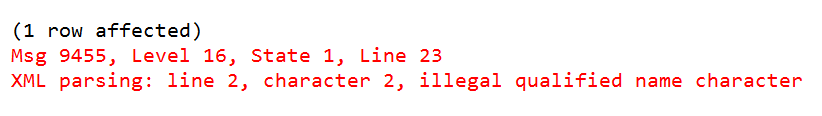

FOR XML PATH is one of the most abused SQL Server functions.

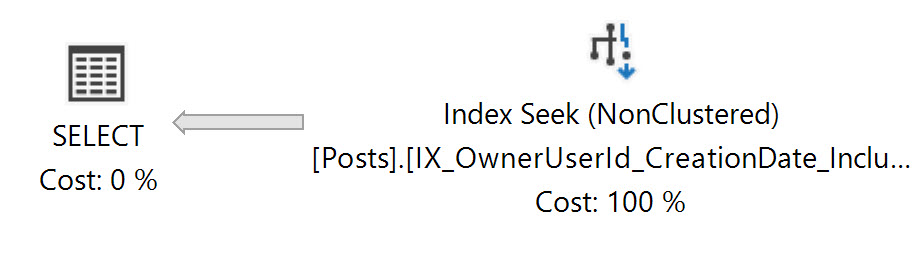

FOR XML PATH is one of the most abused SQL Server functions. I wish all of my execution plans were this simple.

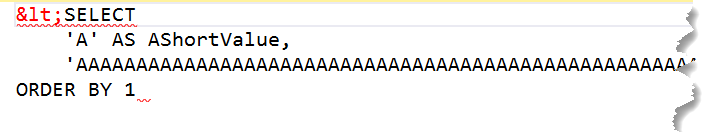

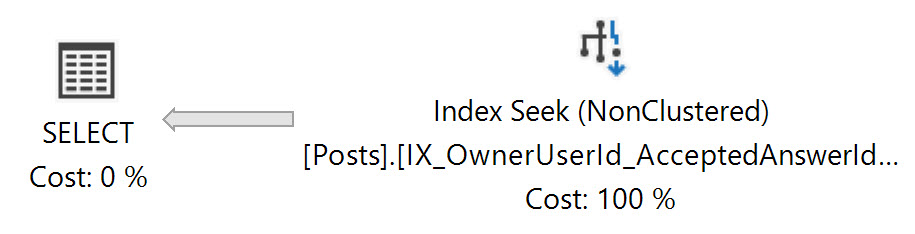

I wish all of my execution plans were this simple. This execution plan looks the same, but you'll notice the smaller, more data dense index is being used.

This execution plan looks the same, but you'll notice the smaller, more data dense index is being used.