Photo by Patrick Tomasso on Unsplash

Photo by Patrick Tomasso on Unsplash

How many times have you known that adding an index would improve query performance but you weren't exactly sure which type of index to add?

This happened to me all the time in my first few years (and maybe an extra year or two after that) of working with SQL Server.

Today I want to help alleviate some of that confusion by comparing two of the most common index types: clustered and nonclustered rowstore indexes.

Watch this week's video on YouTube

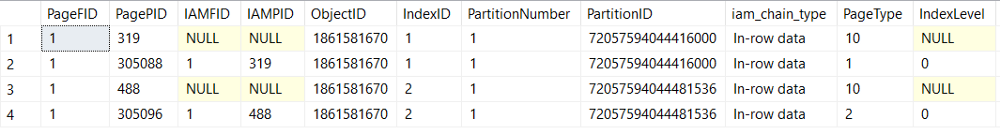

Clustered Indexes

Every table's data has some natural order to it.

If the order is random and not explicitly defined then that table is known as a heap. With the exception of a few special cases, we generally don't want to have heaps. Heaps don't perform well for the majority of queries becauase SQL Server has no meta knowledge about where data is stored within a heap.

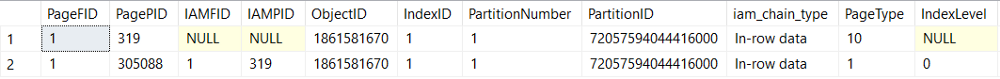

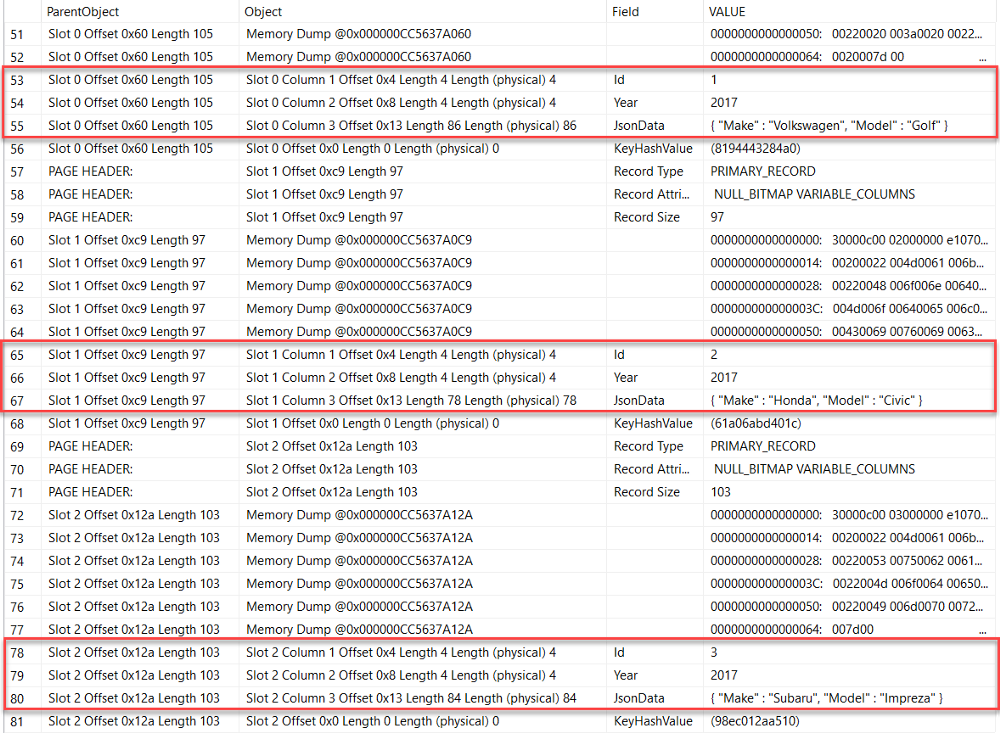

If we don't have a random heap, that means we have defined the order that data should be stored in a table. ** The physical storage order of our data is defined by our clustered index.**

Every table can have exactly one clustered index because the data in a table can only be stored in one order i.e. you can't have that table's data physically stored on the disk in more than one order.

What are the benefits of a clustered index?

The data in a clustered index is stored in order. That means:

- Finding the data you need in your clustered index is a matter of knowing where to look in our alphabetical list of data. Computers are really good at doing this.

- If your data needs to be outputted in the same order that it's stored in - presto! - SQL doesn't need to do any additional sorting.

A lot of people like to put the clustered index on their table's primary key (PK). This is usually fine because a lot of the time our primary key is likely to be our most used field for joins, where statements, etc...

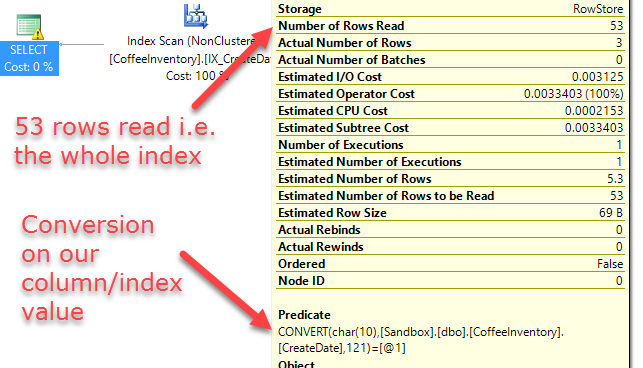

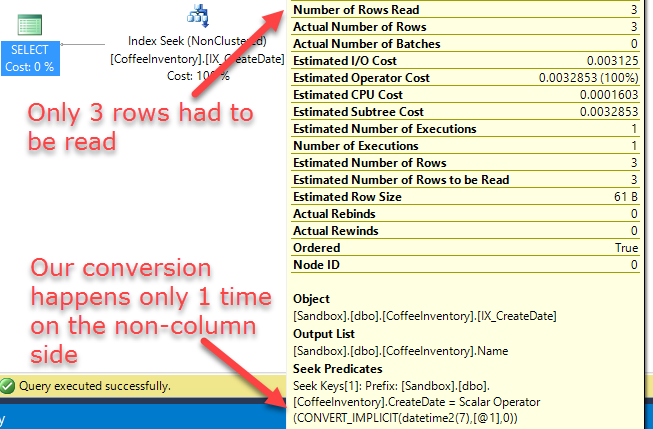

Some people think they can ONLY put their clustered index on their PK. That's not true! Often times it can be much more beneficial to put your clustered index on something that isn't your PK, like a different column that is getting more use than our PK. For example, you might have an identity column set as your PK, but every query against your table is filtering and sorting on a datetime2 column. Why store your table in PK order if you are always going to be filtering and returning data on that datetime2 column? Put that clustered index on the datetime2 column!

The downside to having data stored in this order is that actions like inserts and updates take long because SQL has to put them into the correct sorted order of the table pages - it can't just quickly tack them onto the end.

Another major benefit of a clustered index is that we don't have to "include" any additional data in our index. All of the data for our row exists right beside our indexed columns. This is not necessarily true of other index types (see nonclustered indexes below).

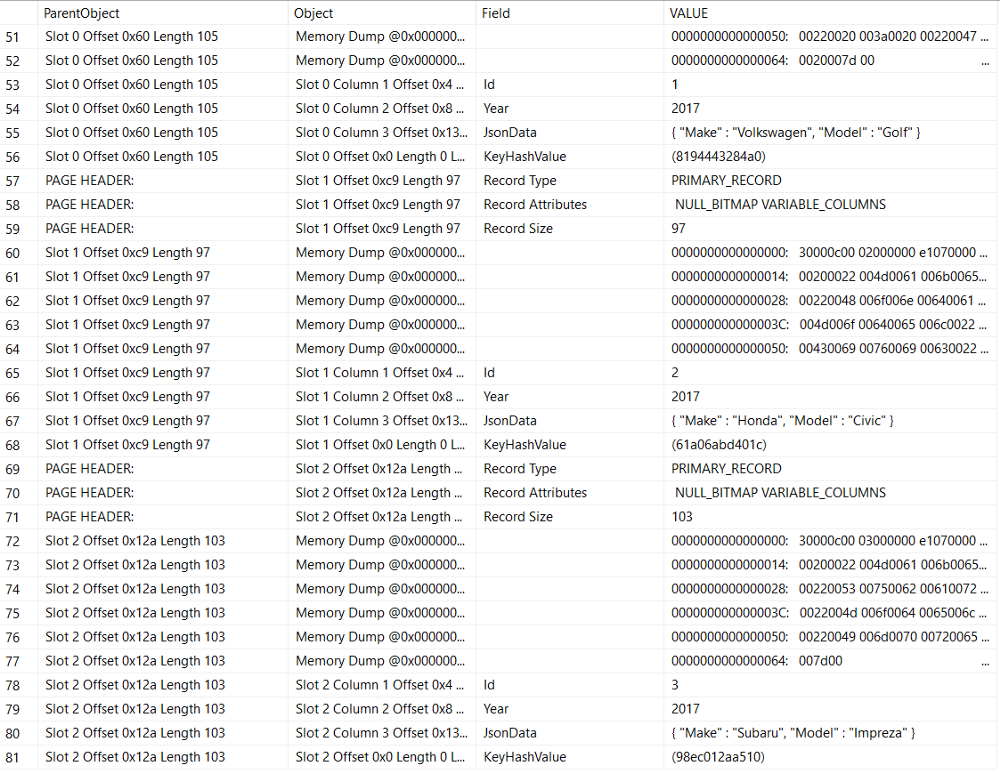

Pretend our clustered index is like the white pages of a phone book (note to future SQL developers in 2030 who have no idea what a phonebook is: it's something that stores the names, addresses, and landline phone numbers in your area. What's a landline? Oh boy...)

The phone book stores every person's name in alphabetical order, making it easy to look up certain individuals. Additionally, if we look someone up, we immediately have their address and phone number right their next to their name - no additional searching necessary!

This is a great feature of clustered indexes - if you ever need to retrieve many or all columns from your table, a clustered index will usually be efficient because once it finds the indexed value you are searching on, it doesn't need to go anywhere else to get the remaining data from that row.

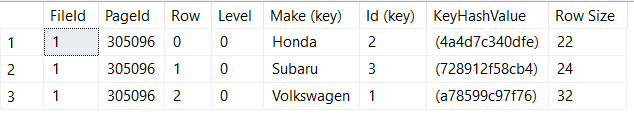

Nonclustered Indexes

If a clustered index is like a phone book, a nonclustered index is like the index in the back of a chemistry text book. The chemistry text book has some natural order to it ("Chapter 1: Matter, Chapter 2: Elements, Chapter 3: Compounds, etc..."). However, this order doesn't help us if we want to look up the location of something specific, like "noble gases".

So what do we do? We go to the index in the back of the textbook which lists all topics in alphabetical order, making it easy to find the listing for "noble gases" and the page number they are discussed on. Once we know the page number for noble gases from our index, we can flip to the correct page and get the data we need.

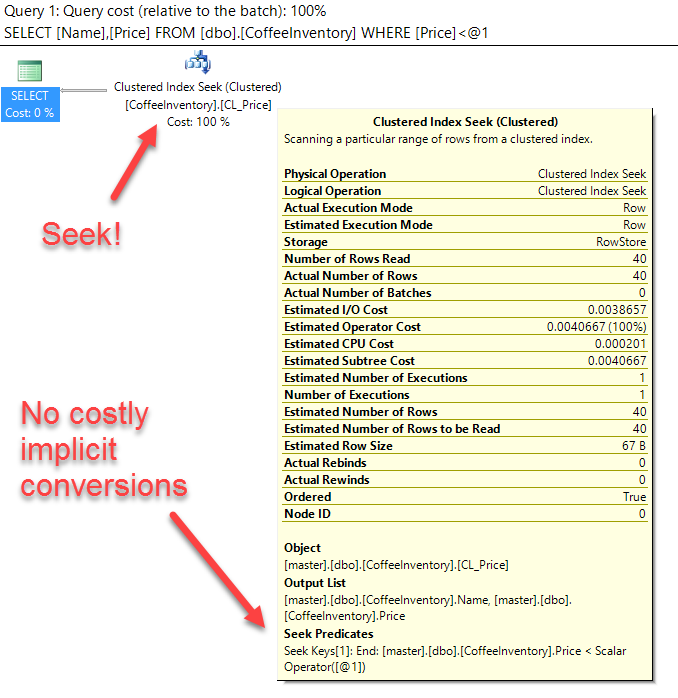

This book index represents our nonclustered index. A nonclustered index contains the ordered data for the columns specified in that index, with pointers (book page numbers) that tell us where to go to find the rest of the data from that row (flip to the right book page). That means unlike a clustered index where all data is always present, using a nonclustered index often is a two step process: find the value of interest in the index and then go look up the rest of that row's data from where it actually exists on disk.

What are the benefits of a nonclustered index?

We can have as many nonclustered indexes on our tables as we want (well, we max out at 999). That's great! Create an index for every column!

Well, no, don't do that. There's overhead in creating nonclustered indexes. Essentially, every time you index some column(s), you are duplicating the unique values in those column(s) so that they can be stored in sorted order in your index. We get speed and efficiency in our data lookups, but with the cost of losing disk space. You need to test and see for each table and set of queries what the optimal number of indexes is. Adding an additional index can absolutely destroy performance, so always test your changes!

Additionally, using a nonclustered index to find an indexed column's value is fast (SQL is just going through the ordered index data to find the value it needs - once again, something computers are really good at doing). However, if you need other columns of data from the row that you just looked up, SQL is going to have to use those index pointers to go find the rest of that row data somewhere else on disk. This can really add up and slow down performance.

If those additional lookups are hurting performance, what you can do is INCLUDE your nonindexed columns in your nonclustered index. What this basically does is in addition to storing the sorted values of your indexed column(s), the index will also store whatever additional values you want to include as part of the index itself. Once again, you'll probably get better performance because SQL won't have to go to somewhere else on disk to find the data it needs, but you lose storage space because you are creating duplicates of that data as part of your index.

Example Usage Scenarios

[Note: I want to clarify that the above definitions and below examples don't cover lots of corner cases (blob values, fragmentation, etc...). I wanted this post to be a simple starting point when people don't know what index type they should try adding first, because this was the paralysis that I had when starting out.]{style="color: #808080;"}

[Every statement in this article can probably have an asterisk appended to the end of it, pointing out some example where a recommendation I wrote is 100% wrong. ALWAYS test your index changes, because what might improve one query may hurt another one already running on that table, and over time you will learn about all of those edge cases and how they affect index performance.]{style="color: #808080;"}

Alright let's take a look at a few common scenarios and what the best index for them might be. After reading each scenario, take a guess about what kind of index you would add and then click on the answer to reveal what I would do in that scenario. Assume no indexes exist yet on these tables unless otherwise noted.

- You have OLTP data that's used only for transactional reads and writing new rows. You know the primary key is an identity integer column. What type of index would you create for the primary key?

Clustered index - Your queries are probably always going to be looking up by PK to return data. If you store the data in the table ordered by that PK, SQL will be able to do this very quickly. New row additions to the table will always get put at the end because of the auto-incrementing identity column, not creating any overhead for having to insert data in a specific location in the ordered data. - You have a query that wants to return most or all of the columns from a table. What type of index would make this the most efficient?

Clustered index - Since all of the column values are stored in the same location as your indexed fields, SQL won't have to go do any additional lookups to get all of the data you are requesting from it. If you created a nonclustered index you would have to INCLUDE all nonindexed columns, which would take up lots of space since you are essentially duplicating your entire table's data. - You have a table that is constantly having values updated. These updated values are used as in your JOINs and WHERE clauses. What type of index would you add? Nonclustered index - If our values are constantly changing, SQL only has to update the index and pointers while putting the actual data wherever it has available space on disk. Compare this to a clustered index where it has to put the inserted/updated data in the correct order, meaning potentially lots of operations to shift the data around if available free space doesn't exist at that location.

- You have a table that already has a clustered index, but it doesn't cover columns in JOINs and WHERE clauses. What do you do? Nonclustered index - since the clustered index already exists, your only option is to add a nonclustered index. Depending on the queries hitting this table however, you may want to consider changing your clustered index to a nonclustered index if you think your JOINs and WHERE clauses will be improved by having those fields be part of the clustered index. Test it out!

- You have a small staging table that you will always read all rows from and then truncate. You don't care about the order. Do you add an index? No, leave it as a heap - This is one scenario where not adding an index can give you better performance since there is no overhead in SQL having to store things in a sorted order or update indexes to specify the order. If you truly don't care about the order, and you will always be reading all rows from a table and then truncating the table, then it's better not to have the overhead of having indexes on the table.