Watch this week's video on YouTube

One of the things that the SQL Server query optimizer does is determine how to retrieve the data requested by your query.

Usually it does a pretty good job, which is a great because if it didn't then we'd be spending most of our days programming sorting and joining algorithms instead of having fun actually working with our data.

Sometimes the query optimizer has a lapse in judgement and createds a less-than-efficient plan, requiring us to step in and save the day.

Index Hints Give You Control

One way to "fix" a poor performing plan is to use an index hint. While we normally have no control over how SQL Server retrieves the data we requested, an index hint forces the query optimizer to use the index specified in the hint to retrieve the data (hence, it's really more of a "command" than a "hint").

Sometimes when I feel like I'm losing control I like using an index hint to show SQL Server who's boss. I occasionally will also use index hints when debugging poor performing queries because it allows me to confirm whether using an alternate index would improve performance without having to overhaul my code or change any other settings.

...But Sometimes That's Too Much Power

While I like using index hints for short-term debugging scenarios, that's about the only time they should be used because they can create some pretty undesirable outcomes.

For example, let's say I have this nice simple query and index here:

CREATE INDEX IX_OwnerUserId_CreationDate_Includes

ON dbo.Posts (OwnerUserId, CreationDate) INCLUDE (AcceptedAnswerId, ClosedDate, CommentCount, FavoriteCount, LastActivityDate);

SELECT

OwnerUserId,

AcceptedAnswerId

FROM

dbo.Posts

WHERE

OwnerUserId < 1000

This index was specifically created for a different query running on the Posts table, but it will also get used by the simple query above.

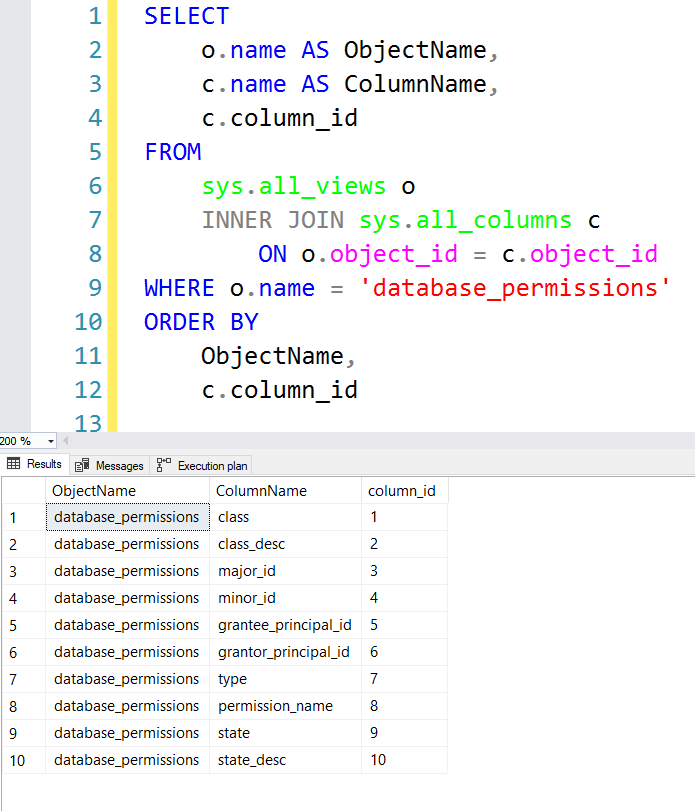

Executing this query without any hints causes SQL Server to use it anyway (since it's a pretty good index for the query), and we get decent performance: only 1002 logical reads.

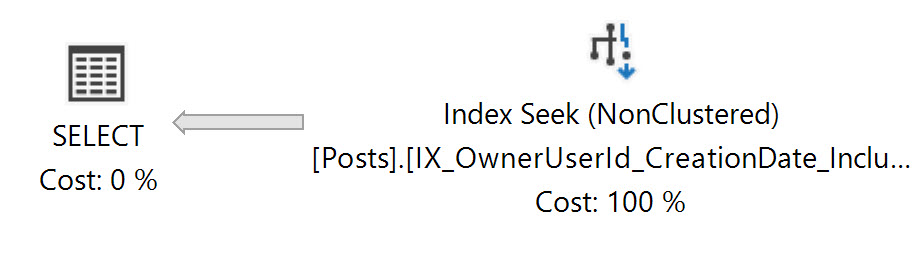

I wish all of my execution plans were this simple.

I wish all of my execution plans were this simple.

Let's pretend we don't trust the SQL Server optimizer to always choose this index, so instead we force it to use it by adding a hint:

SELECT

OwnerUserId,

AcceptedAnswerId

FROM

dbo.Posts WITH (INDEX(IX_OwnerUserId_CreationDate_Includes))

WHERE

OwnerUserId < 1000

With this hint, the index will perform exactly the same: 1002 logical reads, a good index seek, etc...

But what happens if in the future a better index gets added to the table?

CREATE INDEX IX_OwnerUserId_AcceptedAnswerId_Includes

ON dbo.Posts (OwnerUserId, AcceptedAnswerId) INCLUDE (LastEditorUserId, ParentId);

If we run the query WITHOUT the index hint, we'll see that SQL Server actually chooses this new index because it's smaller and we can get the data we need in only 522 logical reads:

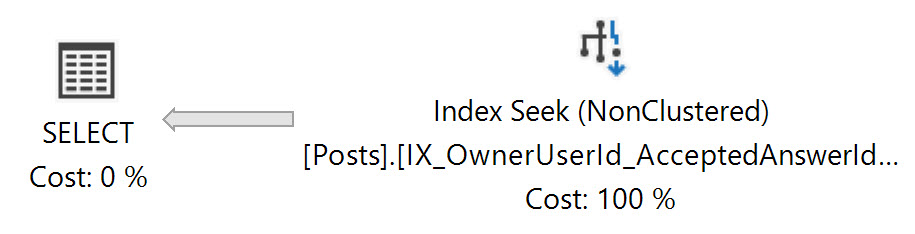

This execution plan looks the same, but you'll notice the smaller, more data dense index is being used.

This execution plan looks the same, but you'll notice the smaller, more data dense index is being used.

If we had let SQL Server do it's job, it would have given us a great performing query! Instead, we decided to intervene and hint (ie. force) it to use a sub-optimal index.

Things Can Get Worse

The above example is pretty benign - sure, without the hint SQL Server would have read about half as many pages, but this isn't a drastic difference in this scenario.

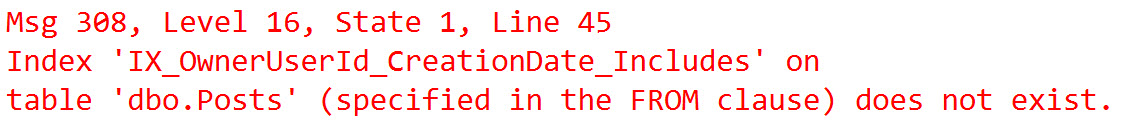

What could be disastrous is if because of the hint, the query optimizer decides to make a totally different plan that isn't nearly as efficient. Or if one day someone drops the hinted index, causing the query with the hint to down right fail:

Index hints can be nice to use in the short-term for investigating, testing, and debugging. However, they are almost never the correct long-term solution for fixing query performance.

Instead, it's better to look for the root-cause of a poor performing query: maybe you need to rebuild stats on an index or determine if the cardinality estimator being used is not ideal. You might also benefit from rewriting a terribly written query.

Any of these options will likely help you create a better, long-term, flexible solutions rather than forcing SQL Server to use the same hard-coded, potentially sub-optimal index forever.