Watch this week's video on YouTube

AT TIME ZONE is great because it makes it easy to perform daylight saving time and time zone conversions in our queries.

However, when using AT TIME ZONE for these types of conversions there are a couple key points to remember to ensure you are getting the correct results. Let's look at an example.

Thanks to reader Jeff Konicky for the inspiration for this post and allowing me to share it.

DST Conversions

This year, Daylight Savings Time kicks in at 2019-03-10 02:00:00 in Eastern Standard Time, meaning that the time zone switches from being 5 hours behind UTC to only 4 hours behind UTC.

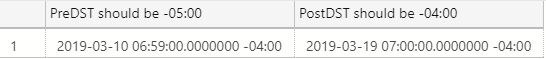

If we have two datetime2s with UTC data, one right before DST kicks in and one right after, we should be able to use AT TIME ZONE to convert these UTC times to Eastern Standard/Daylight Time no problem:

DECLARE

@PreDST datetime2 = '2019-03-10 06:59:00',

@PostDST datetime2 = '2019-03-19 07:00:00';

SELECT

@PreDST AT TIME ZONE 'Eastern Standard Time' AS [PreDST should be -05:00],

@PostDST AT TIME ZONE 'Eastern Standard Time' AS [PostDST should be -04:00];

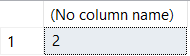

While that sounds great, the results above show it clearly didn't work - both of those datetime2s are showing as already being in daylight saving time (offset of -04:00).

Why did this happen?

SQL Server Doesn't Know Your Data Is In UTC

The fallacy above is that I said our two datetime2's are in UTC, but SQL Server doesn't actually know this. The datetime2 (and datetime) datatype doesn't allow for time zone offsets so SQL Server really doesn't know what time zone the data is in.

Using AT TIME ZONE on a datetime2 without offset information causes SQL Server to "...[assume] that [the datetime] is in the target time zone". That explains why the two datetime2s above, intended to be in UTC, are actually seen as Eastern Daylight Time by SQL Server.

Specifying the Time Zone Offset

So how do we tell SQL Server that our data is in UTC so AT TIME ZONE functions like we want?

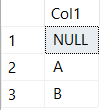

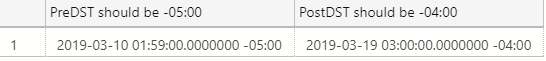

One option is to use the assumption of AT TIME ZONE above in our favor, using it to first convert the datetime2s to UTC and then to EST:

SELECT

@PreDST AT TIME ZONE 'UTC' AT TIME ZONE 'Eastern Standard Time' AS [PreDST should be -05:00],

@PostDST AT TIME ZONE 'UTC' AT TIME ZONE 'Eastern Standard Time' AS [PostDST should be -04:00];

Now we have the correct -05:00 and -04:00 offsets applied.

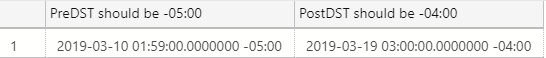

A cleaner solution would be to encode the original data by using the datetimeoffset datatype, which will indicate which time zone the datetimes are stored in:

DECLARE

@PreDST datetimeoffset = '2019-03-10 06:59:00+00:00',

@PostDST datetimeoffset = '2019-03-19 07:00:00+00:00';

SELECT

@PreDST AT TIME ZONE 'Eastern Standard Time' AS [PreDST should be -05:00],

@PostDST AT TIME ZONE 'Eastern Standard Time' AS [PostDST should be -04:00];

Don't Assume

Whenever working with datetime2 (or datetime) in SQL Server, you can't assume SQL Server knows the time zone of your data. Your business logic may indicate that you store dates in UTC, but SQL Server has no way of knowing that without your code explicitly stating it using something like AT TIME ZONE 'UTC' or storing your data in the datetimeoffset datatype.