Watch this week's video on YouTube

This week, I filmed my experience as a first-time speaker at PASS Summit in Seattle. No blog post, just a video to relive the experience.

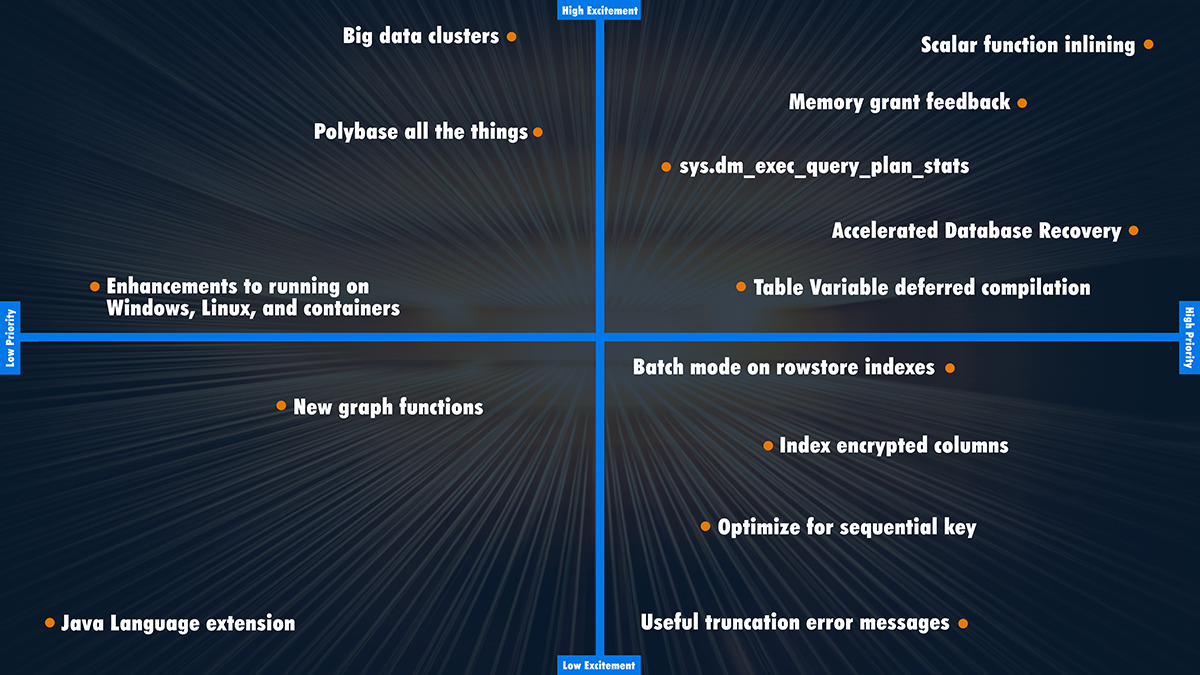

With the release of SQL Server 2019 imminent, I thought it'd be fun to rank which features I am most looking forward to in the new release.

(Also, I needed a lighter blogging week since I'm busy finishing preparing for my two sessions at PASS Summit next week - hope to see you there!).

I decided to rank these features on two axes: Excitement and Priority

Excitement is easy to describe: how excited I am about using these features. In my case, excitement directly correlates with performance and developer usability improvements. That doesn't mean "Low Excitement" features aren't beneficial; on the contrary, many are great, they just don't top my list (it wouldn't be fun to have a quadrant with everything in the top right).

Priority is how quickly I'll work on implementing or tuning these features. The truth is that some of these features will work automatically once a SQL Server instance is upgraded, while others will require extra work (e.g., query rewriting or hardware configuration). Once again, "Low Priority" features aren't bad, they just won't be the features that I focus on first.

Finally, these rankings are based on Microsoft's descriptions of these features and what little tinkering I've done with pre-releases of SQL Server 2019. For all I know, this chart will totally change once I start using these features regularly in production environments.

And here are my rankings in list form in case that's more your style:

What are you most excited for in 2019? What features did I miss? Disagree with where something should be ranked? Let me know in the comments below.

SQL Server has several ways to store queries for later executions.

This makes developers happy because it allows them to follow DRY principles: Don't Repeat Yourself. The more code you have, the more difficult it is to maintain. Centralizing frequently used code into stored procedures, functions, and similar objects is attractive.

While following the DRY pattern is beneficial in many programming languages, it can often cause poor performance in SQL Server.

Today's post will try to explain all of the different code organization features available in SQL Server and when to best use them (thank you to dovh49 on YouTube for recommending this week's topic and reminding me how confusing all of these different features can be when first learning to use them).

    CREATE OR ALTER FUNCTION dbo.GetUserDisplayName
    (
        @UserId int
    )
    RETURNS nvarchar(40)
    AS
    BEGIN
        DECLARE @DisplayName nvarchar(40);

        -- Look up the display name for a single user
        SELECT @DisplayName = DisplayName FROM dbo.Users WHERE Id = @UserId;

        RETURN @DisplayName;
    END
    GO

    -- The function (and the query inside it) runs once for every row returned
    SELECT TOP 10000 Title, dbo.GetUserDisplayName(OwnerUserId) FROM dbo.Posts;

Scalar functions run statements that return a single value.

You'll often read about SQL functions being evil, and scalar functions are a big reason for this reputation. If your scalar function executes a query within it to return a single value, that means every row that calls that function runs this query. That's not good if you have to run a query once for every row in a million row table.

SQL Server 2019 can inline a lot of these, providing better performance in most cases. However, you can already do this yourself today by taking your scalar function and including it in your calling query as a subquery. The only downside is that you'll be repeating that same logic in every calling query that needs it.
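As a rough sketch of that manual inlining, the earlier query could fold the lookup from dbo.GetUserDisplayName directly into a correlated subquery. It assumes the same dbo.Posts and dbo.Users tables used above; the DisplayName column alias is my own choice for illustration.

```sql
-- Same result as calling dbo.GetUserDisplayName(OwnerUserId),
-- but the lookup is visible to the optimizer as part of the main query
SELECT TOP 10000
    p.Title,
    (
        SELECT u.DisplayName
        FROM dbo.Users u
        WHERE u.Id = p.OwnerUserId
    ) AS DisplayName
FROM dbo.Posts p;
```

The subquery would need to be copied into every query that wants a display name, which is the DRY trade-off described above.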

Additionally, using a scalar function on the column side of a predicate will prevent SQL Server from being able to seek to data in any of its indexes; talk about performance killing.
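To illustrate, here is a sketch using the built-in YEAR() function, since wrapping a column in a built-in function blocks index seeks in the same way a user-defined scalar function does. It assumes a hypothetical index on dbo.Posts.CreationDate, which is not something established elsewhere in this post.

```sql
-- Function on the column side: every row's CreationDate must be evaluated,
-- so an index on CreationDate can only be scanned, not seeked
SELECT Id, Title
FROM dbo.Posts
WHERE YEAR(CreationDate) = 2018;

-- Equivalent filter with the column left bare: the range can be seeked
SELECT Id, Title
FROM dbo.Posts
WHERE CreationDate >= '20180101'
  AND CreationDate <  '20190101';
```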

For scalar functions that don't execute a query, you can always use WITH SCHEMABINDING to gain a performance boost.
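A minimal sketch of what that could look like is below. The function name dbo.AddTax and the 1.08 multiplier are hypothetical, made up purely for illustration; the only point is the placement of WITH SCHEMABINDING on a function that touches no tables.

```sql
-- Hypothetical scalar function that performs a calculation only (no data access)
CREATE OR ALTER FUNCTION dbo.AddTax
(
    @Amount decimal(10,2)
)
RETURNS decimal(10,2)
WITH SCHEMABINDING
AS
BEGIN
    RETURN @Amount * 1.08;
END
GO

SELECT dbo.AddTax(100.00) AS AmountWithTax;
```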

    CREATE OR ALTER FUNCTION dbo.SplitTags
    (
        @PostId int
    )
    RETURNS TABLE
    AS
    RETURN
    (
        -- Tags are stored like <tag1><tag2>, so split on '<' and strip the '>'
        SELECT REPLACE(t.value,'>','') AS Tags
        FROM dbo.Posts p
        CROSS APPLY STRING_SPLIT(p.Tags,'<') t
        WHERE p.Id = @PostId AND t.value <> ''
    )
    GO

    SELECT * FROM dbo.SplitTags(4);

Inline table-valued functions allow a function to return a table result set instead of just a single value. They essentially are a way for you to reuse a derived table query (you know, when you nest a child query in your main query's FROM or WHERE clause).

These are usually considered "good" SQL Server functions - their performance is decent because SQL Server can get relatively accurate estimates on the data that they will return, as long as the statistics on that underlying data are accurate. Generally this allows for efficient execution plans to be created. As a bonus, they allow parameters so if you find yourself reusing a subquery over and over again, an inline table-valued function (with or without a parameter) is actually a nice feature.
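As a sketch of that reuse, the dbo.SplitTags function defined above could be applied to many posts at once with CROSS APPLY instead of being called for a single hard-coded Id. The PostTypeId = 1 filter (questions only) is my assumption about which rows are worth splitting.

```sql
-- Call the inline table-valued function once per outer row;
-- because it is inline, the optimizer can expand it into the calling query's plan
SELECT
    p.Id,
    p.Title,
    t.Tags
FROM dbo.Posts p
CROSS APPLY dbo.SplitTags(p.Id) t
WHERE p.PostTypeId = 1;
```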

    CREATE OR ALTER FUNCTION dbo.GetQuestionWithAnswers
    (
        @PostId int
    )
    RETURNS
    @results TABLE
    (
        PostId bigint,
        Body nvarchar(max),
        CreationDate datetime
    )
    AS
    BEGIN
        -- Returns the original question along with all of its answers in one result set
        -- Would be better to do this with something like a union all or a secondary join.
        -- But this is an MSTVF demo, so I'm doing it with multiple statements.

        -- Statement 1
        INSERT INTO @results (PostId,Body,CreationDate)
        SELECT Id,Body,CreationDate
        FROM dbo.Posts
        WHERE Id = @PostId;

        -- Statement 2
        INSERT INTO @results (PostId,Body,CreationDate)
        SELECT Id,Body,CreationDate
        FROM dbo.Posts
        WHERE ParentId = @PostId;

        RETURN;
    END
    GO

    SELECT * FROM dbo.GetQuestionWithAnswers(4);

Multi-statement table-valued functions at first glance look and feel just like their inline table-valued function cousins: they both accept parameter inputs and return results back into a query. The major difference is that they allow multiple statements to be executed before the results are returned in a table variable.

This is a great idea in theory - who wouldn't want to encapsulate multiple operational steps into a single function to make their querying logic easier?

However, the major downside is that, prior to SQL Server 2017, SQL Server knew nothing about what was happening inside of a multi-statement table-valued function when compiling the calling query. This means all of your estimates for MSTVFs will be 100 rows (1 if you are on a version prior to 2014, slightly more accurate if you are on versions 2017 and above). As a result, execution plans generated for queries that call MSTVFs will often be...less than ideal. Because of this, MSTVFs help add to the "evil" reputation of SQL functions.
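The comment inside the demo function hints at the usual way around this: express the same logic as a single statement so it can live in an inline table-valued function. A sketch of that rewrite follows; the name dbo.GetQuestionWithAnswersInline is hypothetical.

```sql
-- Same result set as dbo.GetQuestionWithAnswers, but as one statement,
-- so the optimizer can estimate rows from the statistics on dbo.Posts
CREATE OR ALTER FUNCTION dbo.GetQuestionWithAnswersInline
(
    @PostId int
)
RETURNS TABLE
AS
RETURN
(
    SELECT Id AS PostId, Body, CreationDate
    FROM dbo.Posts
    WHERE Id = @PostId

    UNION ALL

    SELECT Id AS PostId, Body, CreationDate
    FROM dbo.Posts
    WHERE ParentId = @PostId
)
GO

SELECT * FROM dbo.GetQuestionWithAnswersInline(4);
```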

    CREATE OR ALTER PROCEDURE dbo.InsertQuestionsAndAnswers
        @PostId int
    AS
    BEGIN
        SET NOCOUNT ON;

        -- Step 1: copy the question
        INSERT INTO dbo.Questions (Id)
        SELECT Id
        FROM dbo.Posts
        WHERE Id = @PostId;

        -- Step 2: copy all of the question's answers
        INSERT INTO dbo.Answers (Id, PostId)
        SELECT Id, ParentId
        FROM dbo.Posts
        WHERE ParentId = @PostId;
    END
    GO

    EXEC dbo.InsertQuestionsAndAnswers @PostId = 4;

Stored procedures encapsulate SQL query statements for easy execution. They return result sets, but those result sets can't be easily used within another query.

This works great when you want to define single or multi-step processes in a single object for easier calling later.
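To make the "can't be easily used within another query" point concrete, here is a sketch of the usual workaround: capturing a procedure's result set into a temporary table with INSERT ... EXEC before joining to it. The procedure dbo.GetAnswerIds and the temp table are hypothetical names created just for this example.

```sql
-- Hypothetical procedure that returns a result set
CREATE OR ALTER PROCEDURE dbo.GetAnswerIds
    @PostId int
AS
BEGIN
    SET NOCOUNT ON;

    SELECT Id
    FROM dbo.Posts
    WHERE ParentId = @PostId;
END
GO

-- A procedure can't appear in a FROM clause, so store its output first
CREATE TABLE #AnswerIds (Id int);

INSERT INTO #AnswerIds (Id)
EXEC dbo.GetAnswerIds @PostId = 4;

-- Now the results can take part in another query
SELECT p.Id, p.CreationDate
FROM dbo.Posts p
INNER JOIN #AnswerIds a
    ON a.Id = p.Id;

DROP TABLE #AnswerIds;
```

Compare this with the table-valued functions above, which can be referenced directly in a FROM clause without the extra step.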

Stored procedures also have the added benefit of being able to have more flexible security rules placed on them, allowing users to access data in specific ways where they don't necessarily have access to the underlying sources.
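A sketch of that security pattern is below. The role name QuestionLoaders is hypothetical, and the example assumes the procedure and the tables it touches share the same owner so that ownership chaining applies.

```sql
-- Hypothetical role for users who may load questions but not touch the tables
CREATE ROLE QuestionLoaders;

-- Members can run the procedure...
GRANT EXECUTE ON OBJECT::dbo.InsertQuestionsAndAnswers TO QuestionLoaders;

-- ...without being granted SELECT or INSERT on dbo.Posts,
-- dbo.Questions, or dbo.Answers directly.
```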

    CREATE OR ALTER VIEW dbo.QuestionsWithUsers
    WITH SCHEMABINDING
    AS
    SELECT
        p.Id AS PostId,
        u.Id AS UserId,
        u.DisplayName
    FROM
        dbo.Posts p
        INNER JOIN dbo.Users u
            ON p.OwnerUserId = u.Id
    WHERE
        p.PostTypeId = 1;
    GO

    -- Indexing the view materializes its result set
    CREATE UNIQUE CLUSTERED INDEX CL_PostId ON dbo.QuestionsWithUsers (PostId);

    SELECT * FROM dbo.QuestionsWithUsers;

Views are similar to inline table-valued functions: they allow you to centralize a query in an object that can be easily called from other queries. The results of the view can be used as part of that calling query; however, parameters can't be passed in to the view.

Views also have some of the security benefits of a stored procedure: users can be granted access to a view containing a limited subset of data from an underlying table that those same users don't have access to.

Views also have some performance advantages since they can have indexes added to them, essentially materializing the result set in advance of the view being called (resulting in faster performance). When deciding between an inline table-valued function and a view, a view is usually the better option if you don't need to parameterize the input.
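As a sketch of how the indexed view might be queried, the example below uses the NOEXPAND table hint, which asks SQL Server to read from the view's own index rather than expanding the view back into its base tables. Whether the hint is needed depends on your edition and version, so treat this as an assumption to verify rather than a rule.

```sql
-- Read directly from the materialized (indexed) view defined above
SELECT PostId, UserId, DisplayName
FROM dbo.QuestionsWithUsers WITH (NOEXPAND)
WHERE PostId = 4;
```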

    CREATE TABLE dbo.QuestionsStaging (Id int PRIMARY KEY NONCLUSTERED) WITH ( MEMORY_OPTIMIZED = ON , DURABILITY = SCHEMA_ONLY );
    CREATE TABLE dbo.AnswersStaging (Id int PRIMARY KEY NONCLUSTERED, PostId int) WITH ( MEMORY_OPTIMIZED = ON , DURABILITY = SCHEMA_ONLY );
    GO

    -- Note: natively compiled procedures can only reference memory-optimized tables,
    -- so dbo.Posts would need to be memory-optimized as well for this to compile.
    CREATE PROCEDURE dbo.InsertQuestionsAndAnswersCompiled
        @PostId int
    WITH NATIVE_COMPILATION, SCHEMABINDING
    AS BEGIN ATOMIC WITH
    (
        TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english'
    )
        INSERT INTO dbo.QuestionsStaging (Id)
        SELECT Id
        FROM dbo.Posts
        WHERE Id = @PostId;

        INSERT INTO dbo.AnswersStaging (Id, PostId)
        SELECT Id, ParentId
        FROM dbo.Posts
        WHERE ParentId = @PostId;
    END

These are the same as the stored procedures and scalar functions mentioned above, except they are pre-compiled for use with in-memory tables in SQL Server.

This means that instead of SQL Server interpreting the SQL query every time a procedure or scalar function has to run, it creates the compiled version ahead of time, reducing the startup overhead of executing one of these objects. This is a great performance benefit; however, they have several limitations. If you are able to use them, you should; just be aware of what they can and can't do.

While writing this post I thought about when I was first learning all of these objects for storing SQL queries. Knowing the differences between all of the options available (or what those options even are!) can be confusing. I hope this post helps ease some of this confusion and helps you choose the right objects for storing your queries.

A few months ago I was presenting for a user group when someone asked the following question:

Does a query embedded in a stored procedure execute faster than that same query submitted to SQL Server as a stand alone statement?

The room was pretty evenly split on the answer: some thought stored procedures would always perform faster while others thought it wouldn't really matter.

In short, the answer is that the query optimizer will treat a query defined in a stored procedure exactly the same as a query submitted on its own.

Let's talk about why.

While submitting an "EXEC <stored procedure>" statement to SQL Server may require fewer packets of network traffic than submitting the several hundred (thousands?) lines that make up the query embedded in the procedure itself, that is where the efficiencies of a stored procedure end*.

*NOTE: There are certain SQL Server performance features, like temporary object caching, natively compiled stored procedures for memory-optimized tables, etc… that will improve the performance of a stored procedure over an ad hoc query. However, in my experience, most people aren't utilizing these types of features, so it's a moot point.

After receiving the query, SQL Server's query optimizer looks at these two submitted queries exactly the same. It will check to see if a cached plan already exists for either query (and if one does, it will use that), otherwise it will send both queries through the optimization process to find a suitable execution plan. If the standalone query and the query defined in the stored procedure are exactly the same, and all other conditions on the server are exactly the same at the time of execution, SQL Server will generate the same plans for both queries.

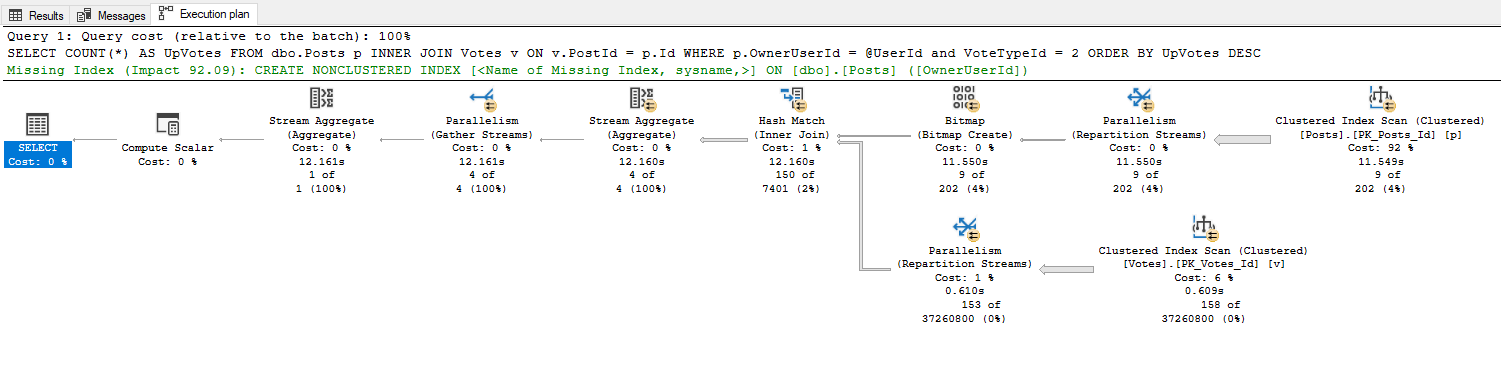

To prove this point, let's look at the following query's plan as well as the plan for a stored procedure containing that same query:

    -- Version 1: the query wrapped in a stored procedure
    CREATE OR ALTER PROCEDURE dbo.USP_GetUpVotes
        @UserId int
    AS
    SELECT
        COUNT(*) AS UpVotes
    FROM
        dbo.Posts p
        INNER JOIN dbo.Votes v
            ON v.PostId = p.Id
    WHERE
        p.OwnerUserId = @UserId
        AND v.VoteTypeId = 2
    ORDER BY UpVotes DESC;
    GO

    EXEC dbo.USP_GetUpVotes 23;
    GO

    -- Version 2: the same query submitted on its own
    DECLARE @UserId int = 23;

    SELECT
        COUNT(*) AS UpVotes
    FROM
        dbo.Posts p
        INNER JOIN dbo.Votes v
            ON v.PostId = p.Id
    WHERE
        p.OwnerUserId = @UserId
        AND v.VoteTypeId = 2
    ORDER BY UpVotes DESC;

As you can see, the optimizer generates identical plans for both the standalone query and the stored procedure. In the eyes of SQL Server, both of these queries will be executed in exactly the same way.

I think that a lot of the confusion for thinking that stored procedures execute faster comes from caching.

As I wrote about a little while back, SQL Server is very particular about needing every little detail about a query to be exactly the same in order for it to reuse its cached plan. This includes things like white space and case sensitivity.

It is much less likely that a query inside of a stored procedure will change compared to a query that is embedded in code. Because of this, it's more likely that your stored procedures are being run from cached plans while your individually submitted query texts may not be utilizing the cache. As a result, the stored procedure may in fact execute faster because it was able to reuse a cached plan. But this is not a fair comparison - if both queries pulled their plans from the cache, or if both had to generate new execution plans, they would have the same execution performance.
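One way to see this for yourself is to look at the plan cache directly. The sketch below uses the sys.dm_exec_cached_plans and sys.dm_exec_sql_text dynamic management objects to list cached plans whose text mentions UpVotes; the LIKE filters are my own rough way of finding the demo queries, and running it requires permission to view server state.

```sql
-- objtype shows 'Proc' for stored procedures and 'Adhoc' for standalone statements;
-- usecounts shows how many times each cached plan has been reused
SELECT
    cp.objtype,
    cp.usecounts,
    st.text
FROM sys.dm_exec_cached_plans cp
CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) st
WHERE st.text LIKE N'%UpVotes%'
  AND st.text NOT LIKE N'%dm_exec_cached_plans%'  -- exclude this query itself
ORDER BY cp.usecounts DESC;
```

If the standalone query is submitted several times with small differences in whitespace or casing, you would expect to see several 'Adhoc' rows with low use counts next to a single 'Proc' row with a higher one.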

So while in the majority of cases a standalone query will perform just as quickly as that same query embedded in a stored procedure, I still think it's better to use stored procedures when possible.

First, embedding your query inside of a stored procedure increases the likelihood that SQL Server will reuse that query's cached execution plan as explained above.

Secondly, using stored procedures is cleaner for organization, storing all of your database logic in one location: the database itself.

Finally, and most importantly, using stored procedures gives your DBA better insight into your queries. Storing a query inside of a stored procedure means your DBA can easily access and analyze it, offering suggestions and advice on how to fix it in case it is performing poorly. If your queries are all embedded in your apps instead, it makes it harder for the DBA to see those queries, reducing the likelihood that they will be able to help you fix your performance issues in a timely manner.

This post is a response to this month's T-SQL Tuesday #119 prompt by Alex Yates. T-SQL Tuesday is a way for the SQL Server community to share ideas about different database and professional topics every month.

This month's topic asks us to write about something in our careers that we have changed our minds about.

In my first years as a developer, I used to think that being a great programmer meant you knew the latest technologies, followed the best design patterns, and used the trendiest tools. Because of this, I focused my time strictly on those topics.

On the flip side, what I didn't spend a lot of time on were communication skills. I figured as long as I could write an email without too many typos or if I could coherently answer a question someone asked of me, then communication was not something I had to focus on.

Over the years I've changed my mind about this however: communication skills are often just as important (if not sometimes more important) as any of the other technical skills a great programmer uses.

In my experience, I've found three major improvements from spending some of my learning time on improving my communication skills:

Nothing is worse than spending a significant amount of time talking about ideas, requirements, and next steps, only to have to go through all of it again because not everyone had the same understanding. Not only does this waste valuable time, it may result in development rework and cause frustration among team members.

Unclear communication, or lack of communication altogether, leaves doubt in the minds of those you work with. Not communicating clearly about your own progress leaves teammates questioning if your dependency will allow them to finish their work on time, makes your manager question whether the project will be delayed, and makes your customers concerned about the stability of the product.

Taking a proactive approach to communicating clearly sets people's expectations up front, leaving little room for any doubt.

Once you learn to communicate more effectively, you start to notice when others aren't doing the same. This allows you to recognize potential problems or unclear information from the start. Once you start recognizing this, you'll be able to reframe a question or follow up with more specifics, resulting in your needs getting met quicker.

Below are a few techniques that have helped me become a better communicator. I'm by no means an expert, but I have found these few things to work really well for my situation.

Before sending an email, reread it from the perspective of everyone you are sending it to. Even though everyone will be receiving the same exact text, their interpretation may vary based on their background or context. You will be doing yourself a huge favor if you can edit your message so that each imagined perspective interprets the message in the same way.

Be ruthless with self-editing before you speak and with cutting unnecessary thoughts in written form. When writing, put your most important ideas or requests first. If anything needs to be seen beyond the first line, call it out by bolding the text or highlighting it. Don't risk someone skipping over the important parts of your message.

People are busy. You aren't always a priority. While you have to handle it politely, reminding someone that you need something from them is important. If you communicated clearly from the outset, this is easy to do. If you reread your initial request and realize it wasn't as clear as it could be, this is your opportunity to do a better job.

The best way to get better at communicating is through practice. Be intentional about it. Write only what matters and speak succinctly when it comes to project work. I'm far from being where I want to be in these respects, but every time I write an email, write a video script, or speak up in a team meeting, I want to make sure what I'm saying is clear and as easy to understand as possible.