Watch this week's video on YouTube

Looking for a script to find possible SQL injection vulnerabilities on your server? Scroll to the bottom of this post.

OWASP names SQL injection as the #1 vulnerability for web applications. The infamy of this attack has even made its way into the popular XKCD comic.

What is SQL injection?

A SQL query is vulnerable to SQL injection if a user can run a query other than the one that was originally intended.

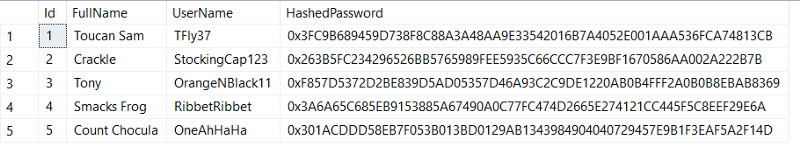

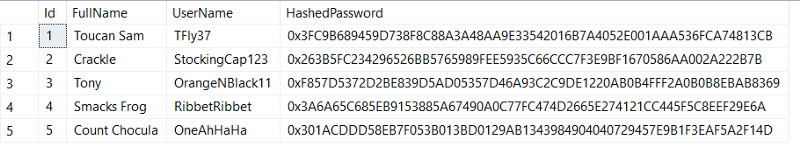

Sometimes SQL injection is easier to understand with an example. Let's use this table of registered users:

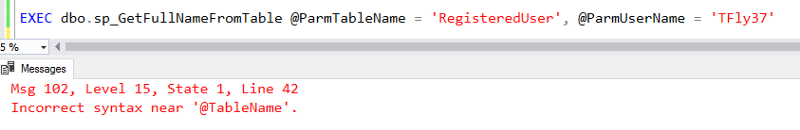

And then let's create a simple stored procedure that will query that table:

    CREATE PROCEDURE dbo.sp_GetFullName
        @ParmUserName varchar(100)
    AS
    BEGIN
        DECLARE @FullQuery varchar(1000)
        SET @FullQuery = 'SELECT FullName FROM dbo.RegisteredUser WHERE UserName = ''' + @ParmUserName + ''''
        EXEC(@FullQuery);
    END

The important thing to note in the query above is that we are generating a dynamic SQL statement; that is, we are building the SQL query string, and then we are executing it.

Imagine this stored procedure runs in order to display a "Welcome, [full name]!" message in our app: a website visitor types in their username, we pass it in as @ParmUserName, and the stored procedure returns their full name.

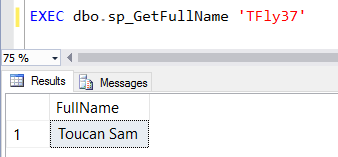

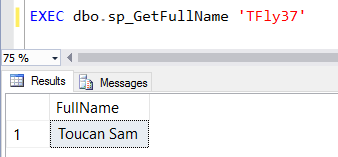

Here's our code that calls the stored procedure:

    EXEC dbo.sp_GetFullName 'TFly37'

And result:

Cool. No problems so far.

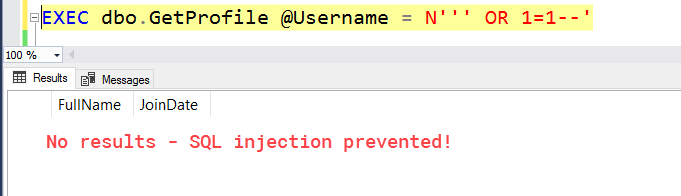

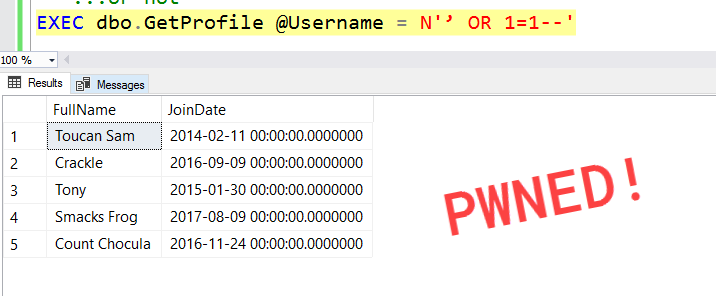

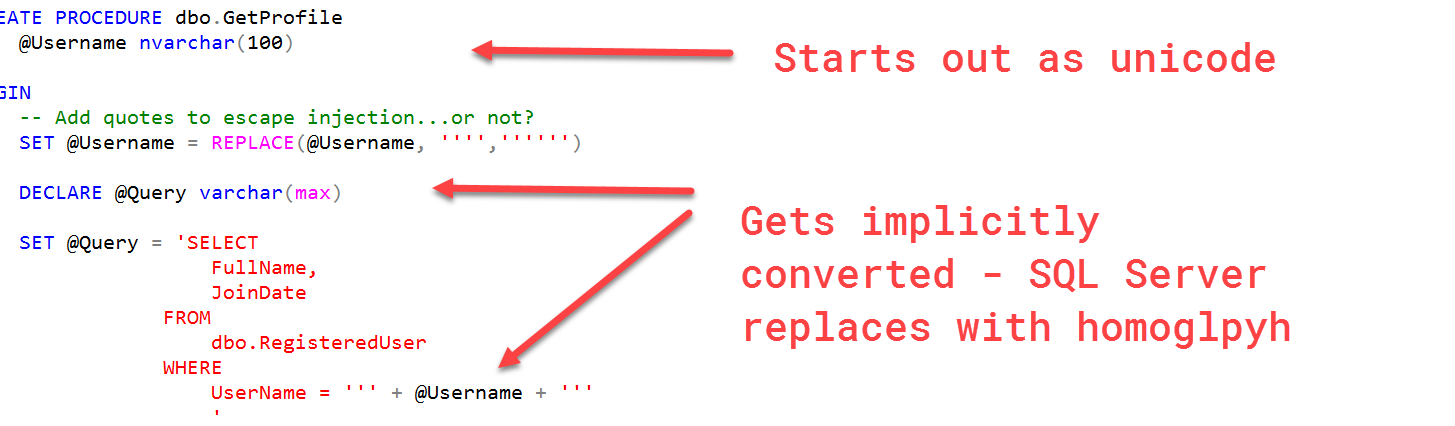

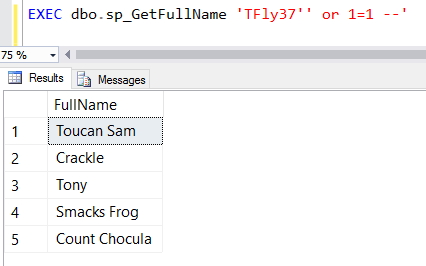

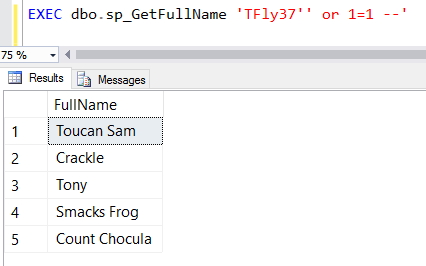

However, what if our user decides to pass in the following value as their username?

    EXEC dbo.sp_GetFullName 'TFly37'' or 1=1 --'

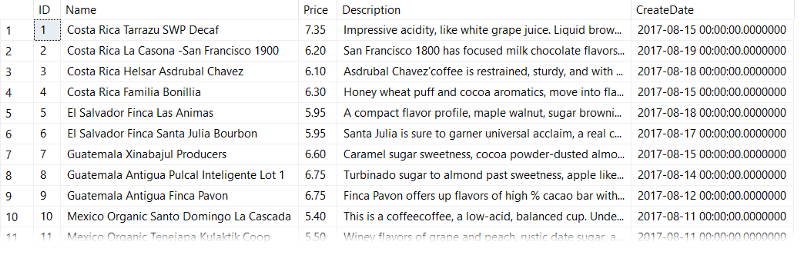

This funny-looking parameter value returns this:

AHHHHHH!!!!

This user just hacked our website and viewed all of the users in our table.

In this specific case only our users' full names were breached, but in other instances it's just as easy for more sensitive data like passwords, social security numbers, and bank account numbers to be revealed as well. (If you are looking for some fun, search for "SQL injection" on the Have I been pwned? list of Pwned websites to see all of the companies that aren't doing a good job protecting your privacy.)

So how did that example of SQL injection actually work?

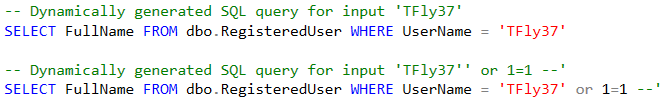

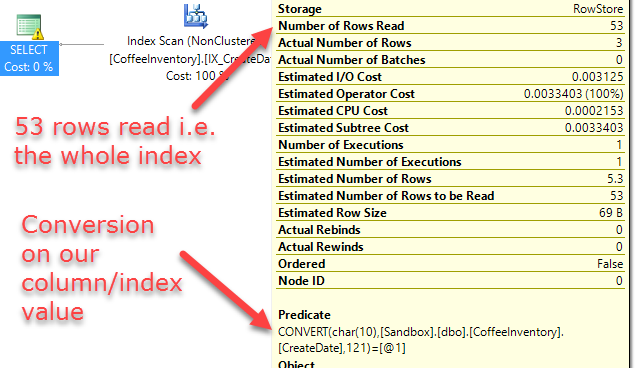

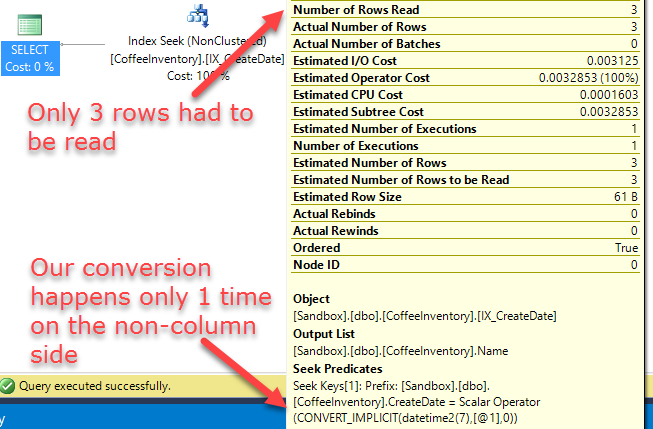

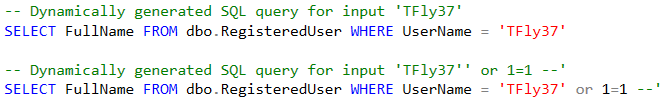

Since our stored procedure executes a dynamically generated query, let's look at what that generated query actually looks like for both of the parameters that were passed in:

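Even though TFly37'' or 1=1-- doesn't look like an intelligible input parameter, when it's concatenated into our query it makes sense: the doubled quote becomes a single quote that closes the UserName string early, or 1=1 makes the WHERE clause true for every row, and -- comments out the closing quote our procedure appends.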
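If you want to see those generated queries for yourself, here is a minimal sketch that rebuilds the same string as dbo.sp_GetFullName but PRINTs it instead of executing it. The variable names are copied from the procedure above; the standalone batch itself is just an illustration, not part of the original procedure.

```sql
-- Rebuilds the concatenation from dbo.sp_GetFullName and prints it
-- instead of executing it, so nothing is run against the table.
DECLARE @ParmUserName varchar(100);
DECLARE @FullQuery varchar(1000);

-- Case 1: an ordinary username
SET @ParmUserName = 'TFly37';
SET @FullQuery = 'SELECT FullName FROM dbo.RegisteredUser WHERE UserName = ''' + @ParmUserName + '''';
PRINT @FullQuery;
-- SELECT FullName FROM dbo.RegisteredUser WHERE UserName = 'TFly37'

-- Case 2: the malicious value ('' inside a literal is one quote character)
SET @ParmUserName = 'TFly37'' or 1=1 --';
SET @FullQuery = 'SELECT FullName FROM dbo.RegisteredUser WHERE UserName = ''' + @ParmUserName + '''';
PRINT @FullQuery;
-- SELECT FullName FROM dbo.RegisteredUser WHERE UserName = 'TFly37' or 1=1 --'
```

In the second string, everything after -- is a comment, so what actually runs is a SELECT whose WHERE clause is true for every row.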

Our malicious user is basically writing their own SQL query, one that will return all of the names of our users instead of just their own. In many instances, the crafty hacker can go a step further and write additional injection code to reveal the contents of the entire user table (or other tables in the database)!

How do I prevent SQL injection?

Simple: don't concatenate unsanitized user input data to your SQL queries.

In this example, this is easy to do: simply rewrite the stored procedure to not use dynamic SQL:

    CREATE PROCEDURE dbo.sp_GetFullNameSafe
        @ParmUserName varchar(100)
    AS
    BEGIN
        SELECT FullName FROM dbo.RegisteredUser WHERE UserName = @ParmUserName
    END

Without dynamic SQL, the parameter value is only ever compared as data and is never parsed as part of the statement, so this procedure can't be affected by SQL injection attempts.

Avoiding dynamic SQL isn't always possible though, so what can we do in those cases? Use sp_executesql:

    CREATE PROCEDURE dbo.sp_GetFullNameSafe2
        @ParmUserName varchar(100)
    AS
    BEGIN
        DECLARE @FullQuery nvarchar(1000)
        SET @FullQuery = N'SELECT FullName FROM dbo.RegisteredUser WHERE UserName = @UserName'
        DECLARE @ParmDefinition nvarchar(100) = N'@UserName varchar(100)';
        EXEC sp_executesql @FullQuery, @ParmDefinition,
            @UserName = @ParmUserName;
    END

sp_executesql allows for parameterization of your dynamic SQL. Because the user's value is passed in as a parameter instead of being concatenated into the query text, it can't be executed as code.
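A quick way to convince yourself is to replay the earlier attack against both rewritten procedures. This sketch assumes the two procedures and the dbo.RegisteredUser table exist as defined in this post.

```sql
-- Same malicious value as before, passed to both rewritten procedures.
-- In each case the whole value is compared against UserName as plain data.
EXEC dbo.sp_GetFullNameSafe  'TFly37'' or 1=1 --';
EXEC dbo.sp_GetFullNameSafe2 'TFly37'' or 1=1 --';
```

Neither call should dump the table: each one returns a row only if some user's UserName is literally the text TFly37' or 1=1 --.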

Are any of my current SQL queries vulnerable to SQL injection attacks?

So SQL injection is really bad and you don't want to become like Sony or Yahoo. How do we check to see if we have any vulnerable queries on our server?

I wrote the query below to help you start searching. It is not perfect, but it does act as a good starting point for auditing potentially injectable queries:

    -- This file tries to find stored procedures and functions that *may* be vulnerable to SQL injection attacks.
    -- It works by searching your database for occurrences of "+" signs followed by "@", indicating that SQL parameters
    -- might be getting concatenated to a dynamic SQL string. It also checks for the existence of 'EXEC' to see if any
    -- strings are being executed.
    -- Not every result returned will be susceptible to SQL injection, however they should all be examined to see if they are vulnerable.
    -- Originally from: https://github.com/bertwagner/SQLServer/blob/master/SQL%20Injection%20Vulnerabilities.sql

    SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED

    SELECT
        ROUTINE_CATALOG,
        ROUTINE_SCHEMA,
        ROUTINE_NAME,
        ROUTINE_TYPE,
        ROUTINE_DEFINITION
    FROM
        INFORMATION_SCHEMA.ROUTINES
    WHERE
        REPLACE(REPLACE(REPLACE(REPLACE(REPLACE(REPLACE(REPLACE(REPLACE(REPLACE(ROUTINE_DEFINITION,CHAR(0),''),CHAR(9),''),CHAR(10),''),CHAR(11),''),CHAR(12),''),CHAR(13),''),CHAR(14),''),CHAR(160),''),' ','')
            LIKE '%+@%'
        AND
        ( -- Only if executes a dynamic string
            ROUTINE_DEFINITION LIKE '%EXEC(%'
            OR ROUTINE_DEFINITION LIKE '%EXECUTE%'
            OR ROUTINE_DEFINITION LIKE '%sp_executesql%'
        )
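One limitation worth knowing about: ROUTINE_DEFINITION in INFORMATION_SCHEMA.ROUTINES only returns the first 4000 characters of a routine, so a concatenation further down a long procedure can be missed. Below is a hedged sketch of the same idea against sys.sql_modules, whose definition column holds the full text. This variant is an assumption of mine rather than part of the original script: it strips only tabs, line breaks, and spaces, uses a looser '%EXEC%' match, and will also surface views and triggers.

```sql
-- Sketch: same heuristic as the script above, but reading the full module
-- text from sys.sql_modules instead of the truncated ROUTINE_DEFINITION.
SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;

SELECT
    OBJECT_SCHEMA_NAME(m.object_id) AS SchemaName,
    OBJECT_NAME(m.object_id)        AS ObjectName,
    o.type_desc                     AS ObjectType,
    m.definition
FROM sys.sql_modules AS m
INNER JOIN sys.objects AS o
    ON o.object_id = m.object_id
WHERE
    o.is_ms_shipped = 0 -- skip objects shipped with SQL Server
    -- Remove tabs, line feeds, carriage returns, and spaces so "+ @" matches "+@"
    AND REPLACE(REPLACE(REPLACE(REPLACE(m.definition, CHAR(9), ''), CHAR(10), ''), CHAR(13), ''), ' ', '')
        LIKE '%+@%'
    AND
    ( -- Only if the module appears to execute a dynamic string
        m.definition LIKE '%EXEC%'
        OR m.definition LIKE '%sp_executesql%'
    );
```

Like the original, this will return false positives, so treat every row as a candidate to review rather than a confirmed vulnerability.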

I've found this script useful myself. If you find any issues with it, please let me know. Thanks!