How many times have you written a program, ETL, analysis job, etc… that seemed like it would never finish running?

Although poor performance can be caused in a multitude of ways, the easiest to fix is by reducing your data in SQL Server instead of your in your programming/ETL/analysis layer (Excel, R, SAS, Python, ..NET, etc…).

SQL is built to handle and process data extremely efficiently. You will usually experience much better performance the more work (data merging, transformations, etc…) you can do to your data on the SQL server. I say "usually" because SQL won't always be faster than a programming language at transforming data, but 9 times out of 10 you can get faster results straight on the SQL Server.

Watch this week's video on YouTube

Let's look at one of my crappy processes

How many of us have ever written a process that does something like this:

1. Write the most basic query possible, something like SELECT * FROM dbo.User

2. Take the output of the above query, load it into Excel/SAS/Python/.NET/etc…

3. Write some code to filter the dataset

4. Write some code to summarize the data, transform columns, etc…

5. Write another SELECT * FROM dbo.Sale against the SQL Server to bring in more data

6. Bring it into Excel/SAS/Python/.NET/etc… and merge it with our original data

7. Repeat steps 3–6 as many times as needed

Some of my earliest PHP and MySQL websites worked exactly like this 😳! The code was slow on my server and users ended up suffering with slow webpage load times.

If the above process even slightly resembles something you've written before, continue reading on…

Why bother learning to transform data in SQL? I already know how to do that stuff in .

Old habits are hard to break, but you do want to make your processes run faster, right? This stuff is all easy, I promise!

Basically, if you are running code similar to above, the reason your job is slow is because you are not optimizing where your work is being performed:

- Every time you write

SELECT * you probably are bringing back more data than you actually need — you are hurting your performance.

- Every time you don't have a

WHERE clause, you are hurting your performance.

- Every time your process queries the database multiple times (ie. multiple

SELECT statements in your job to bring back data), you are hurting your performance.

In case you missed it, not taking the time to filter and reduce your data down as much as possible in your SQL is hurting your performance! Assuming your SQL Server and your programming layer are on different machines, you lose lots of time transferring unnecessary data over the wires (or air) as well as not efficiently using all of the advantages that your SQL server offers.

What's the solution to this inefficient processing?

Process your data on the SQL Server!

If you are not filtering, joining, and transforming your data until your programming layer, you are likely losing valuable SQL performance power and network efficiency. Here are some easy ways to reduce the size of your dataset on the SQL Server to improve performance in your jobs (and make your coworkers envious of your skills)!

SELECT [ColumnName]

If you are using SELECT *, stop!

SELECT * brings back all of the columns on your table, including the ones you don't need. This increases the amount of data sent over the network (which doesn't even get used) as well as increases the amount of data that needs to be read from disk (and storage hardware is usually relatively slow). Not to mention if your table is using indexes, SELECT * most likely causes some of those indexes not to be used as efficiently (or at all) which causes your queries to slow down even further.

But what if you do need all of the columns on a particular table? You still shouldn't use SELECT *! Although there's no performance difference, using SELECT * just means you are taking on technical debt. In the future, when a column gets added or removed from your table, your downstream processes may break because they are now automatically receiving (or no longer receiving) that column. Do you want to have to fix a failing process in the future because its now receiving more data that it was expecting? I don't think so!

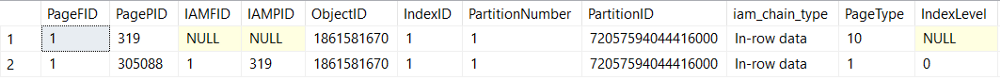

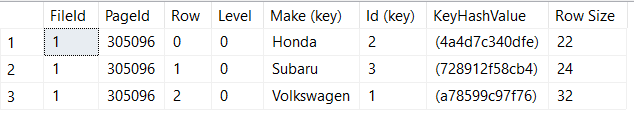

JOINs

My inefficient process example above starts with selecting some data and bringing it into my programming environment. The process then runs another query to bring in additional data and joins it to the data from my first query in my programming environment.

This is terrible!

First off, we are breaking the first principle we learned in the SELECT * section above — we are bringing back more data than we need! If we are using INNER JOIN on our two datasets, we most likely are going to be filtering out some data — data we don't need. Joining on the SQL server first will reduce our total dataset size and make our network and disk performance more efficient.

Even if we are doing something like a LEFT or FULL OUTER join where we will be keeping all of the data from one or both of our datasets, it still benefits us to perform this join on the SQL Server. Why you ask? Because the people who built SQL Server have spent hundreds or thousands of hours performance tuning and debugging their joining algorithms. The chances that you will be able to write a more efficient join algorithm is highly unlikely.

And even if you are a programming savant, why reinvent the wheel? Unless your app needs every last microsecond of performance, just use SQL Server for what it's really good at: relational data joining.

WHERE Clauses

Let's say our dbo.User table has 50 thousand rows and our dbo.Sale table has 1 million rows. If your process is only looking for active users and sales from the past month, let's say 2 thousand rows and 22,000 rows respectively, then you are causing SQL to lookup and transfer 95% more rows than your process needs. Not only does it kill network performance, but your program layer then needs to filter out this data, doing extra work that it probably can't do as efficiently as SQL Server.

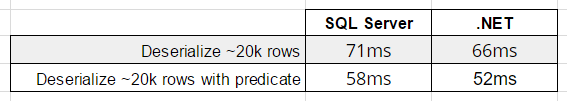

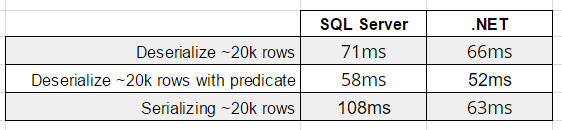

If instead I would have just added predicates to the SQL WHERE clause like Active=1 and SalesDate >= DATEADD(month, -1, GETDATE()) we would have saved both time and bandwidth.

Aggregate Functions

You know what's better than sending 10,000 rows of data over the network and then summing them up in your programming layer?

Using SQL's SUM() aggregate function to reduce those 10,000 rows to just 1 row before sending it across the network.

SQL aggregate functions take many rows of data and consolidate them down into fewer rows.

SQL's aggregate functions are also flexible enough to use the OVER() clause, allowing for windowed sets within your data — basically allowing you to be even more flexible with how you aggregate your data.

Don't wait until your application layer to summarize parts of your data — do it in your SQL query instead.

Scalar Functions

Although aggregate functions do some serious heavy lifting, scalar functions that run on each row of data aren't anything to laugh at either. Although they won't reduce the number of rows in your output, they can certainly reduce the number of columns you are outputting.

For example, say you have multiple columns of data in your dataset that ultimately need to be combined into a single output column. It's much better to use ISNULL(), COALESCE(), or CASE to combine multiple columns into a single column with logic in your SQL query so less data needs to be transferred later.

Once again, reducing the amount of data you are sending over the network is key to getting faster run times.

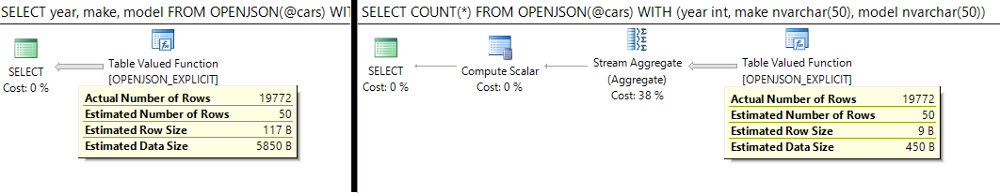

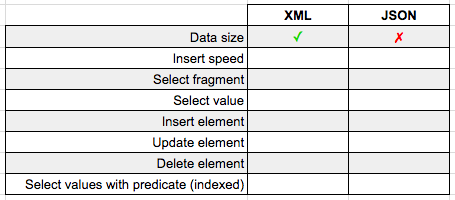

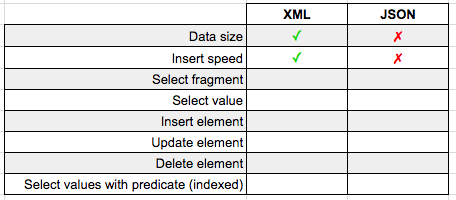

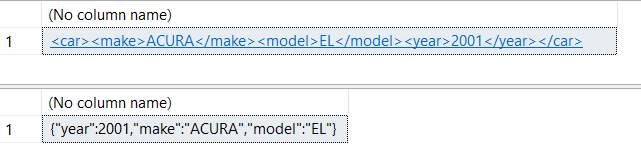

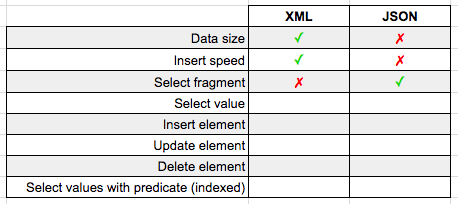

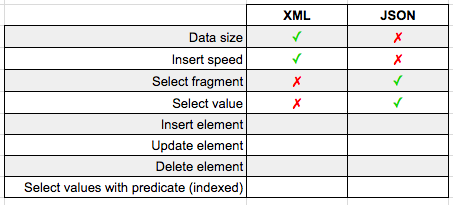

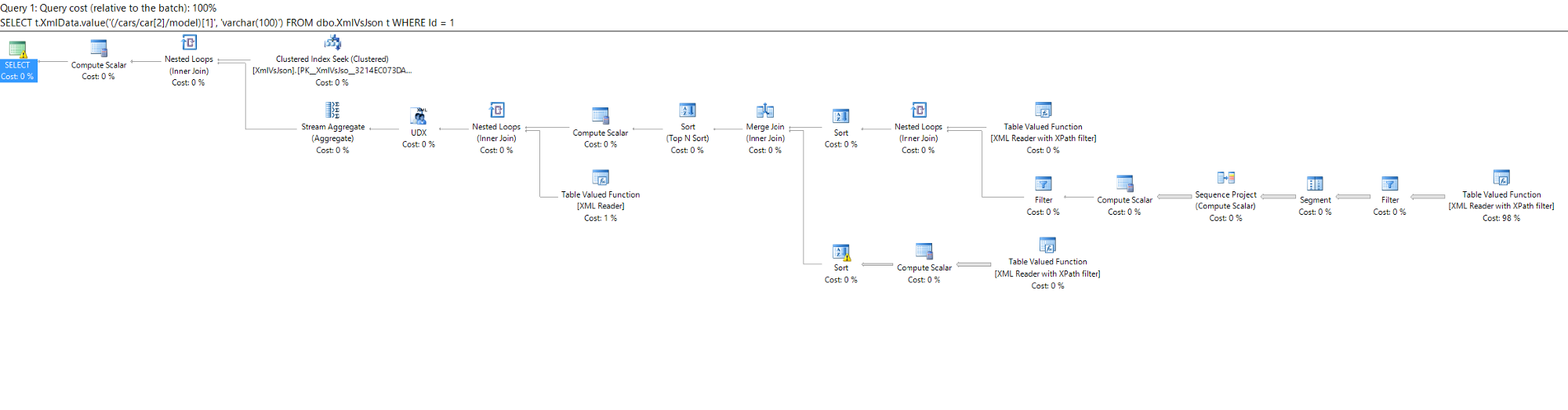

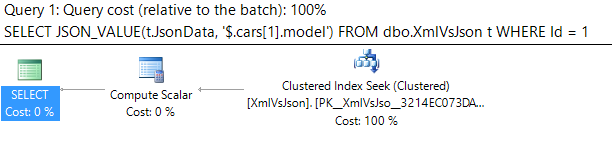

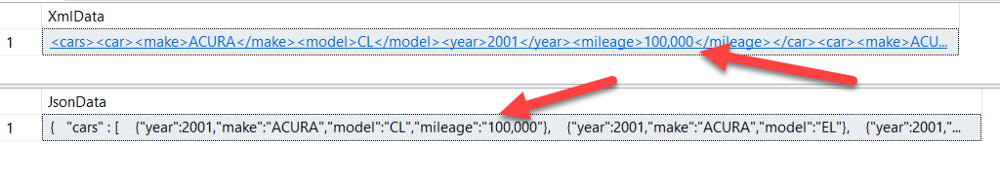

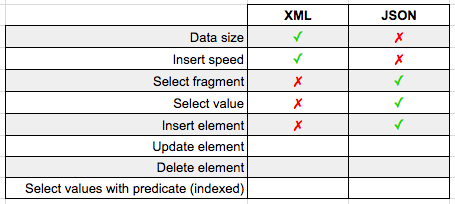

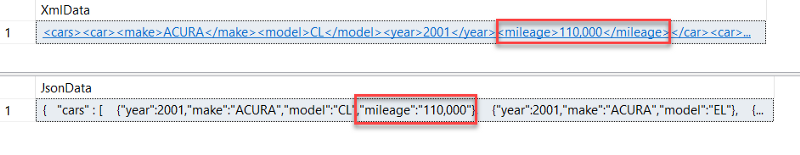

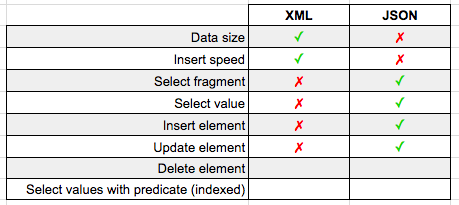

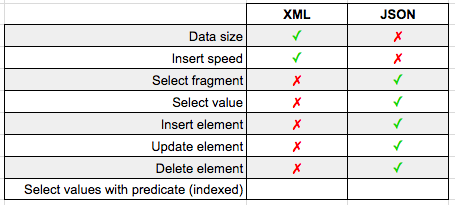

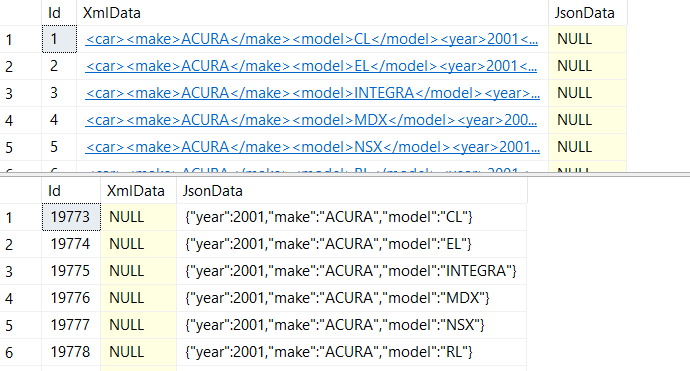

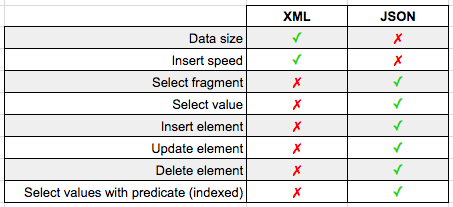

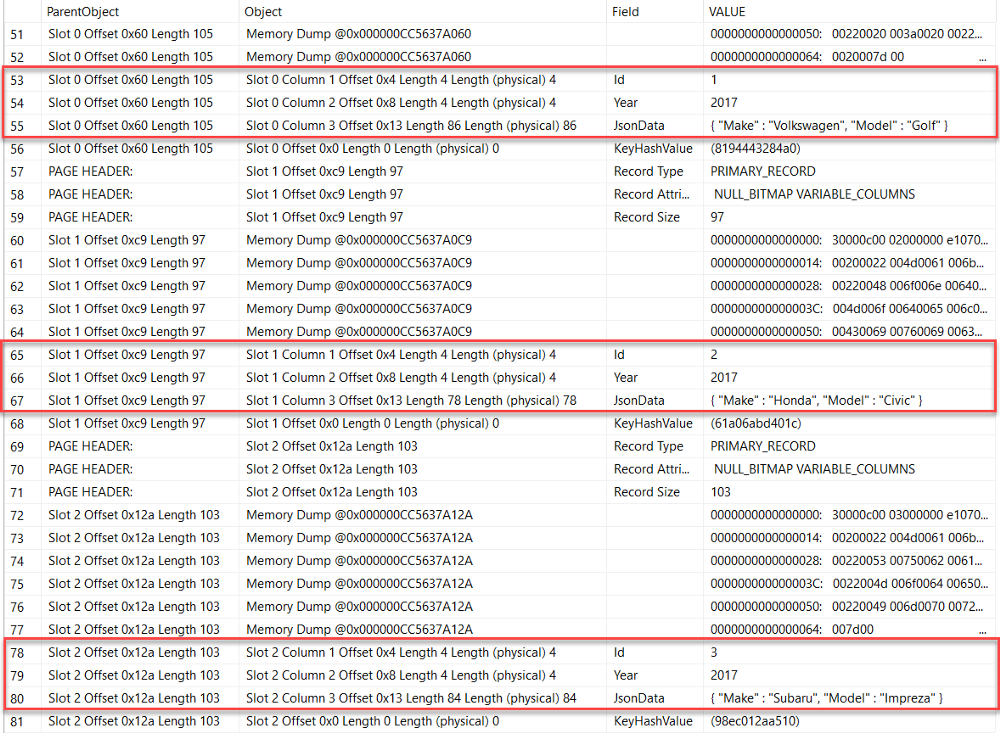

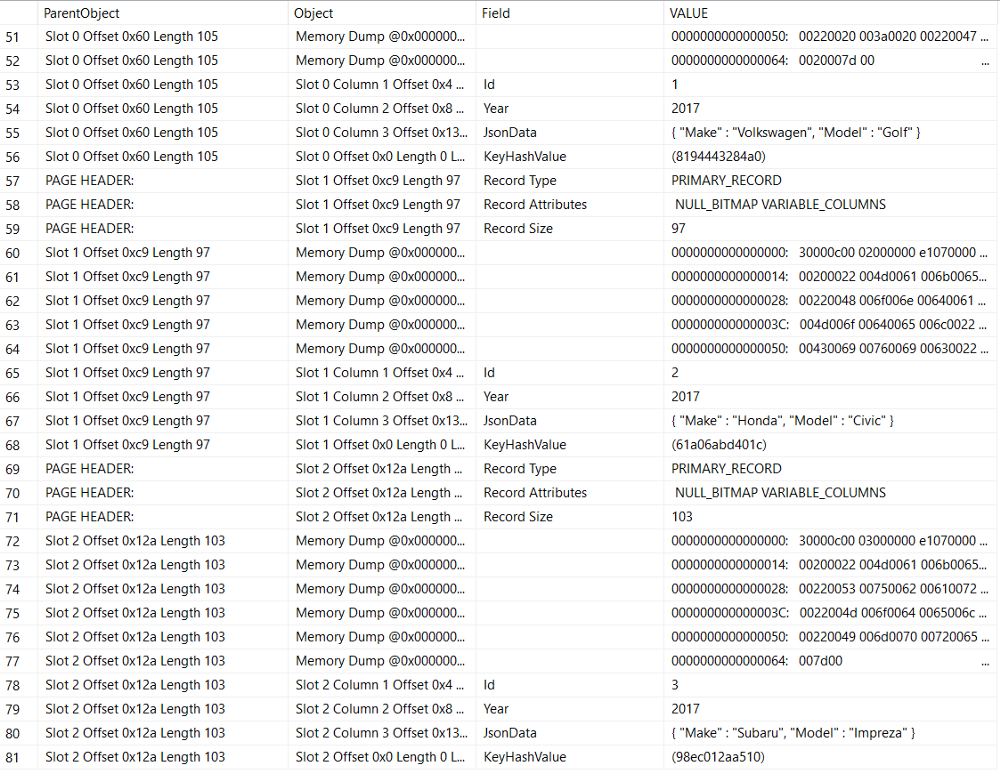

XML and JSON Functions

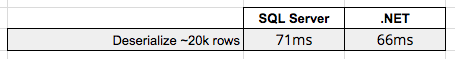

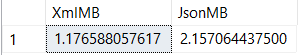

Last but not least, if your process is generating XML or JSON data at some point, consider generating that data on the SQL Server. Now, generating XML and JSON data won't always improve your performance — SQL Server is best at relational tasks and not large string creation — but in many cases, especially with JSON, SQL Server can outperform even the fastest .NET libraries.

If your network is your bottle neck, then it is very possible that SQL can apply complex logic and transform your data into XML or JSON faster on the SQL Server than if you needed to transfer all of that data to another location on the network and handle those transformations in another programming language.

In short: do as much work as possible in SQL

If your SQL queries could be following any of the above techniques and they're not, then fix them…today! Checking each of your queries for any of the above inefficiencies and mitigating them will probably (always test your changes) improve the performance of your applications and processes.

And then it won't feel like your process is taking forever to run.