
When In-Memory OLTP was first released in SQL Server 2014, I was excited to start using it. All I could think was "my queries are going to run so FAST!"

Well, I never got around to implementing In-Memory OLTP. Besides having an incompatible version of SQL Server at the time, the in-memory features had too many limitations for my specific use cases.

Fast forward a few years, and I've done nothing with In-Memory OLTP. Nothing, that is, until I saw Erin Stellato present at our Northern Ohio SQL Server User Group a few weeks ago. Her presentation inspired me to take another look at In-Memory OLTP to see if I could use it.

Use case: Improving ETL staging loads

After being refreshed on the ins and outs of in-memory SQL Server, I wanted to see if I could apply some of the techniques to one of my ETLs.

The ETL consists of two major steps:

- Shred documents into row/column data and then dump that data into a staging table.

- Delete some of the documents from the staging table.

In the real world, there's a step 1.5 that does some processing of the data, but it's not relevant to these in-memory OLTP demos.

So step one was to create my staging tables. The memory-optimized table is called "NewStage1" and the traditional disk-based table is called "OldStage1":

DROP DATABASE IF EXISTS InMemoryTest;

GO

CREATE DATABASE InMemoryTest;

GO

USE InMemoryTest;

GO

ALTER DATABASE InMemoryTest ADD FILEGROUP imoltp_mod CONTAINS MEMORY_OPTIMIZED_DATA;

GO

ALTER DATABASE InMemoryTest ADD FILE (name='imoltp_mod1', filename='C:\Program Files\Microsoft SQL Server\MSSQL14.MSSQLSERVER\MSSQL\DATA\imoltp_mod1') TO FILEGROUP imoltp_mod;

GO

ALTER DATABASE InMemoryTest SET MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT=ON;

GO

ALTER DATABASE InMemoryTest SET RECOVERY SIMPLE

GO

--Numbers Table

-- This needs to be in-memory to be called from a natively compiled procedure

DROP TABLE IF EXISTS InMemoryTest.dbo.Numbers;

GO

CREATE TABLE InMemoryTest.dbo.Numbers

(

n int

INDEX ix_n NONCLUSTERED HASH (n) WITH (BUCKET_COUNT=400000)

)

WITH (MEMORY_OPTIMIZED=ON, DURABILITY=SCHEMA_ONLY);

GO

INSERT INTO dbo.Numbers (n)

SELECT TOP (4000000) n = CONVERT(INT, ROW_NUMBER() OVER (ORDER BY s1.[object_id]))

FROM sys.all_objects AS s1 CROSS JOIN sys.all_objects AS s2

OPTION (MAXDOP 1);

-- Set up on-disk tables

DROP TABLE IF EXISTS InMemoryTest.dbo.OldStage1;

GO

CREATE TABLE InMemoryTest.dbo.OldStage1

(

Id int,

Col1 uniqueidentifier,

Col2 uniqueidentifier,

Col3 varchar(1000),

Col4 varchar(50),

Col5 varchar(50),

Col6 varchar(50),

Col7 int,

Col8 int,

Col9 varchar(50),

Col10 varchar(900),

Col11 varchar(900),

Col12 int,

Col13 int,

Col14 bit

);

GO

CREATE CLUSTERED INDEX CL_Id ON InMemoryTest.dbo.OldStage1 (Id);

GO

-- Set up in-memory tables and natively compiled procedures

DROP TABLE IF EXISTS InMemoryTest.dbo.NewStage1;

GO

CREATE TABLE InMemoryTest.dbo.NewStage1

(

Id int,

Col1 uniqueidentifier,

Col2 uniqueidentifier,

Col3 varchar(1000),

Col4 varchar(50),

Col5 varchar(50),

Col6 varchar(50),

Col7 int,

Col8 int,

Col9 varchar(50),

Col10 varchar(900),

Col11 varchar(900),

Col12 int,

Col13 int,

Col14 bit

INDEX ix_id NONCLUSTERED HASH (id) WITH (BUCKET_COUNT=10)

)

WITH (MEMORY_OPTIMIZED=ON, DURABILITY=SCHEMA_ONLY);

GO

A few things to keep in mind:

- The tables have the same columns and datatypes, with the only difference being that the NewStage1 table is memory optimized.

- My database is using simple recovery so I am able to perform minimal logging/bulk operations on my disk-based table.

- Additionally, I'm using the SCHEMA_ONLY durability setting. This gives me outstanding performance because there is no writing to the transaction log! However, this means if I lose my in-memory data for any reason (crash, restart, corruption, etc...) I am completely out of luck. This is fine for my staging data scenario since I can easily recreate the data if necessary.
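To double-check the points above, the catalog can confirm which tables are memory optimized and which durability setting each one uses. This is a minimal sketch, assuming the three tables were created in the dbo schema exactly as in the script above; `is_memory_optimized` and `durability_desc` are standard columns of `sys.tables`.

```sql
USE InMemoryTest;
GO

-- List the demo tables with their memory-optimized flag and durability.
-- NewStage1 and Numbers should report SCHEMA_ONLY; OldStage1 is disk-based.
SELECT
    t.name AS table_name,
    t.is_memory_optimized,
    t.durability_desc
FROM sys.tables AS t
WHERE t.name IN (N'OldStage1', N'NewStage1', N'Numbers')
ORDER BY t.name;
```

If a memory-optimized table ever reports SCHEMA_AND_DATA here, its changes are logged and the "no transaction log" advantage described above no longer applies.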

Inserting and deleting data

Next I'm going to create procedures for inserting data into, and deleting data from, both my new and old staging tables:

DROP PROCEDURE IF EXISTS dbo.Insert_OldStage1;

GO

CREATE PROCEDURE dbo.Insert_OldStage1

@Id int,

@Rows int

AS

BEGIN

INSERT INTO InMemoryTest.dbo.OldStage1 (Id, Col1, Col2, Col3, Col4, Col5, Col6, Col7, Col8, Col9, Col10, Col11, Col12, Col13, Col14)

SELECT Id, Col1, Col2, Col3, Col4, Col5, Col6, Col7, Col8, Col9, Col10, Col11, Col12, Col13, Col14

FROM

(

SELECT

@Id as Id,

'92D14DA3-2C55-4E50-A965-7D3C941417B3' as Col1,

'92D14DA3-2C55-4E50-A965-7D3C941417B3' as Col2,

'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' as Col3,

'aaaaaaaaaaaaaaaaaaaa' as Col4,

'aaaaaaaaaaaaaaaaaaaa' as Col5,

'aaaaaaaaaaaaaaaaaaaa' as Col6,

0 as Col7,

0 as Col8,

'aaaaaaaaaaaaaaaaaaaa' as Col9,

'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' as Col10,

'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' as Col11,

1 as Col12,

1 as Col13,

1 as Col14

)a

CROSS APPLY

(

SELECT TOP (@Rows) n FROM dbo.Numbers

)b

END;

GO

DROP PROCEDURE IF EXISTS dbo.Delete_OldStage1;

GO

CREATE PROCEDURE dbo.Delete_OldStage1

@Id int

AS

BEGIN

-- Use loop to delete to prevent filling transaction log

DECLARE

@Count int = 0,

@for_delete int,

@chunk_size int = 1000000

SELECT @for_delete = COUNT(Id) FROM InMemoryTest.dbo.OldStage1

WHERE Id = @Id;

WHILE (@Count < @for_delete)

BEGIN

SELECT @Count = @Count + @chunk_size;

BEGIN TRAN

DELETE TOP(@chunk_size) FROM InMemoryTest.dbo.OldStage1 WHERE Id = @Id

COMMIT TRAN

END

END;

GO

DROP PROCEDURE IF EXISTS dbo.Insert_NewStage1;

GO

CREATE PROCEDURE dbo.Insert_NewStage1

@Id int,

@Rows int

WITH NATIVE_COMPILATION, SCHEMABINDING

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

INSERT INTO dbo.NewStage1 (Id, Col1, Col2, Col3, Col4, Col5, Col6, Col7, Col8, Col9, Col10, Col11, Col12, Col13, Col14)

SELECT Id, Col1, Col2, Col3, Col4, Col5, Col6, Col7, Col8, Col9, Col10, Col11, Col12, Col13, Col14

FROM

(

SELECT

@Id as Id,

'92D14DA3-2C55-4E50-A965-7D3C941417B3' as Col1,

'92D14DA3-2C55-4E50-A965-7D3C941417B3' as Col2,

'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' as Col3,

'aaaaaaaaaaaaaaaaaaaa' as Col4,

'aaaaaaaaaaaaaaaaaaaa' as Col5,

'aaaaaaaaaaaaaaaaaaaa' as Col6,

0 as Col7,

0 as Col8,

'aaaaaaaaaaaaaaaaaaaa' as Col9,

'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' as Col10,

'aaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa' as Col11,

1 as Col12,

1 as Col13,

1 as Col14

)a

CROSS APPLY

(

SELECT TOP (@Rows) n FROM dbo.Numbers

)b

END;

GO

DROP PROCEDURE IF EXISTS dbo.Delete_NewStage1;

GO

CREATE PROCEDURE dbo.Delete_NewStage1

@Id int

WITH NATIVE_COMPILATION, SCHEMABINDING

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

DELETE FROM dbo.NewStage1 WHERE Id = @Id;

END

GO

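A few more things to note:

- My new procedures are natively compiled: SQL Server compiles them to machine code when they are created, so at run time it can just execute them without any extra steps. The procedures that target my old disk-based tables are regular interpreted T-SQL: their plans are compiled on first execution and cached, but the statements are still interpreted on every run.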

- In the old delete procedure, I am deleting data in chunks so my transaction log doesn't get full. In the new version of the procedure, I don't have to worry about this because, as I mentioned earlier, my memory optimized table doesn't have to use the transaction log.
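A quick way to confirm which of the four procedures ended up natively compiled is to look at `sys.sql_modules`. This is only a sketch, assuming the procedures were created with the names used above; `uses_native_compilation` is a standard column of that catalog view.

```sql
USE InMemoryTest;
GO

-- 1 = natively compiled module, 0 = regular interpreted T-SQL.
SELECT
    OBJECT_NAME(m.object_id) AS procedure_name,
    m.uses_native_compilation
FROM sys.sql_modules AS m
WHERE m.object_id IN (
    OBJECT_ID(N'dbo.Insert_OldStage1'),
    OBJECT_ID(N'dbo.Delete_OldStage1'),
    OBJECT_ID(N'dbo.Insert_NewStage1'),
    OBJECT_ID(N'dbo.Delete_NewStage1')
)
ORDER BY procedure_name;
```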

Let's simulate a load

It's time to see if all of this fancy in-memory stuff is actually worth all of the restrictions.

In my load, I'm going to mimic loading three documents with around 3 million rows each. Then, I'm going to delete the second document from each table:

-- Old on-disk method

-- Insert data for processing

EXEC InMemoryTest.dbo.Insert_OldStage1 @Id=1, @Rows=2500000;

GO

EXEC InMemoryTest.dbo.Insert_OldStage1 @Id=2, @Rows=3400000;

GO

EXEC InMemoryTest.dbo.Insert_OldStage1 @Id=3, @Rows=2800000;

GO

-- Delete set of records after processed

EXEC InMemoryTest.dbo.Delete_OldStage1 @Id = 2

GO

-- New in-memory method

-- Insert data for processing

EXEC InMemoryTest.dbo.Insert_NewStage1 @Id=1, @Rows=2500000;

GO

EXEC InMemoryTest.dbo.Insert_NewStage1 @Id=2, @Rows=3400000;

GO

EXEC InMemoryTest.dbo.Insert_NewStage1 @Id=3, @Rows=2800000;

GO

-- Delete set of records after processed

EXEC InMemoryTest.dbo.Delete_NewStage1 @Id = 2

GO

The in-memory version should have a significant advantage because:

- The natively compiled procedure is precompiled (shouldn't be a huge deal here since we are doing everything in a single INSERT INTO...SELECT).

- The in-memory table inserts/deletes don't have to write to the transaction log (this should be huge!)
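If you want to time the load yourself, one simple approach is to capture a timestamp before each step and report the elapsed milliseconds afterwards. This is a sketch of one way to measure, not necessarily how the numbers below were collected; the variable name `@start` is made up for illustration, and it assumes the staging table is empty before the run.

```sql
USE InMemoryTest;
GO

-- Keep everything in one batch: a GO between statements would
-- discard the @start variable.
DECLARE @start datetime2(3) = SYSDATETIME();

EXEC dbo.Insert_NewStage1 @Id = 1, @Rows = 2500000;
EXEC dbo.Insert_NewStage1 @Id = 2, @Rows = 3400000;
EXEC dbo.Insert_NewStage1 @Id = 3, @Rows = 2800000;

SELECT DATEDIFF(MILLISECOND, @start, SYSDATETIME()) AS insert_elapsed_ms;

-- Reset the clock and time the delete separately.
SET @start = SYSDATETIME();

EXEC dbo.Delete_NewStage1 @Id = 2;

SELECT DATEDIFF(MILLISECOND, @start, SYSDATETIME()) AS delete_elapsed_ms;
```

Swapping in the `OldStage1` procedures gives the disk-based timings for comparison.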

Results

-------------------- ---------------------------------------- -----------------------------------------
                     **Disk-based**                           **In-Memory**

INSERT 3 documents   65 sec                                   6 sec

DELETE 1 document    46 sec                                   0 sec

Total time           111 sec                                  6 sec

Difference           -95% slower                              1750% faster
-------------------- ---------------------------------------- -----------------------------------------

The results speak for themselves. In this particular example, in-memory blows the disk-based solution out of the water.
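As a sanity check on the Difference row, here is one way the two percentages can be derived from the total times. This is my reading of the figures, assuming both are computed from the 111 second and 6 second totals; the formulas are not spelled out anywhere above.

```sql
-- Total times in seconds, taken from the results table.
DECLARE @disk_sec int = 111, @mem_sec int = 6;

SELECT
    -- Extra time the disk-based run took, relative to the in-memory run.
    CAST((@disk_sec - @mem_sec) * 100.0 / @mem_sec AS decimal(10, 1)) AS pct_faster_in_memory,
    -- Change in run time going from disk-based to in-memory.
    CAST((@mem_sec - @disk_sec) * 100.0 / @disk_sec AS decimal(10, 1)) AS pct_change_in_run_time;
```

The first expression works out to 1750 and the second to roughly -95, which matches the table: the in-memory run took about 95% less time overall.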

Obviously there are downsides to in-memory (like consuming a lot of memory), but if you are going for pure speed in a scenario like this one, it's hard to beat.
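To see how much memory the in-memory tables are actually consuming, SQL Server exposes per-table figures in the `sys.dm_db_xtp_table_memory_stats` DMV. This is a minimal sketch, assuming the demo tables from earlier exist in the current database.

```sql
USE InMemoryTest;
GO

-- Memory allocated to and used by each memory-optimized demo table
-- and its indexes, in kilobytes.
SELECT
    OBJECT_NAME(ms.object_id) AS table_name,
    ms.memory_allocated_for_table_kb,
    ms.memory_used_by_table_kb,
    ms.memory_allocated_for_indexes_kb,
    ms.memory_used_by_indexes_kb
FROM sys.dm_db_xtp_table_memory_stats AS ms
WHERE ms.object_id IN (OBJECT_ID(N'dbo.NewStage1'), OBJECT_ID(N'dbo.Numbers'));
```

Running this right after the load and again after the delete gives a feel for how much headroom the server needs for a staging workload like this one.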

Warning! I am not you.

And you are not me.

While in-memory works great for my ETL scenario, there are many requirements and limitations. It's not going to work in every scenario. Be sure you understand the in-memory durability options to prevent any potential data loss and try it out for yourself! You might be surprised by the performance gains you'll see.

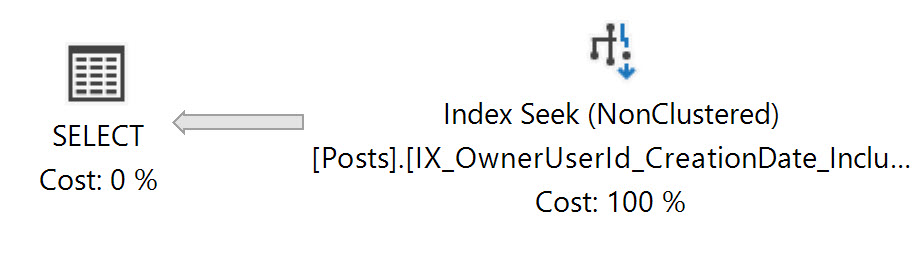

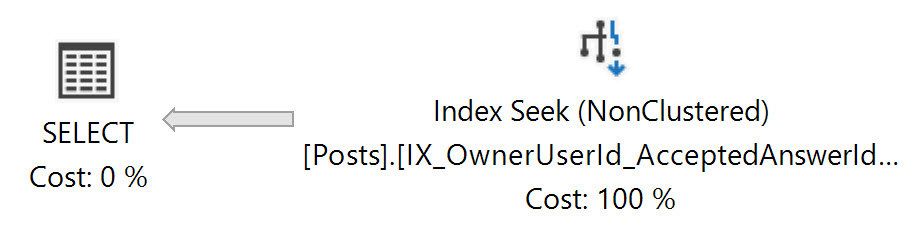

I wish all of my execution plans were this simple.

This execution plan looks the same, but you'll notice the smaller, more data dense index is being used.