With only a few days left in 2017, I thought it would be fun to do a year-in-review post. Below you'll find my top 5 favorites in a variety of SQL and non-SQL related categories. Hope you enjoy, and I'll see you with new content in 2018!

Watch this week's video on YouTube

Top 5 Blog Posts

"Top 5" is totally subjective here. These aren't necessarily ordered by view counts, shares, or anything like that. These just happen to be my personal favorites from this year.

- How NOLOCK Will Block Your Queries

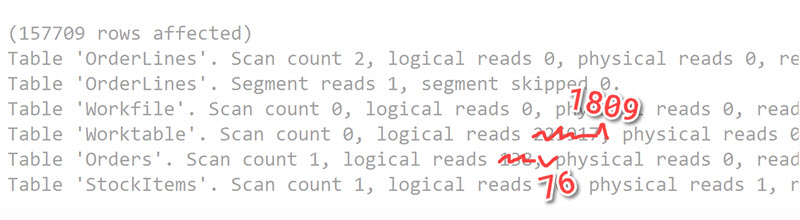

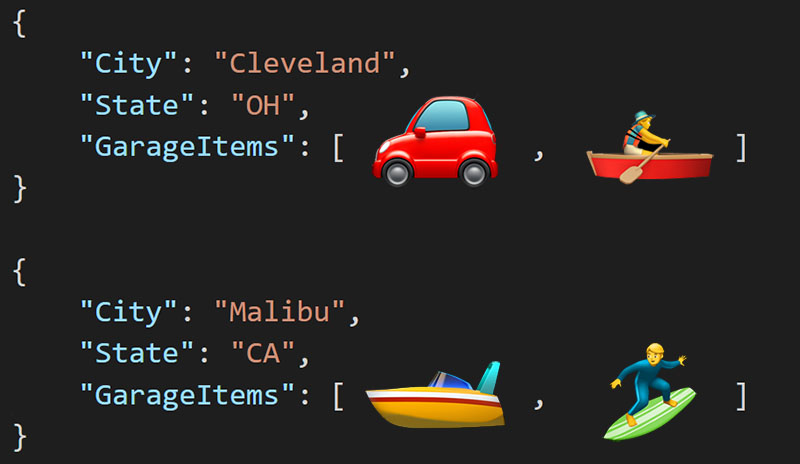

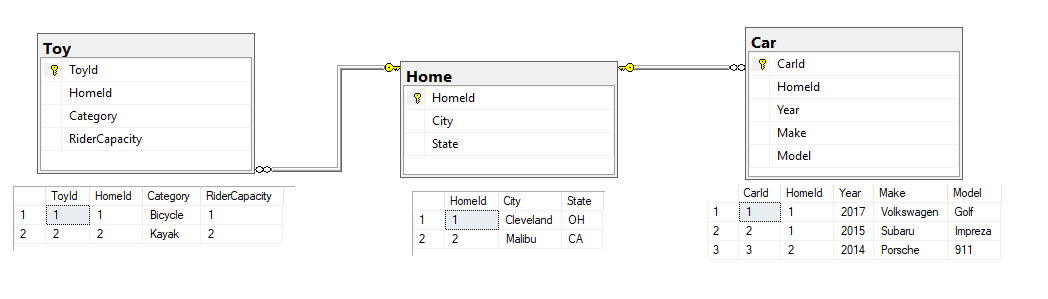

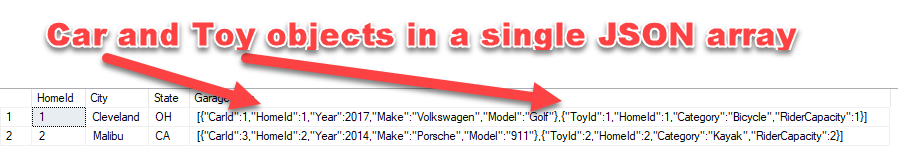

- One SQL Cheat Code For Amazingly Fast JSON Queries

- XML vs JSON Shootout: Which Is Superior In SQL Server 2016?

- ʼ;ŚℇℒℇℂƮ *: How Unicode Homoglyphs Can Thwart Your Database Security

- Are Your Indexes Being Thwarted By Mismatched Datatypes?

Top 5 Vlogs

You guys watched over 500 hours of my videos this year. Thank you!

Watch this week's video on YouTube

I think the content of this last video is good, but I don't actually like anything else about it. Why did I bother including it then?

It's the first SQL Server vlog I made this year. I'm a little embarrassed by how bad it is, but I keep it up as motivation for myself to see how much I've improved since I started filming videos.

If you ever think you want to start doing something - just start doing it. Keeping track of progress and watching how you evolve is extremely rewarding.

Top 5 Posts That Never Got Written

I keep a list of post ideas. Here are 5 ideas I didn't get to this year.

I'm not ruling out ever writing them, but don't hold your breath. If you are ambitious, feel free to steal them for yourself - just let me know when you do because I'd love to read them!

- "ZORK! in SQL" - I actually think it would be really fun to program one of my favorite text-based games in SQL Server. Don't get eaten by a grue!

- "How To Fly Under Your DBA's Radar" - this could really go either way: how to do sneaky things without your DBA knowing OR how to be a good SQL developer and not get in trouble with your DBA.

- "Geohashing in SQL Server" - Geohashes are really cool. It'd be fun to write about how to create them in SQL Server (probably with CLR, though it might be doable with some crazy T-SQL).

- "A SQL Magic Trick" - From age 12 to 18 I worked as a magician. Sometimes I dream of teaching a SQL concept via a card trick. Don't rule this one out.

- "Alexa DROP DATABASE" - Write an Alexa skill to manage your Azure SQL instance. I know this is technically feasible; I just don't know how useful it would be.

Top 5 Tweets/Instagrams

I'm not a huge social media guy to begin with, but I do like sharing photos.

https://www.instagram.com/p/BTynN1gjTyj/

https://twitter.com/bertwagner/status/903712788538949633

https://twitter.com/bertwagner/status/894373268857212929

https://www.instagram.com/p/BP08U99j3Wh/

https://twitter.com/bertwagner/status/892717494166802432

Top 5 Catch-All

These are some of the random things that helped me get through the year.

- Red Bird Coffee. This is premium coffee at affordable prices. The Ethiopian Aricha is the best coffee I've ever had - tastes like red wine and chocolate.

- Vulfpeck's The Beautiful Game - I listened to this album more than any other to get into a working groove. So funky.

- Pinpoint: How GPS Is Changing Technology, Culture, and Our Minds - This was probably my favorite book of the year. If you've ever wondered how GPS works, or about the implications of a more connected world, this book is absolutely fascinating.

- LED Lighting Strips - I put these behind my computer monitors to create some nice lighting for filming, but I've found myself leaving them on all the time because they add a nice contrasting backlight to my screens.

- BONUS! Mechanical Keyboard - This thing is inexpensive, but amazing. I didn't realize what I was missing out on until I started typing on it. The sound of the clacking keys brought on an immediate flashback to the 1990s when I last had a mechanical keyboard. I don't know if it allows me to type faster like many users claim, but I am definitely happier typing on it. CLACK! CLACK! CLACK!
