I do my best work in the mornings. Evenings are pretty good too once I get a second wind.

Late afternoons are my nemesis for getting any serious technical or creative work done. Usually I reserve that time for responding to emails, writing documentation, and brewing coffee.

Some afternoons, though, I can't help myself and end up getting into trouble.

What is THAT!?

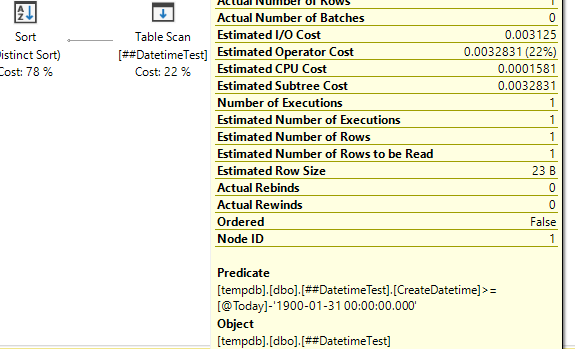

It all started when I was troubleshooting an existing query that was having issues. During the process of trying to understand what the query was doing, I happened to look at the execution plan:

    CREATE TABLE ##DatetimeTest
    (
        SomeField varchar(50) NULL,
        CreateDatetime datetime
    );

    INSERT INTO ##DatetimeTest VALUES ('asdf',GETDATE());

    DECLARE @Today datetime = GETDATE();

    SELECT DISTINCT
        *
    FROM
        ##DatetimeTest
    WHERE
        CreateDatetime >= @Today-30;

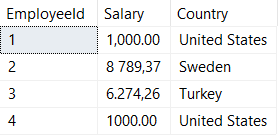

When I hovered over the Table Scan, the Predicate section caught my eye. Specifically, I wondered "Why is 1900-01-31 showing up? I don't have that anywhere in my query!"

(fun side story: the real query I was working on was dealing with user-defined datatypes, something I don't have experience with, so I thought those had something to do with the problems I was experiencing. I latched onto this 1900 date as the potential cause but it ended up being a red herring!)

Help!

Like I mentioned, late afternoons are not when I do my best work. I couldn't figure out why SQL Server was converting my -30 to January 31, 1900.

Intrigued and having no clue what was going on, I decided to post the question with the #sqlhelp hashtag on Twitter. Fortunately for me, Aaron Bertrand, Jason Leiser, and Thomas LaRock all came to the rescue with ideas and answers - thanks guys!

Implicit Conversion

In hindsight, the answer is obvious: the 30 implicitly converts to a datetime (the data type of my @Today variable), in this case 30 days after the datetime base date, 1900-01-01. SQL Server then subtracts that converted value from @Today.

This makes perfect sense: SQL Server needs to do some math, and in order to do so it first needs to make sure both datatypes in the expression match. Since datetime has a higher data type precedence than int, the int gets implicitly converted to datetime rather than the other way around. SQL Server was just doing its job.
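If you want to see the conversion for yourself, here's a minimal sketch. The column aliases are just names I made up for illustration, and the comments reflect what I'd expect on a default SQL Server setup:

```sql
-- An int cast to datetime is treated as a number of days after 1900-01-01.
SELECT
    CAST(0 AS datetime)  AS BaseDate,      -- 1900-01-01 00:00:00.000
    CAST(30 AS datetime) AS ThirtyDaysIn;  -- 1900-01-31 00:00:00.000

DECLARE @Today datetime = GETDATE();

-- All three columns should return the same value:
-- the shorthand, what the execution plan shows, and the explicit DATEADD.
SELECT
    @Today - 30                   AS Shorthand,
    @Today - CAST(30 AS datetime) AS WhatThePlanShows,
    DATEADD(day, -30, @Today)     AS Explicit;
```

The middle column is the interesting one: it's the same expression the Predicate tooltip was showing, just with the conversion spelled out.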

Future Problems

As I mentioned earlier, this int to datetime conversion wasn't the actual issue with my query - in my drowsy state I mistook it as being the source of my problem.

And while it wasn't a problem this time, it can become a problem in the future.

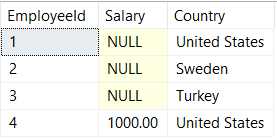

Aaron has an excellent article on the problems with shorthand date math, but the most relevant future issue with my query is: what if someone in the future decides to update all datetimes to datetime2s (datetime2 being Microsoft's recommended datatype for new work)?

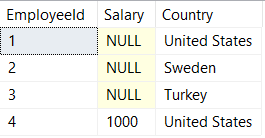

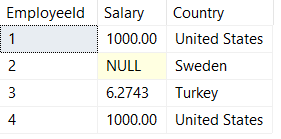

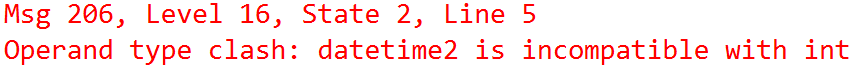

If we update to datetime2 and run the query again:

    ALTER TABLE ##DatetimeTest
    ALTER COLUMN CreateDatetime datetime2;

    DECLARE @Today datetime2 = GETDATE();

    SELECT DISTINCT
        *
    FROM
        ##DatetimeTest
    WHERE
        CreateDatetime >= @Today-30;

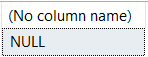

AHHH! While SQL Server had no problem converting our previous code between datetime and int, it's not so happy about converting datetime2.

Morals

In the end, the above scenario had nothing to do with the actual problem I had on hand (which had to do with some operator precedence confusion).

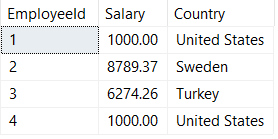

To avoid future confusion and problems, it's still better to refactor the code to be explicit about what you want by using the DATEADD() function:

    DECLARE @Today datetime = GETDATE();

    SELECT DISTINCT
        *
    FROM
        ##DatetimeTest
    WHERE
        CreateDatetime >= DATEADD(day, -30, @Today);
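The nice part is that the explicit version should keep working after a datetime2 migration. Here's a rough sketch, assuming the ##DatetimeTest table from earlier still exists in your session with its column already altered to datetime2. Using SYSDATETIME() is my own substitution here, since it returns a datetime2 natively; GETDATE() would work too.

```sql
DECLARE @Today datetime2 = SYSDATETIME();

-- The shorthand no longer compiles once @Today is a datetime2:
-- SELECT @Today - 30;  -- Operand type clash: datetime2 is incompatible with int

-- DATEADD accepts datetime2 and returns datetime2, so no int conversion is needed.
SELECT DATEADD(day, -30, @Today) AS ThirtyDaysAgo;

-- Same filter as before, against the ##DatetimeTest table created earlier in this post.
SELECT DISTINCT
    *
FROM
    ##DatetimeTest
WHERE
    CreateDatetime >= DATEADD(day, -30, @Today);
```

Because DATEADD names the unit (day) explicitly, the intent also stays readable to whoever troubleshoots this query next, hopefully not in the late afternoon.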