SQL Server's cost-based query optimizer does a pretty good job of figuring out what order to filter your data to get fast query executions. It considers things like index coverage, data distribution, and much more to decide how to retrieve your query's data.

However, these good intentions can become problematic in certain situations where you know more about your data than SQL Server does. When this happens, the order SQL Server chooses to execute predicates is important not just for performance of your query but for the business logic as well.

A Column With Mixed Data Types

Let's look at the following example table and data:

USE master;

DROP DATABASE IF EXISTS MixedDataTypes;

CREATE DATABASE MixedDataTypes;

GO

USE MixedDataTypes;

GO

CREATE TABLE dbo.Pages

(

Id int identity,

PageName varchar(20),

DataValue varchar(100),

DataType varchar(20),

CONSTRAINT PK_Id PRIMARY KEY (Id)

);

GO

INSERT INTO dbo.Pages VALUES ('StringsOnlyPage 1','abc','string')

GO 2000

INSERT INTO dbo.Pages VALUES ('NumbersOnlyPage 1','1.20','decimal')

GO 2000

INSERT INTO dbo.Pages VALUES ('NumbersOnlyPage 2','1.20','decimal')

GO 2000

INSERT INTO dbo.Pages VALUES ('MixedDataTypesPage 1','abc','string')

GO 1000

INSERT INTO dbo.Pages VALUES ('MixedDataTypesPage 1','1.20','decimal')

GO 1000

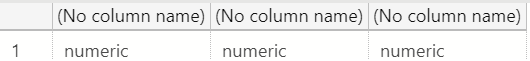

This table stores data for an application that has many different types of Pages. Each Page stores different types of data, but instead of creating a separate table for each type, we store all the different data in the varchar DataValue column and maintain the original data type in the DataType column.

This structure reduces the complexity required for maintaining our database (compared to creating possibly hundreds of tables, one for each PageName) and makes querying easier (only need to query one table). However, this design could also lead to some unexpected query results.
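To see how the mixed types are distributed, a quick sanity-check query can group the rows by page and declared type. This is only a sketch that assumes the dbo.Pages table and inserts shown above; the RowCnt alias is my own naming:

```sql
-- Count rows per page and declared data type in the sample table.
SELECT PageName, DataType, COUNT(*) AS RowCnt
FROM dbo.Pages
GROUP BY PageName, DataType
ORDER BY PageName, DataType;
```

With the inserts above, this should show that the two NumbersOnlyPage pages hold only 'decimal' rows (2000 each), StringsOnlyPage 1 holds 2000 'string' rows, and MixedDataTypesPage 1 holds 1000 rows of each type.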

Filtering Mixed Data Values

Let's say we want to retrieve all data from one Page where the value is 1.2:

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName = 'NumbersOnlyPage 1' AND DataValue = '1.2'

This query runs without error, but it returns no rows. Our original value was a decimal stored as the string '1.20', and the string '1.20' does not equal the string '1.2', so this string-based comparison doesn't work. What we really want is a numeric comparison in our predicate:

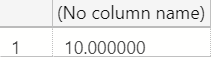

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName = 'NumbersOnlyPage 1' AND DataValue = 1.2

While the implicit conversion occurring on the table's DataValue column is not ideal, if the number of rows it needs to convert is small it's not so bad (plus, this isn't the point of today's post, so try and look past it for a few more moments).
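The difference between the two comparisons can be seen in isolation with literals. The following is a minimal sketch (the column aliases are mine) that assumes nothing beyond standard data type precedence, where a varchar compared to a numeric value is implicitly converted to numeric:

```sql
-- String comparison: '1.20' and '1.2' are different strings.
-- Numeric comparison: varchar has lower data type precedence than numeric,
-- so the string side is implicitly converted and 1.20 equals 1.2.
SELECT
    CASE WHEN '1.20' = '1.2' THEN 'match' ELSE 'no match' END AS StringComparison,
    CASE WHEN '1.20' = 1.2   THEN 'match' ELSE 'no match' END AS NumericComparison;
```

The first column should come back as 'no match' and the second as 'match', which mirrors what happens to the DataValue column in the two queries above.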

Here comes the fun: what if we want to check all our Pages that contain numeric data for values of 1.2? We could write this query in a couple of different ways:

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName like 'NumbersOnlyPage%' AND DataValue = 1.2

--or

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName in ('NumbersOnlyPage 1','NumbersOnlyPage 2') AND DataValue = 1.2

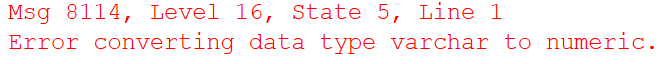

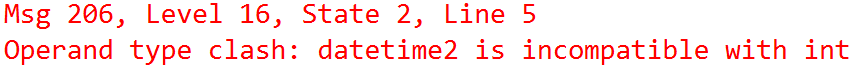

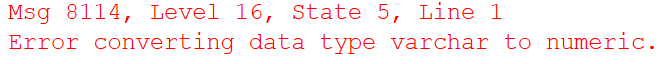

For both queries, we receive the error "Error converting data type varchar to numeric".

Why? In this case SQL Server decides to do the implicit conversions on the DataValue column first, before filtering on our PageName column.

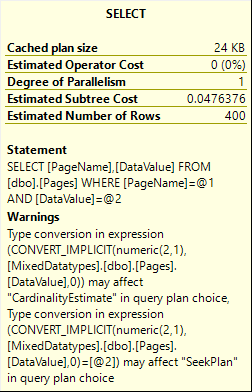

Up until this last query, SQL Server was deciding that it would be more efficient to filter the rows down to the specific Page first and then do the implicit conversions on the DataValue column. However, now that we are selecting more than one Page, SQL Server determines that since it has to scan everything anyway, it might as well do all of the implicit conversions first and filter on PageName later.

The problem of course is that not all of our DataValue values are numeric. In this case the order of the predicates does matter, not for performance but to be able to correctly execute the business logic that we defined as part of our query.
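If you want to see which rows would break a convert-first plan, one possible diagnostic is to look for values that don't convert. This sketch assumes SQL Server 2012 or later (for TRY_CONVERT) and the decimal(2,1) type that the literal 1.2 implies; the NonConvertibleRows alias is my own:

```sql
-- List the page/type combinations whose DataValue cannot be converted
-- to the decimal type used in the comparison. TRY_CONVERT returns NULL
-- instead of raising an error when a value does not convert.
SELECT PageName, DataType, COUNT(*) AS NonConvertibleRows
FROM dbo.Pages
WHERE DataValue IS NOT NULL
  AND TRY_CONVERT(decimal(2,1), DataValue) IS NULL
GROUP BY PageName, DataType
ORDER BY PageName;
```

On the sample data this should surface only the 'string' rows (the 'abc' values), which are exactly the rows that trigger the conversion error whenever SQL Server converts before filtering on PageName.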

Not Good Solutions

One way we can fix this is to tempt SQL Server to filter on PageName first by adding an index:

CREATE NONCLUSTERED INDEX IX_PageName ON dbo.Pages (PageName) INCLUDE (DataValue);

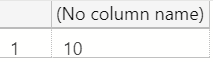

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName like 'NumbersOnlyPage%' AND DataValue = 1.2

This works great. SQL Server decides that since this index covers all the fields in our query, and because the index key is PageName, it will filter the rows on PageName first and perform the implicit conversions on the remaining rows.

The problem with this is that it's not guaranteed. Something may happen that will cause SQL Server not to use this index in the future: our index doesn't cover our query anymore, we add some additional filtering, the index is removed so it can be replaced by a different index that will no longer be selected for this particular query, etc.

It just isn't a reliable option.
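One way to convince yourself of how fragile this is would be to remove the index and rerun the query. The sketch below assumes the IX_PageName index created above; whether the error actually returns depends on the plan SQL Server picks, so treat it as an experiment rather than a guaranteed outcome:

```sql
-- Simulate the index disappearing (for example, during index consolidation).
DROP INDEX IX_PageName ON dbo.Pages;
GO

-- Same query as before. Without the covering index the optimizer is free
-- to go back to a plan that converts DataValue before filtering on PageName,
-- in which case the conversion error comes back.
SELECT PageName, DataValue
FROM dbo.Pages
WHERE PageName LIKE 'NumbersOnlyPage%' AND DataValue = 1.2;
GO

-- Recreate the index to continue with the rest of the examples.
CREATE NONCLUSTERED INDEX IX_PageName ON dbo.Pages (PageName) INCLUDE (DataValue);
GO
```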

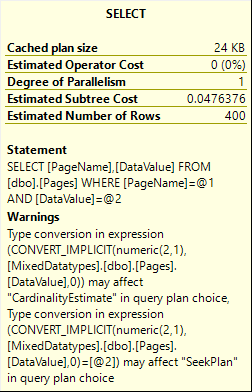

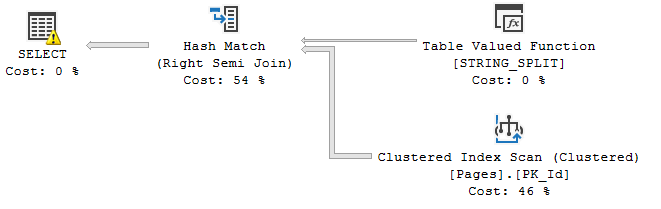

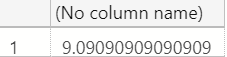

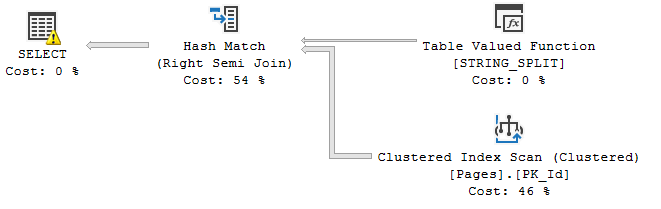

Plus it doesn't work in all scenarios. Let's say we parameterize the PageName and use the STRING_SPLIT() function to filter our Pages to only those passed in:

DECLARE @PageNames varchar(100) = 'NumbersOnlyPage 1,NumbersOnlyPage 2';

SELECT *

FROM dbo.Pages

WHERE PageName in (SELECT value FROM string_split(@PageNames,',')) AND DataValue = 1.2

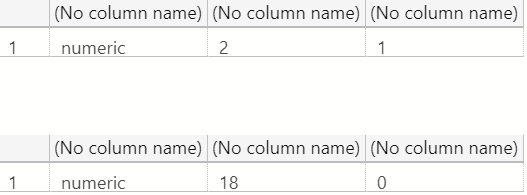

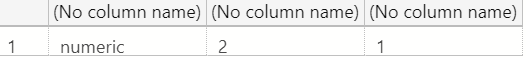

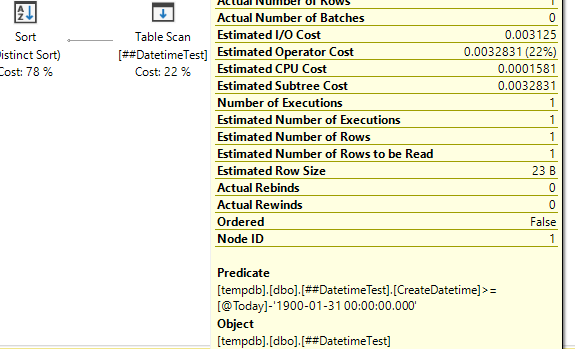

We are back to square one. In this case STRING_SPLIT() needs to parse the @PageNames string first and then join its output to the rest of the data, which brings back our original failure scenario (as the estimated execution plan shows).

Other Options

So while indexing seems to fix the problem, it's not guaranteed to work 100% of the time.

Obviously we could choose not to store data in this format, but that would add complexity to the database and app.

We could try to add the PageName filter into a derived table and force the join order, but that's ugly and will force us to read the table multiple times.

Since we also have data type information available for each row, we might consider utilizing that information:

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName like 'NumbersOnlyPage%' AND DataValue = 1.2 AND DataType = 'decimal'

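But once again, if this works it's through sheer luck: nothing guarantees that SQL Server evaluates the DataType predicate before it attempts the conversion.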

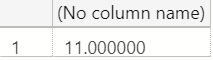

TRY_CONVERT() is another option. This function returns NULL if it can't convert a value to the requested type (a decimal in this case):

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName like 'NumbersOnlyPage%' AND TRY_CONVERT(decimal(2,1),DataValue) = 1.2

This is actually a pretty good option since it's guaranteed to work regardless of which column SQL Server filters on first. The trade-off is that the conversion still has to run for every row SQL Server evaluates, so if the number of DataValues you have to TRY and CONVERT is relatively small, this may be your best choice.
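To make the behavior concrete, here is a small sketch of TRY_CONVERT() applied to the two kinds of values stored in the sample table (the column aliases are mine):

```sql
-- A numeric string converts; a non-numeric string yields NULL instead of an error.
SELECT
    TRY_CONVERT(decimal(2,1), '1.20') AS NumericString,     -- 1.2
    TRY_CONVERT(decimal(2,1), 'abc')  AS NonNumericString;  -- NULL
```

Because a comparison such as NULL = 1.2 evaluates to unknown, rows with non-numeric values are simply filtered out of the WHERE clause rather than failing the query.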

For better performance, you can create a second column that contains data in decimal (or any other type) format:

ALTER TABLE dbo.Pages

ADD DataValueDecimal AS TRY_CONVERT(decimal(2,1),DataValue) PERSISTED

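You could index both DataValue* columns and your performance would be pretty good. The downside here of course is that your app queries will have to change to match the new table structure. Note that the CASE expression below returns only the decimal column: if it also returned the varchar DataValue in an ELSE branch, data type precedence would make SQL Server convert DataValue to decimal again, and the original error could come back:

SELECT PageName,DataValue

FROM dbo.Pages

WHERE PageName like 'NumbersOnlyPage%' AND CASE WHEN DataType = 'decimal' THEN DataValueDecimal END = 1.2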
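The indexing idea mentioned above could look something like the following. This is a sketch under assumptions: the index name is hypothetical, the DataValueDecimal computed column has already been added, and the session uses the SET options SQL Server requires for indexes on computed columns:

```sql
-- Hypothetical index name; keyed on PageName and the persisted computed column.
CREATE NONCLUSTERED INDEX IX_PageName_DataValueDecimal
    ON dbo.Pages (PageName, DataValueDecimal)
    INCLUDE (DataValue);
GO

-- Compare against the typed column directly. Non-numeric rows hold NULL in
-- DataValueDecimal, so they are filtered out instead of raising an error.
SELECT PageName, DataValue
FROM dbo.Pages
WHERE PageName LIKE 'NumbersOnlyPage%' AND DataValueDecimal = 1.2;
```

Filtering on the typed column alone keeps the predicate simple enough that SQL Server should be able to seek on the index rather than evaluate an expression per row.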

In conclusion, it's tough to say what the best option is for this type of scenario. However, it's important to keep in mind that if you decide to structure your data and write your queries this way, you need to plan for order of operation issues and handle errors gracefully.
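As one illustration of handling the error gracefully, the query could be wrapped in TRY...CATCH with a safe fallback. This is only a sketch: the fallback strategy is my assumption, and 8114 is the error number SQL Server reports for "Error converting data type varchar to numeric", which is worth verifying on your version:

```sql
BEGIN TRY
    -- Fast path: relies on SQL Server filtering on PageName before converting.
    SELECT PageName, DataValue
    FROM dbo.Pages
    WHERE PageName LIKE 'NumbersOnlyPage%' AND DataValue = 1.2;
END TRY
BEGIN CATCH
    IF ERROR_NUMBER() = 8114
    BEGIN
        -- Fallback: TRY_CONVERT never raises the conversion error.
        SELECT PageName, DataValue
        FROM dbo.Pages
        WHERE PageName LIKE 'NumbersOnlyPage%'
          AND TRY_CONVERT(decimal(2,1), DataValue) = 1.2;
    END
    ELSE
    BEGIN
        -- Anything else is unexpected, so rethrow it to the caller.
        THROW;
    END
END CATCH;
```

Keep in mind that the client may still receive an empty or partial result set from the first SELECT before the error fires, so in practice it may be simpler to use the TRY_CONVERT() version directly.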