This post is a response to this month's T-SQL Tuesday #107 prompt by Jeff Mlakar. T-SQL Tuesday is a way for the SQL Server community to share ideas about different database and professional topics every month.

This month's Halloween-themed topic asks us to "... share a story about a project you worked on or were impacted by that went horribly wrong."

Watch this week's video on YouTube

I've been fortunate enough to never have been part of a large disastrous project at work. My projects always have a "fail fast" mentality, so they never build up to a point where they come crashing down in a death spiral.

But that's not to say I haven't experienced my own project horror story in my personal work.

A while back I set a goal to produce a quality SQL Server focused blog post and video every week. Essentially this means I start a new small-scale project each week in which I play the part of project manager, developer, analyst, etc., with a delivery deadline of every Tuesday morning. While I've gotten better at this process over time, I have also failed to meet my personal goals numerous times for a variety of reasons.

Scope Creep-y

In order to meet my weekly deadline, I need to stay laser focused on the topic I choose for that particular week. If I get additional ideas while writing and start trying to incorporate them into my post (i.e., scope creep), I inevitably miss midweek milestones and have to make up time elsewhere to meet my deadline.

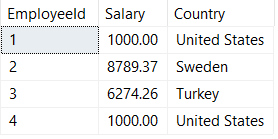

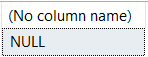

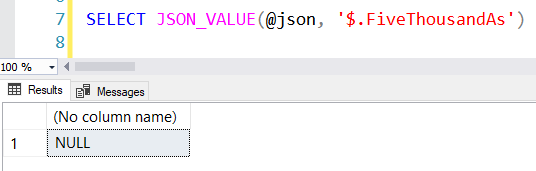

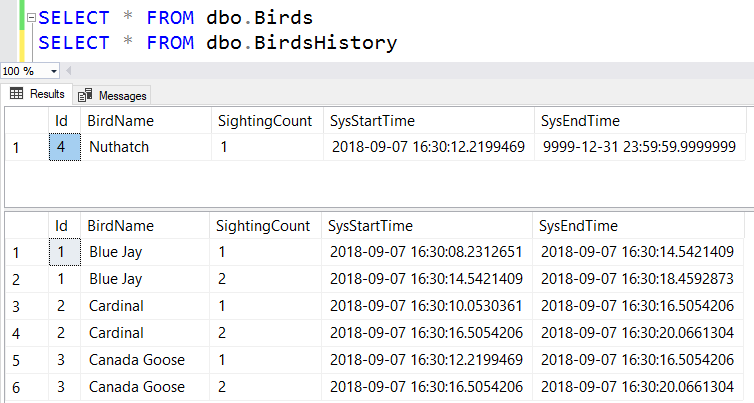

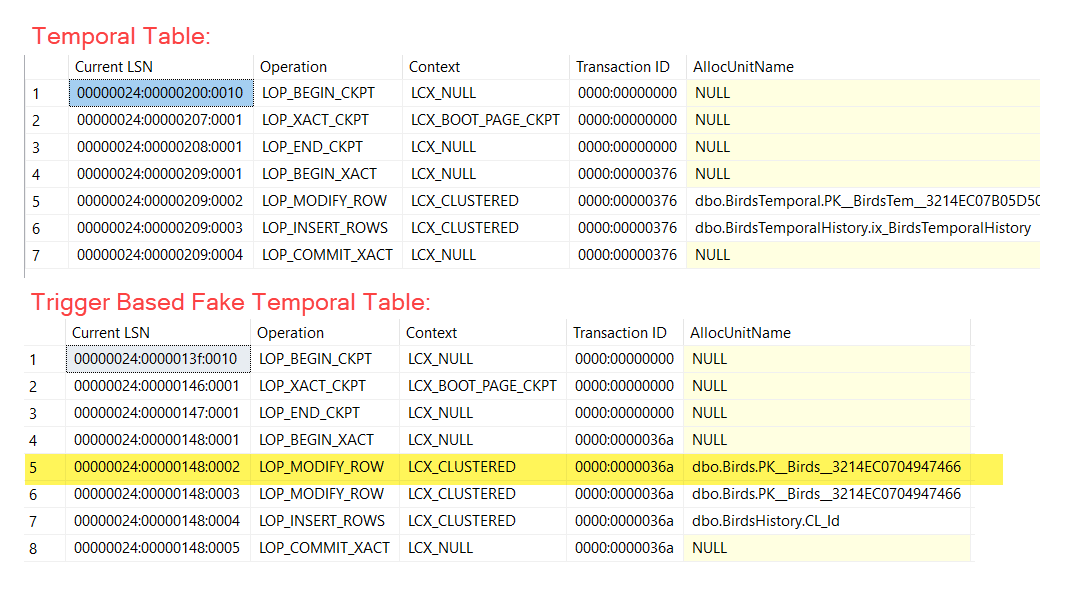

One instance of scope creep I experienced earlier this year came when I was trying to write a post on how to build a table-driven validation system.

I've built many table-driven processes in the past, so this seemed like it would be an easy topic to write about. I started that week's blogging process by building the demo templates that would include table structures, execution scripts, etc.

Instead of wrapping up my basic demos so I could move on to writing the actual post, I kept building out demos for more features: logging functions, parameterization, SQL injection protection, common performance problems, etc.

It was exciting to be building all of this out, but instead of creating one week's content, I realized I had started working on enough demos for several weeks of posts. This wouldn't necessarily have been a bad thing on its own; after all, it's nice to be a few weeks ahead on content creation.

However, I didn't quite finish enough demos for any one post in particular, and due to some other life events I didn't get back to working on my demos until Sunday afternoon. Normally at that point I'd already have my demos done, a blog post written, a video filmed, and a video edit that is either finished and uploading to YouTube or very close to it. What I had instead was a bunch of half-finished SQL demos saved in a very roughly outlined blog post.

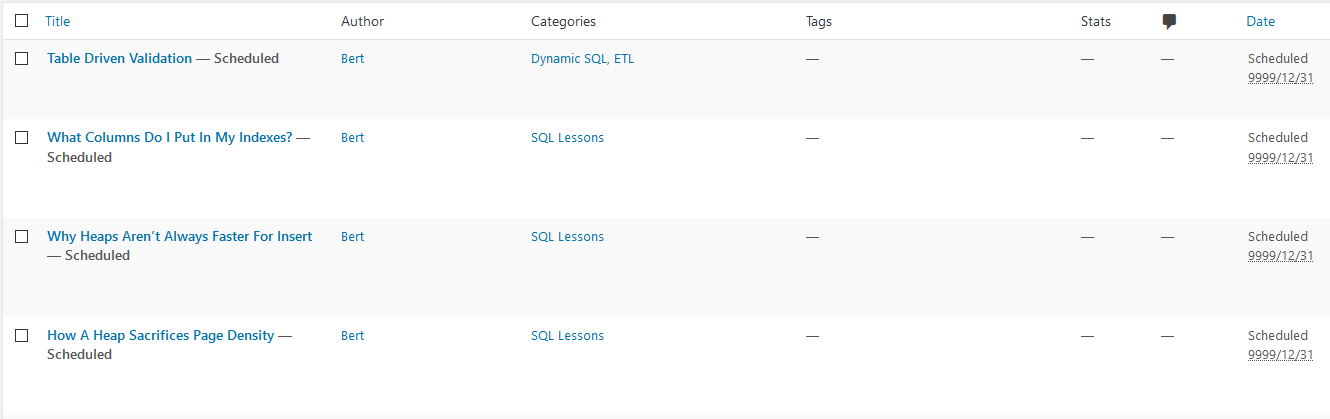

The Project Graveyard

This isn't the first time poor time management and scope creep have gotten me in trouble.

I have several posts that I've invested a good amount of time into but never released because they are incomplete. In almost all of these cases my problems stemmed from poor planning and scope creep.

In the case of my table-driven post, by late Sunday afternoon I realized I was going to miss my weekly deadline if I continued with that post, so I scrapped the idea for the time being and quickly wrote and shot a different post on an SSMS trick instead. It was discouraging to have to do that, but at the end of the day I was able to meet my weekly deadline, even if it was with a different result than I initially expected.

You might be thinking, "Why not ignore deadlines and release the post later in the week/month?" For me, I like my weekly deadlines because I like the creative challenges that come from having time constraints. They force me to limit my scope and work on different projects on a regular basis. My goal in blogging and video making is to learn how to present information succinctly so that my communication skills, both written and verbal, improve. So while I can (and probably will) complete these posts at some point in the future, I treat them as failures for that particular week's project.

And while failures aren't particularly fun, they can wind up being great learning opportunities: after all, I haven't gotten that far off track due to scope creep since.